Question: Can you recommend a solution to reduce toxic behavior in online gaming communities?

Modulate

If you want to cut down on toxic behavior in your online gaming community, Modulate offers ToxMod, an AI tool that analyzes voice chat conversations, flags problems, and sends them to your moderation tools. It integrates with existing player reporting tools and works in multiple languages. Modulate is designed to comply with regulations like GDPR and COPPA, and it offers enterprise-level support, so it can suit AAA studios and indie developers alike.

Getgud

Another tool worth a look is Getgud, an AI-powered observability platform. It offers real-time visibility into game and player behavior, including toxic behavior. It can take automated actions for particular groups of players, and it can replay matches so you can review what happened. Getgud supports different types of games and platforms, and it offers flexible data plans for different needs.

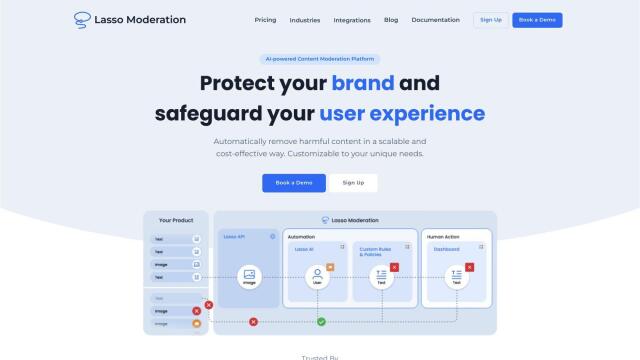

Lasso

For broader content moderation, Lasso automates up to 99% of moderation work across text, images and video. It offers a customizable dashboard and regulatory compliance features, so it can be used in a variety of situations. Because Lasso is used in many industries besides gaming, its approach to moderation is more general-purpose and can be adapted to particular content challenges.

Ansy

Last, Ansy is an AI chatbot that integrates with Discord and Slack to answer community FAQs automatically. It learns from user interactions and can offer contextually relevant answers, which frees up moderators' time. Ansy's continuous learning and security features make it a good way to improve community engagement and support.