Question: I'm looking for a platform that simplifies the development and deployment of Retrieval-Augmented Generation systems.

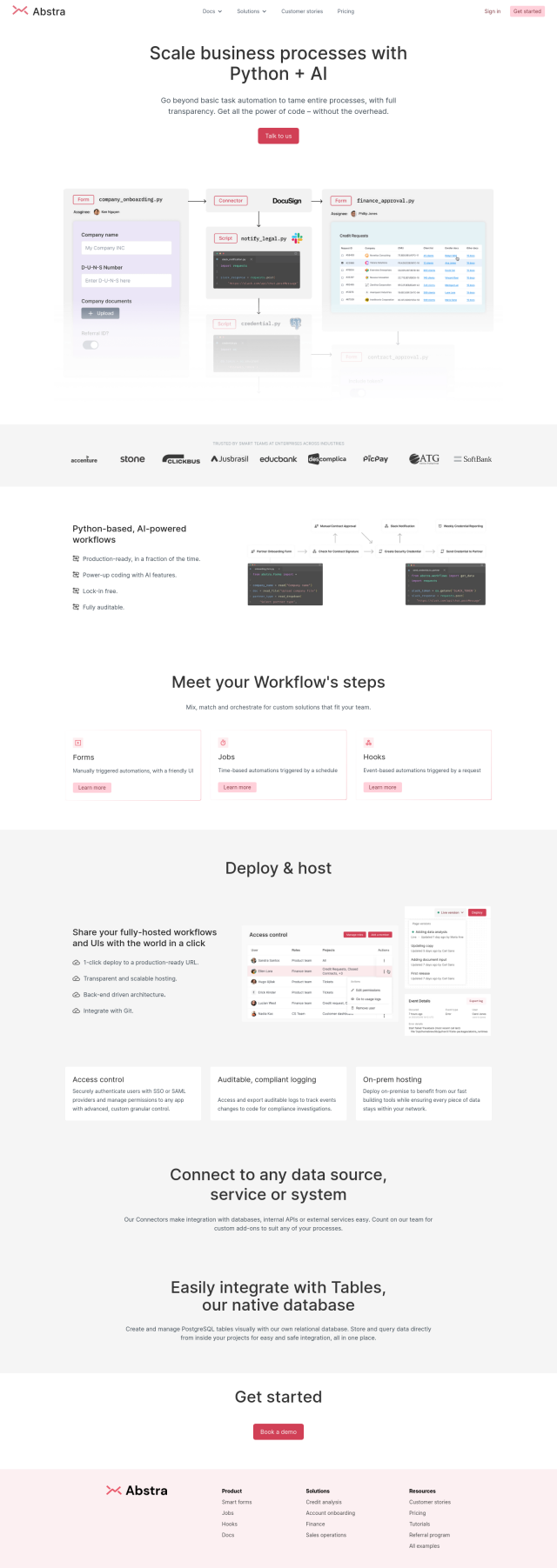

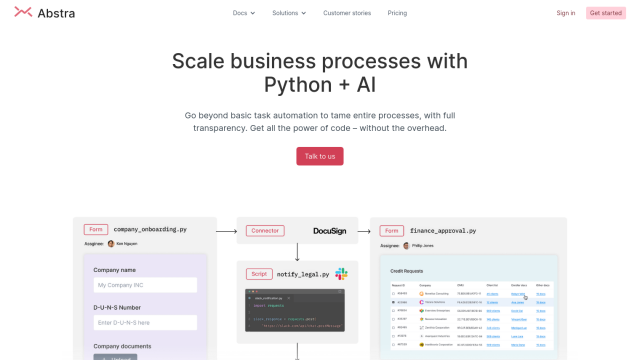

SciPhi

If you need a platform to streamline the development and deployment of Retrieval-Augmented Generation (RAG) systems, SciPhi is a good option. It offers a wide range of features, including flexible document ingestion, powerful document management and dynamic scaling. SciPhi supports state-of-the-art methods like HyDE and RAG-Fusion, can be connected to GitHub, and can be deployed to cloud or on-prem infrastructure using Docker. It is open source, with extensive documentation and a large developer community.
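To make the RAG-Fusion mention concrete: the technique issues several rewritten versions of the user's query, retrieves a ranked list for each, and merges the lists with reciprocal rank fusion (RRF). The snippet below is a minimal, library-independent sketch of that fusion step only. It is not SciPhi's API; the function name, the sample document IDs and the smoothing constant `k=60` (a commonly used default) are assumptions for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    where rank starts at 1. Higher total score means a better fused rank.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical retrieval results for three rewrites of the same question.
results_per_query = [
    ["a", "b", "c"],
    ["b", "a", "d"],
    ["b", "c", "a"],
]
fused = reciprocal_rank_fusion(results_per_query)
print(fused)
```

Documents that rank well across many query variants rise to the top, which is why the fused list tends to be more robust than the result of any single query.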

Abacus.AI

Another option is Abacus.AI, which lets developers create and run large-scale AI agents and systems. It includes ChatLLM for creating full-stack RAG systems and fine-tuning large language models (LLMs). Abacus.AI can also be used to automate complex tasks and for real-time forecasting and anomaly detection. It is designed to ease AI integration, with features like high availability, governance and compliance that make it a good fit for enterprise environments.

LangChain

LangChain is another option worth considering, especially if you need context-aware, reasoning applications. It offers tools to create, monitor and deploy LLM-based applications. LangChain supports many document formats and retrieval algorithms, so it can be applied across industries such as financial services and FinTech. With a wide set of RAG building blocks and more than 100,000 practitioners using the platform, LangChain is a good option for teams that want to increase operational efficiency and personalization.

GradientJ

If you need a full-stack option, GradientJ is a unified environment for developing and managing large language model (LLM) applications. It offers tools for ideation, development and management of LLM-native applications, along with team collaboration features for managing multiple applications. GradientJ supports a variety of use cases, including smart data extraction and safe chatbots, and makes it easier to develop and maintain complex AI applications.