Question: I need an LLM platform that supports data processing and code completion without any restrictions. What are my options?

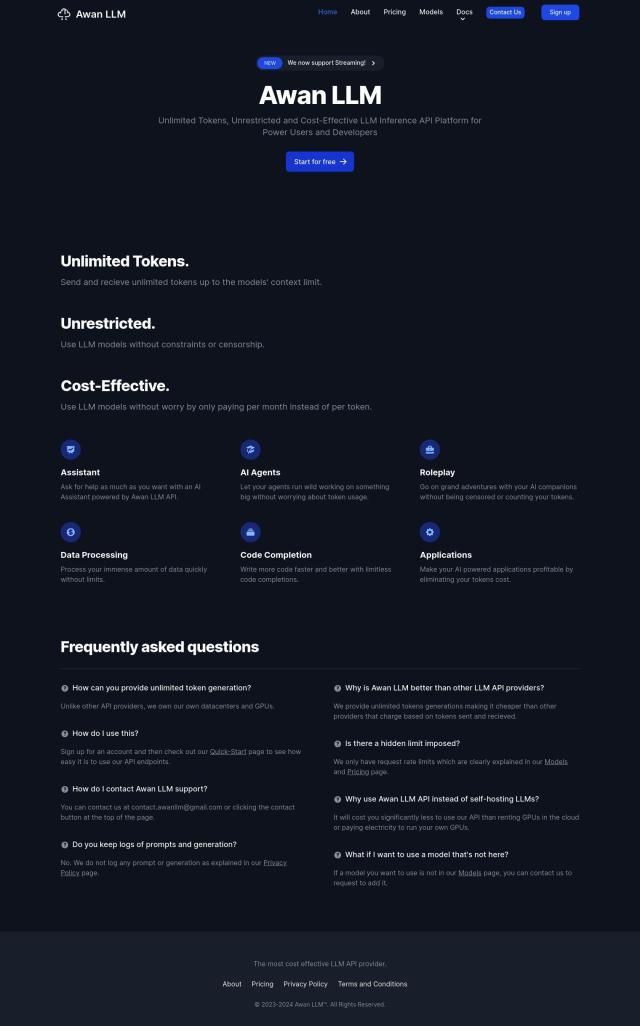

Awan LLM

Awan LLM is notable for unlimited tokens, no limits on model usage, and pay-as-you-go pricing. Its use cases include AI assistants, AI agents, roleplay, data processing and code completion. It supports a range of models, including Meta-Llama-3-8B-Instruct and Llama 3 Instruct, and can be integrated through its API endpoints. Awan LLM also prioritizes user privacy. Overall, it's a good all-purpose option.
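As a rough illustration of what API integration for code completion might look like, here is a minimal sketch that builds a chat-style request without sending it. The endpoint URL is a placeholder, and the OpenAI-style payload shape, the Bearer-token header and the helper name `build_completion_request` are all assumptions for illustration, not the provider's documented API; check the platform's own docs for the real endpoint and fields.

```python
import json
import urllib.request

# Placeholder endpoint (assumption): replace with the URL from the provider's docs.
API_URL = "https://api.example.com/v1/chat/completions"


def build_completion_request(api_key, prompt,
                             model="Meta-Llama-3-8B-Instruct", url=API_URL):
    """Build (but do not send) a chat-style code-completion request.

    The payload layout below is an assumed OpenAI-style schema.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You complete code. Reply with code only."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


# Build a request for a partial function the model should complete.
req = build_completion_request("YOUR_API_KEY", "def fibonacci(n):")
print(req.get_method(), req.full_url)
```

To actually send it, you would pass `req` to `urllib.request.urlopen` and parse the JSON response, once the URL and payload match what the provider documents.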

Zerve

Another option is Zerve, which lets you host and manage GenAI and LLMs in your own infrastructure for tighter control and faster deployment. It combines open models with serverless GPUs and your own data, and supports Python, R, SQL, Markdown and more. You can self-host on AWS, Azure or GCP, which keeps both data and infrastructure in your hands.

Lamini

For enterprise-scale needs, Lamini offers a platform to build, manage and deploy LLMs on your own data. It supports memory tuning, deployment in different environments and high-throughput inference. You can install Lamini on premises or in the cloud, and it supports AMD GPUs. That makes it a good option for managing the model lifecycle.