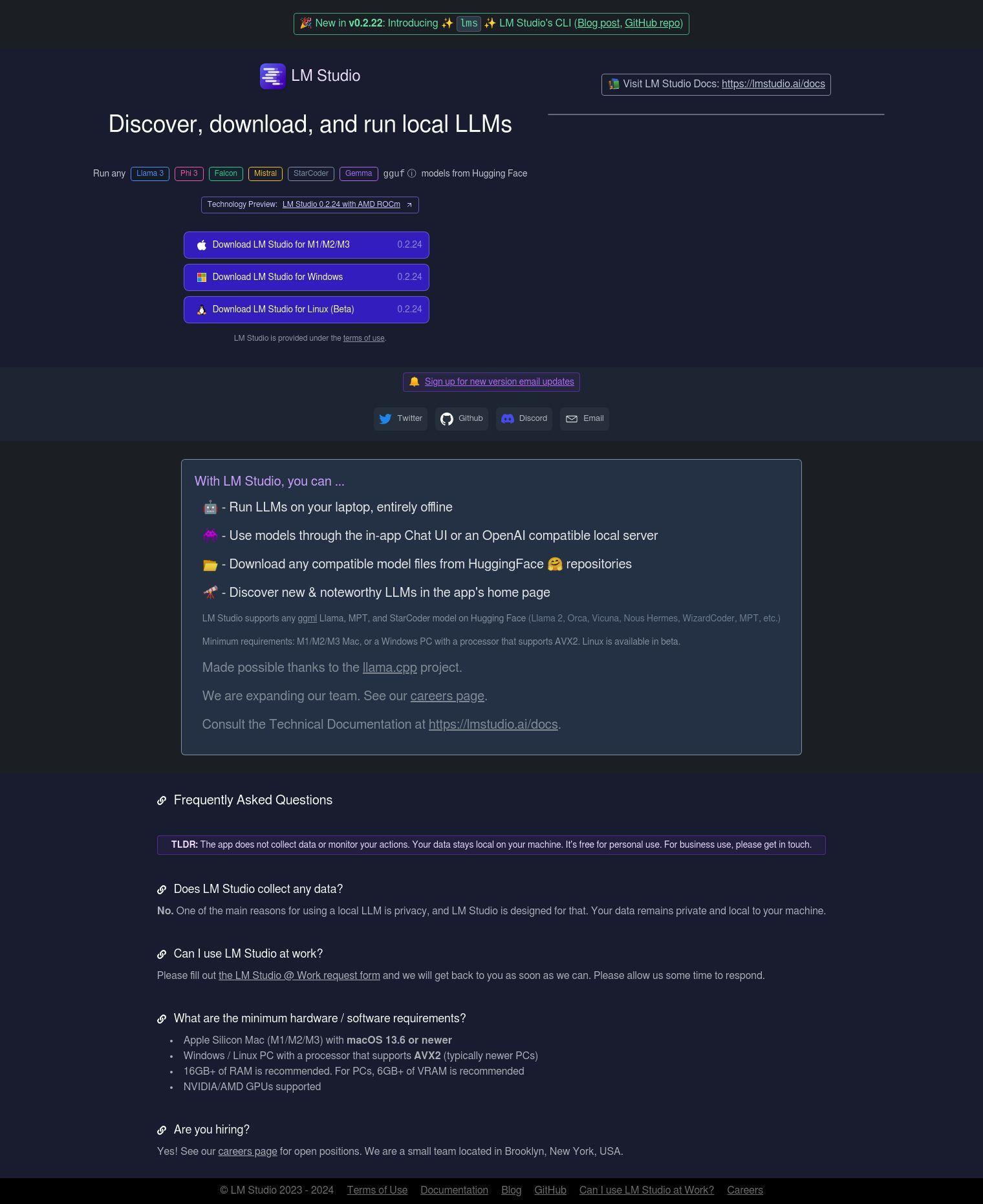

LM Studio is a desktop app for experimenting with local, open-source Large Language Models (LLMs). The cross-platform app lets you download and run any ggml-compatible model from Hugging Face through a simple yet powerful interface for model configuration and inference. It uses your GPU, when one is available, for better performance.

Some of the key features of LM Studio include:

- Run any ggml-compatible model from Hugging Face: The app supports a wide range of models, including Llama 3, Phi 3, Falcon, Mistral, and StarCoder.

- Offline usage: Run LLMs on your laptop without an internet connection.

- Model configuration and inference: A simple UI for configuring and running models.

- GPU support: Leverages your GPU for better performance.

- Discover new models: See interesting LLMs right on the app's home page.

LM Studio is free for personal use and doesn't collect any data, so you can use it without worrying about your privacy or data security. For commercial use, you can apply for access through the LM Studio @ Work request form. The app is available for Mac, Windows, and Linux (beta), and it requires a processor that supports AVX2. We recommend at least 16GB of RAM and 6GB of VRAM for best performance.

LM Studio also comes with a command-line interface tool called lms, which lets you load, unload and manage models, as well as inspect raw LLM input. lms can be used to automate and debug workflows, making it a great tool for developers.
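As a rough illustration of how lms might be scripted, here is a minimal Python sketch that wraps a few subcommands. The subcommand names (`lms ls`, `lms load`, `lms unload`, `lms log stream`) reflect the CLI as we understand it and may differ between versions, so check `lms --help` on your install. The helper names `lms_command` and `run_lms` are hypothetical, as is any model identifier you pass in.

```python
import shutil
import subprocess


def lms_command(action, model=None):
    """Build the argument list for a few lms subcommands.

    Assumption: the subcommand names match your installed lms version.
    """
    if action == "list":
        return ["lms", "ls"]  # list downloaded models
    if action == "load":
        if not model:
            raise ValueError("load requires a model identifier")
        return ["lms", "load", model]
    if action == "unload":
        # Without a model, unload everything currently loaded.
        return ["lms", "unload", model] if model else ["lms", "unload", "--all"]
    if action == "inspect":
        # Streams raw LLM input; runs until interrupted.
        return ["lms", "log", "stream"]
    raise ValueError(f"unknown action: {action}")


def run_lms(action, model=None):
    """Run the command if lms is on PATH; otherwise only report it."""
    argv = lms_command(action, model)
    if shutil.which("lms") is None:
        print("lms not found on PATH; would run:", " ".join(argv))
        return None
    return subprocess.run(argv, check=False)
```

Calling `run_lms("list")` would show the models available locally, and `run_lms("load", "<your-model-id>")` would load one, where the identifier is whatever `lms ls` reports on your machine.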

Published on June 14, 2024
