Question: I'm looking for a user-friendly interface to configure and run language models on my local machine, do you know of any solutions?

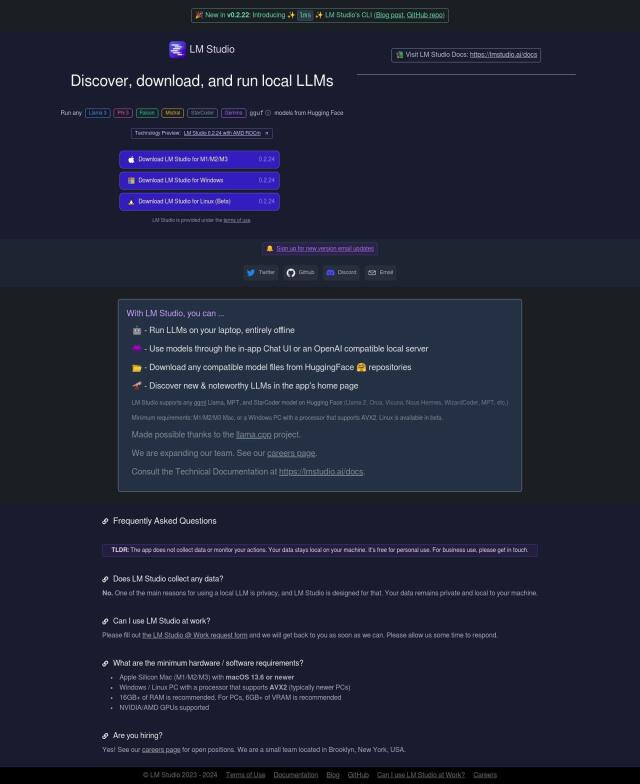

LM Studio

If you want a graphical interface to set up and run language models on your own machine, LM Studio is a good option. It's a cross-platform desktop app that can download and run many open models from Hugging Face, and it works fully offline once a model is downloaded. The graphical interface lets you configure models and run inference locally, using your GPU for acceleration when one is available. LM Studio is free for personal use and doesn't collect your data, which makes it a good choice for privacy. It's available for Mac, Windows and Linux (in beta) and includes a command-line tool (`lms`) for automating and debugging workflows.

Langfuse

Another option is Langfuse, an open-source platform for debugging, analyzing and iterating on applications built with large language models. Rather than running models itself, it helps you monitor and improve what you build on top of them. Its features include tracing, prompt management, evaluation and analytics. Langfuse integrates with several SDKs and frameworks, and it holds SOC 2 Type II and ISO 27001 certifications for security. It can be self-hosted, and it offers pricing tiers ranging from hobbyist to enterprise.

Dify

If you want to build generative AI apps, Dify offers a visual Orchestration Studio and customizable LLM agents. It includes tools for designing AI apps, building secure data pipelines and crafting prompts. Dify can also be deployed on-premise for reliability and data security, which makes it suitable for both businesses and individuals. Its pricing tiers range from a free sandbox plan to enterprise-level options.

LLMStack

Finally, LLMStack is an open-source platform that lets developers build AI apps using pre-trained language models. It includes a no-code builder for connecting LLMs to your data and business processes, and it supports vector databases for efficient data storage and retrieval. LLMStack can run in the cloud or on-premise, and it suits use cases such as building chatbots and AI assistants.