Question: Can you recommend a tool that supports a wide range of Hugging Face-compatible models for natural language processing tasks?

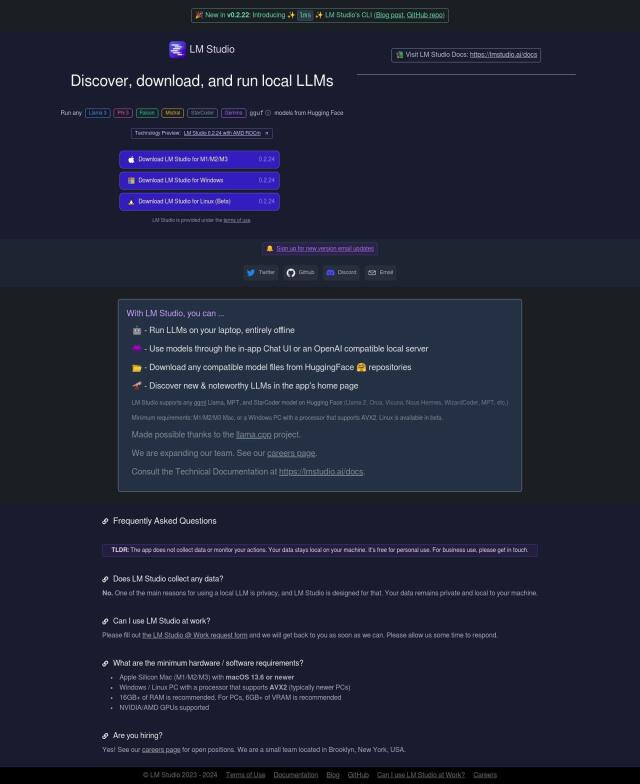

LM Studio

If you need a tool that can run a broad range of Hugging Face-compatible models for natural language processing tasks, LM Studio is a strong choice. This cross-platform desktop app lets you run local, open-source Large Language Models (LLMs) such as Llama 3, Phi 3, and Mistral through a graphical interface. It is available for Mac, Windows, and Linux and can run entirely offline, which suits a variety of NLP tasks. LM Studio also includes a command-line tool for managing and inspecting models, which makes it useful for automating workflows.

LLM Explorer

Another powerful option is LLM Explorer, which offers access to a large catalog of 35,809 open-source LLMs and Small Language Models (SLMs). You can filter and compare models by parameter count, benchmark scores, and memory usage, which makes it easier to find the right model for a particular job. The site also tracks recent additions and trends, so you can keep up with new developments in language models.
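LLM Explorer does this filtering for you, but the memory figures it compares follow a common rule of thumb: weight memory is roughly the parameter count times the bytes stored per parameter (about 2 bytes at fp16, 0.5 bytes at 4-bit). The Python sketch below applies that rule to shortlist models under a memory budget. The model names, benchmark scores, and helper functions are hypothetical, and the estimate covers the weights only, ignoring the KV cache, activations, and runtime overhead.

```python
from dataclasses import dataclass

# Approximate bytes per parameter for common weight precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


@dataclass
class ModelEntry:
    name: str              # hypothetical model label
    params_billion: float  # parameter count, in billions
    benchmark: float       # hypothetical benchmark score (higher is better)


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Rough weight-only memory in GB: billions of params x bytes per param."""
    return params_billion * BYTES_PER_PARAM[precision]


def shortlist(models, budget_gb: float, precision: str = "fp16"):
    """Keep models whose weights fit the budget, best benchmark score first."""
    fits = [m for m in models
            if weight_memory_gb(m.params_billion, precision) <= budget_gb]
    return sorted(fits, key=lambda m: m.benchmark, reverse=True)


# Hypothetical catalog entries, for illustration only.
candidates = [
    ModelEntry("model-a-7b", 7, 60.0),
    ModelEntry("model-b-13b", 13, 65.0),
    ModelEntry("model-c-3b", 3, 52.0),
]

if __name__ == "__main__":
    # At fp16 the 13B model needs ~26 GB, so it drops out of a 16 GB budget.
    print([m.name for m in shortlist(candidates, budget_gb=16)])
    # At 4-bit it needs ~6.5 GB and becomes the top pick.
    print([m.name for m in shortlist(candidates, budget_gb=16, precision="int4")])
```

Quantization is what makes the difference here: the same 16 GB budget admits a larger, higher-scoring model once each weight takes fewer bytes.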

LLMStack

If you want to build an AI app, LLMStack is a solid open-source foundation. It can combine a variety of data files with pre-trained language models from providers like OpenAI and Hugging Face. Its no-code builder lets users assemble complex AI apps without writing any code. LLMStack also supports vector databases and multi-tenancy, so it suits everything from chatbots to workflow automation.

Predibase

Finally, Predibase suits developers who need to fine-tune and serve LLMs. It supports a range of models, including Llama-2 and Mistral, and offers relatively inexpensive serving infrastructure, with free serverless inference for light use. Predibase also provides enterprise-grade security and dedicated deployments, making it a good fit for production use.