Question: Is there a free, privacy-focused app that leverages GPU power to run language models for personal projects and research?

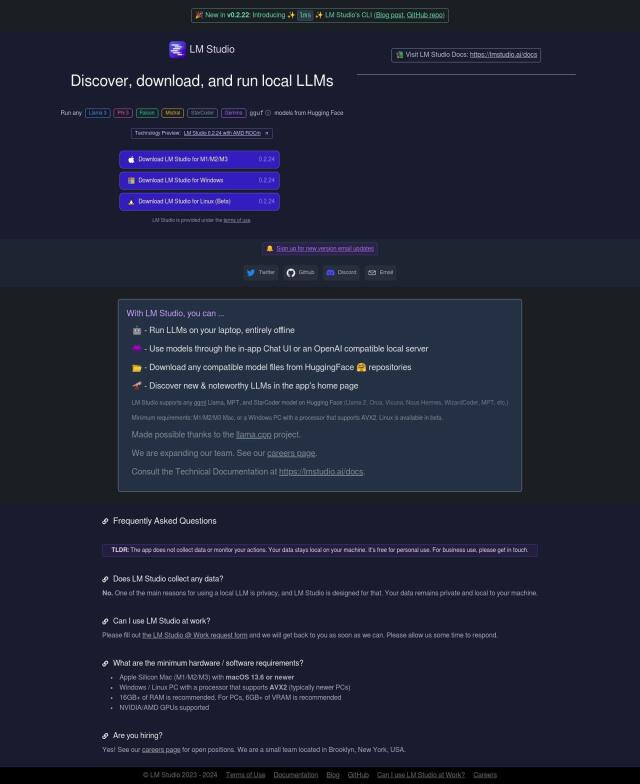

LM Studio

For a free, privacy-focused app that uses your GPU to run language models, LM Studio is a strong option. This cross-platform desktop app lets you run open-source large language models (LLMs) locally through a graphical interface, using your GPU to speed up inference. It supports a range of models, including Llama 3 and Phi 3, and it runs fully offline, so your prompts and data never leave your machine. LM Studio is free for personal use and includes a command-line tool for automating and debugging workflows, which makes it a good fit for research and personal projects.
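
Here is a minimal sketch of that kind of automation. It assumes LM Studio's local server is running (you can start it from the app or with `lms server start`) and serves its OpenAI-compatible API on the default port 1234. With those assumptions, a Python script can send a prompt to a loaded model using only the standard library. The model name used in the usage note is a hypothetical placeholder.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running and listening on its
# default port (1234), exposing an OpenAI-compatible API under /v1.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,  # identifier of a model loaded in LM Studio (placeholder)
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str) -> str:
    """Send the prompt to the local server and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Once a model is loaded, a call such as `ask_local_model("Summarize this abstract in two sentences.", "llama-3-8b-instruct")` returns the reply as a string. Because the request goes to `localhost`, the prompt stays on your own machine.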

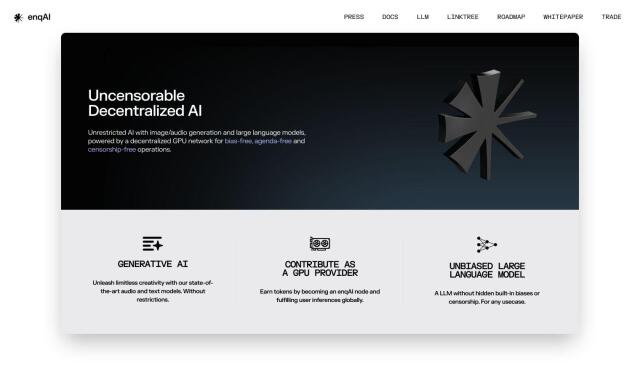

enqAI

Another option is enqAI, a decentralized AI platform that runs on a distributed network of GPUs and markets itself as bias-free, agenda-free and censorship-free. It offers unrestricted generative AI capabilities and comes with its own proprietary large language model, Eridu. Users can also contribute their GPUs by running an enqAI node and earn tokens in return. That makes it appealing if you want to put your hardware to work on the network while also using its services.

Private LLM

If you use Apple devices, including iPhone or iPad, Private LLM is a local AI chatbot for iOS and macOS. It supports a variety of open-source LLMs and works offline for full privacy. The app offers customizable system prompts, Siri integration, and advanced quantization for better model performance. Unlike LM Studio, it isn't free. It's sold as a one-time purchase, aimed at individuals and families who want a private AI assistant without a subscription or a network connection.