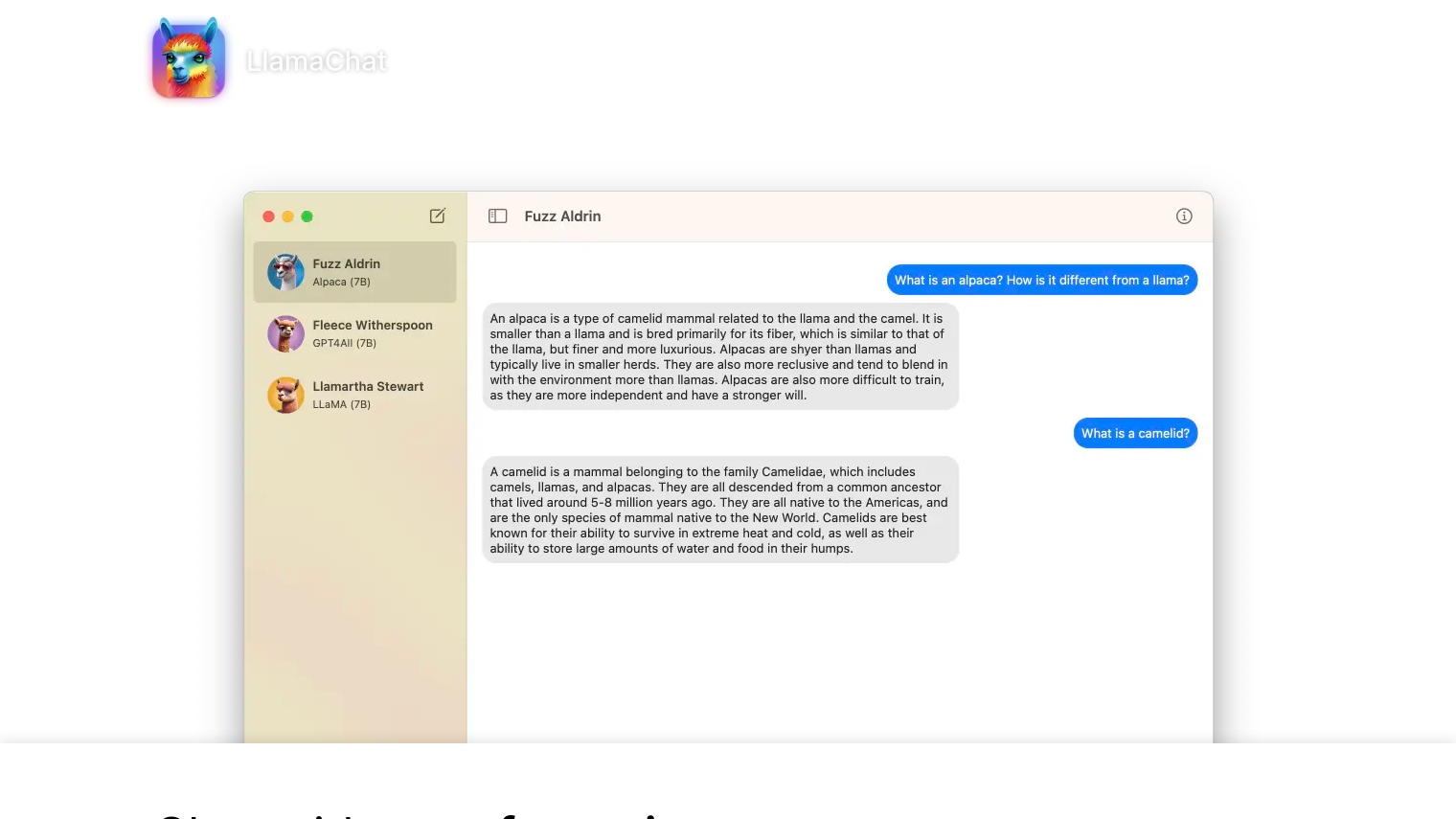

LlamaChat lets you chat with a variety of LLaMA-based AI models, including Alpaca, GPT4All and LLaMA itself, right on your Mac. It gives you a native chat interface for running these models locally instead of through a hosted service.

Among LlamaChat's features are:

- Model Support: Alpaca, GPT4All and LLaMA are supported, with Vicuna and other models to come.

- Easy Model Conversion: You can import raw PyTorch model checkpoints or pre-converted .ggml files.

- Open-Source: The app itself is free and open-source, and it's built on open-source libraries such as llama.cpp and llama.swift.

LlamaChat is aimed at people who want a simple, conversational way to use these models. It's a good fit if you want to try out different LLaMA-based models without setting up a lot of technical plumbing.

LlamaChat doesn't come with any prepackaged model files. You have to find and install the models yourself, and you must follow the terms and conditions set by each model's authors.

LlamaChat requires macOS 13 or later, so it won't run on older versions of macOS. LlamaChat isn't affiliated with Meta Platforms, Inc., Leland Stanford Junior University or Nomic AI, Inc., but it does offer a way to explore AI models those organizations have developed.

Check the LlamaChat site for details on getting started with the tool.

Published on June 11, 2024