Question: I'm looking for a way to import and use PyTorch model checkpoints on my Mac - do you know of any tools?

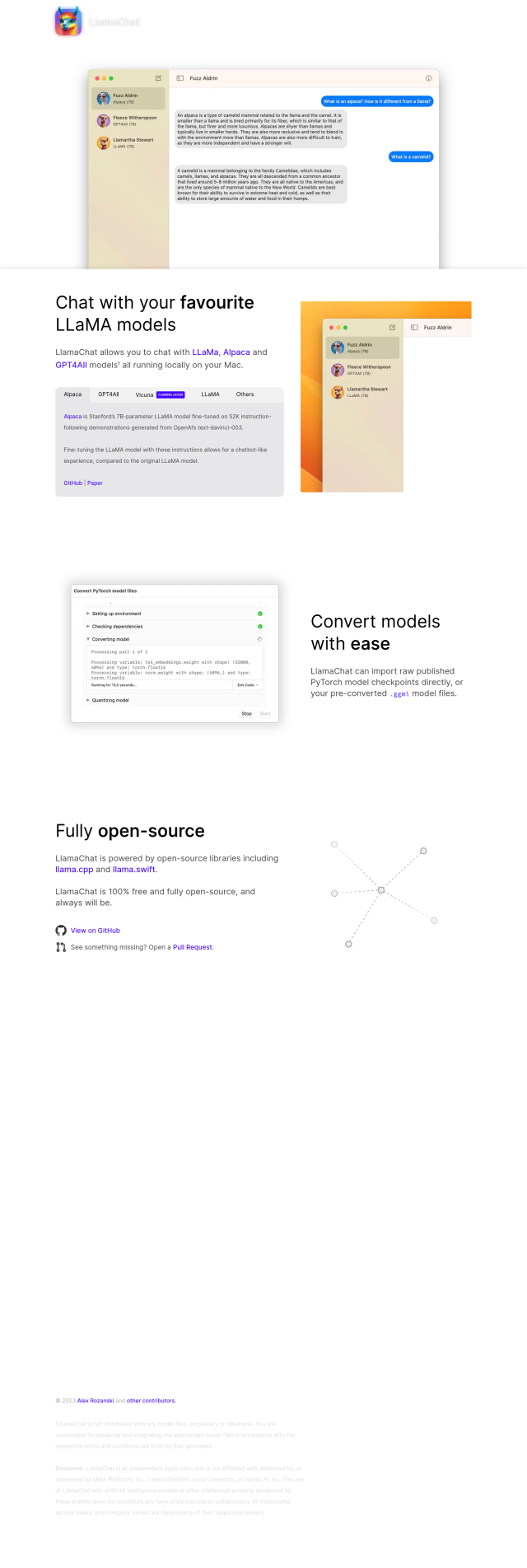

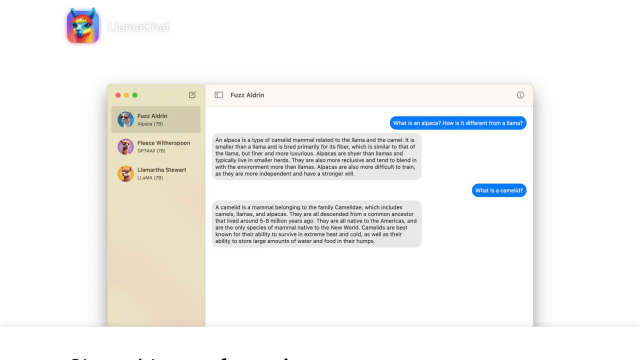

LlamaChat

If you want to import and use PyTorch model checkpoints on your Mac, LlamaChat is a good fit. It is a free, open-source Mac app for chatting with LLaMA-family models, including Alpaca, GPT4All, and Vicuna. You can import either raw PyTorch model checkpoints or pre-converted .ggml model files, so adding a model to the app takes little effort. LlamaChat runs on macOS 13 and keeps everything local, so you can switch between several LLaMA models on your own machine.
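Before importing a file, it can help to know which of the two formats you actually have. The snippet below is a minimal sketch that is not part of LlamaChat: the function name `classify_model_file` is hypothetical, and the check rests on two assumptions, namely that older llama.cpp-style GGML files start with one of the little-endian magic numbers `ggml`, `ggmf`, or `ggjt`, and that recent `torch.save` checkpoints are ordinary zip archives. A zip result therefore only suggests a PyTorch checkpoint; it does not prove it.

```python
import struct
import zipfile
from pathlib import Path

# Assumed magic numbers for older llama.cpp-style GGML files,
# stored in the file as a little-endian unsigned 32-bit integer.
GGML_MAGICS = {
    0x67676D6C: "ggml",
    0x67676D66: "ggmf",
    0x67676A74: "ggjt",
}


def classify_model_file(path):
    """Guess the container format of a model file from its first bytes.

    Returns "ggml (<variant>)", "zip (possibly a PyTorch checkpoint)",
    or "unknown". This is a heuristic, not a validation of the model.
    """
    path = Path(path)
    with path.open("rb") as f:
        header = f.read(4)

    if len(header) == 4:
        (magic,) = struct.unpack("<I", header)
        if magic in GGML_MAGICS:
            return f"ggml ({GGML_MAGICS[magic]})"

    # torch.save has written zip archives by default since PyTorch 1.6.
    if zipfile.is_zipfile(path):
        return "zip (possibly a PyTorch checkpoint)"

    return "unknown"
```

You could call it as `classify_model_file("consolidated.00.pth")` (the filename here is only an example) and pick the matching import option in the app based on the result.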

Airtrain AI

Another option is Airtrain AI. It is aimed mainly at data teams running large data pipelines, but it also offers an LLM Playground where you can experiment with more than 27 open-source and proprietary models. You can fine-tune these models with the platform's tools, which makes it a reasonable choice if you want to evaluate and deploy custom AI models. Airtrain AI has several pricing tiers, including a free starter plan, so it can fit a range of budgets.

GradientJ

For a broader platform, GradientJ may be worth exploring. It is designed to help LLM teams ideate, build, and manage LLM-native applications. GradientJ supports a wide range of use cases, including smart data extraction and chatbots, and provides an app-building canvas and team collaboration features. It takes more setup and covers more ground than the other two options, but it can be a strong choice if you want to develop sophisticated AI applications with multiple models and integrations.