Question: How can I run machine learning models on edge devices, web browsers, or mobile devices with minimal latency and high performance?

ONNX Runtime

For running machine learning models on edge devices, web browsers or mobile devices with low latency and high performance, ONNX Runtime is a great option. It can run inference on Windows, Linux, Mac, iOS, Android and web browsers, with hardware acceleration on CPU, GPU, NPU and more. Its modular design and broad hardware support make it adaptable, and it has multiple language APIs for easy integration. ONNX Runtime also supports generative AI and on-device training for better user privacy and customization, which is why it's widely used for many machine learning tasks.

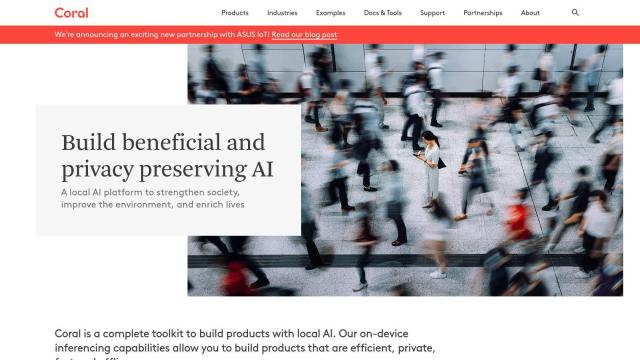

Coral

Another option is Coral, a local AI platform designed for fast, private and efficient AI across many industries. It can perform on-device inferencing with low power and high performance, and supports popular frameworks like TensorFlow Lite. Coral's products include development boards, accelerators and system-on-modules, so it can be used in a variety of applications like object detection, pose estimation and image segmentation. By running AI processing on the device, Coral can help break free of data privacy and latency issues.

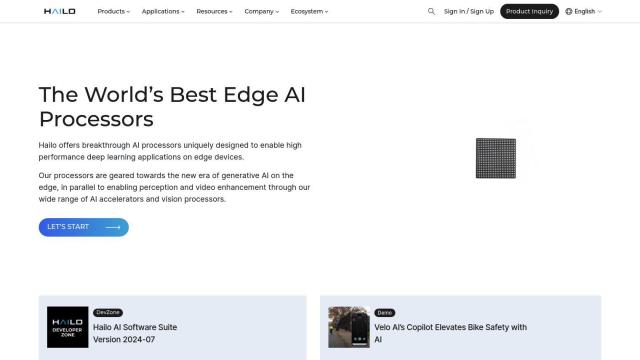

Hailo

For high-performance AI, Hailo offers custom processors for edge devices. Its product line includes AI Vision Processors and AI Accelerators that support deep learning workloads across automotive, retail and industrial automation industries. The products offer low latency and high accuracy, making them suitable for applications that need to process data efficiently and securely.

ZETIC.ai

Last, ZETIC.ai offers an on-device AI software that can run on mobile devices at a cost-effective price without requiring expensive GPU cloud servers. It's optimized for NPU-based hardware and supports a variety of operating systems and processors. With stronger user data privacy and lower maintenance costs, ZETIC.ai is a practical option for businesses looking to adopt AI without major infrastructure investments.