Question: Can you recommend a platform that allows me to use and customize large language models like Llama and Mistral on my local machine?

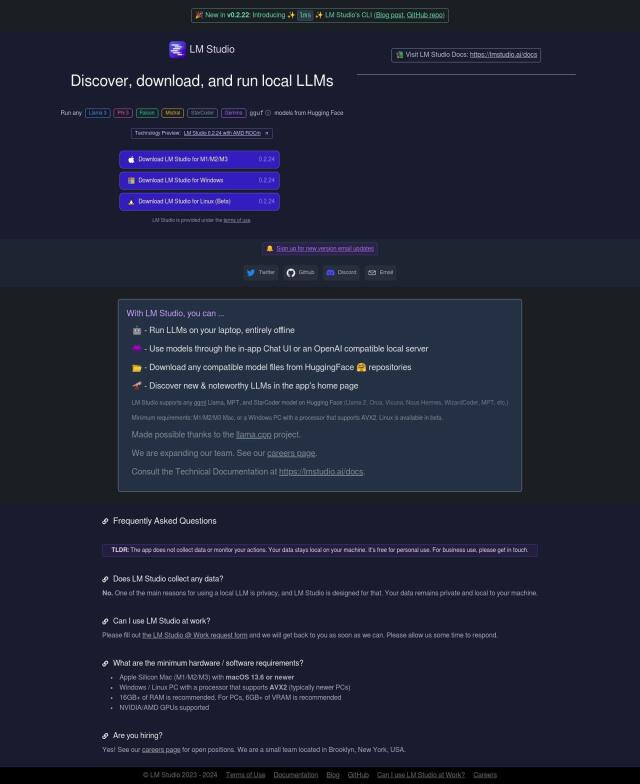

LM Studio

If you want to run and experiment with large language models like Llama and Mistral on your own machine, LM Studio is a good option. It's a cross-platform desktop app that runs local, open-source LLMs through a graphical interface. It supports several model families, including Llama 3, Phi 3, Falcon, Mistral and StarCoder. The app works offline and has a simple interface for configuring models and running inference. It also comes with a command-line tool for managing and inspecting models, which is useful for automating and debugging workflows.

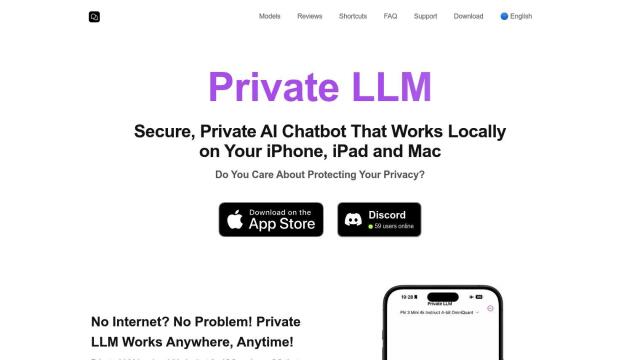

Private LLM

Another good option is Private LLM, a local AI chatbot for iOS and macOS. It supports a range of open-source LLMs, including Llama 3 and Mixtral 8x7B, that you can download to your device. The app runs privately and offline, and offers customizable system prompts, integration with Siri and Shortcuts, and AI services like grammar correction and text summarization. It also continues to add support for new models and features.

AnythingLLM

If you want to use LLMs for business intelligence, AnythingLLM is a powerful option. It lets businesses process and analyze large volumes of documents with the LLM of their choice, including proprietary models like GPT-4 and open-source models like Llama and Mistral. The platform supports multi-user access, 100% privacy-preserving data handling, and full control over which LLMs are used. It can run fully offline when paired with a local model, handles a wide variety of document types, and offers a customizable developer API.

Ollama

Finally, Ollama is an open-source tool that lets you run large language models like Llama 3, Phi 3 and Mistral on macOS, Linux and Windows. It offers model customization, cross-platform support, GPU acceleration and libraries for integrating with Python and JavaScript. Ollama is aimed at developers, researchers and AI enthusiasts who want to experiment with large language models locally.
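
To give a feel for what integrating with Ollama from Python can look like, here is a minimal sketch that talks to a locally running Ollama server over its REST API using only the standard library. It assumes the server is listening on its default address (`http://localhost:11434`) and that a model such as `llama3` has already been pulled; the helper names `build_request` and `generate` are made up for this illustration and are not part of Ollama itself.

```python
import json
import urllib.request

# Assumption: default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request.

    Hypothetical helper for this example, not an Ollama function.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with a single JSON object
    # whose "response" field holds the full completion.
    return body["response"]
```

With the server running and the model downloaded (for example via `ollama pull llama3`), calling `generate("llama3", "Explain what a tokenizer does in one sentence.")` should return the model's reply as a string. Ollama's official Python library wraps the same server and is probably the more convenient choice for larger projects.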