Question: I'm looking for a tool that allows me to experiment with large language models in a flexible and interactive environment.

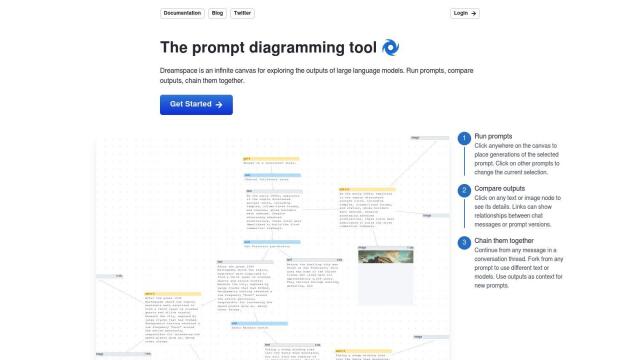

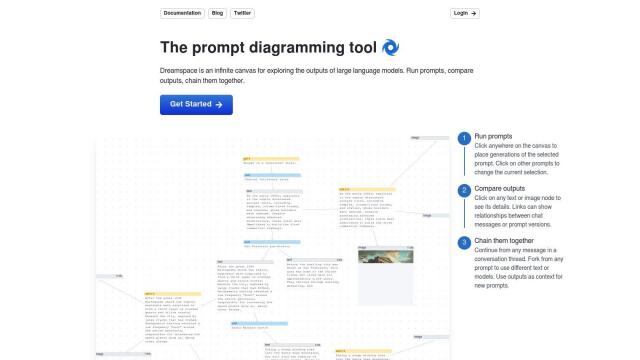

Dreamspace

For interactive, experimental work with large language models, Dreamspace is a top contender. It's a canvas where you can run prompts, compare the results and chain them together. You can add to and interact with successive generations of a prompt, pick up a conversation from any message, and vary the text or the model to create new prompts. Work is organized into "spaces" for multimodal projects, and you can switch among different AI models within a space.

Parea

Another good option is Parea, which is geared toward AI teams that want to run experiments on large language models and have humans annotate the results. It has an experiment tracker, observability tools and a prompt playground for trying different prompts on large datasets. Parea integrates with common LLM providers and offers lightweight Python and JavaScript SDKs for easy integration into your workflow. It suits teams that want to debug failures, monitor performance and gather user feedback on how well their models are working.

Langfuse

If you prefer open-source options, Langfuse is a full-featured platform for debugging, analyzing and iterating on LLM applications. Its features include prompt versioning, score calculation for completions and full context capture of LLM executions. Langfuse integrates with a range of SDKs and AI models, and its tiered pricing makes it suitable for both hobbyists and enterprise teams.

Airtrain AI

Last, you could check out Airtrain AI, a no-code compute platform with an LLM Playground for trying out more than 27 open-source and proprietary models. It also has tools for fine-tuning models, AI Scoring for evaluating them, and a community support system. The idea is to make large language models accessible and affordable, so you can quickly try out, fine-tune and deploy custom AI models for your needs.