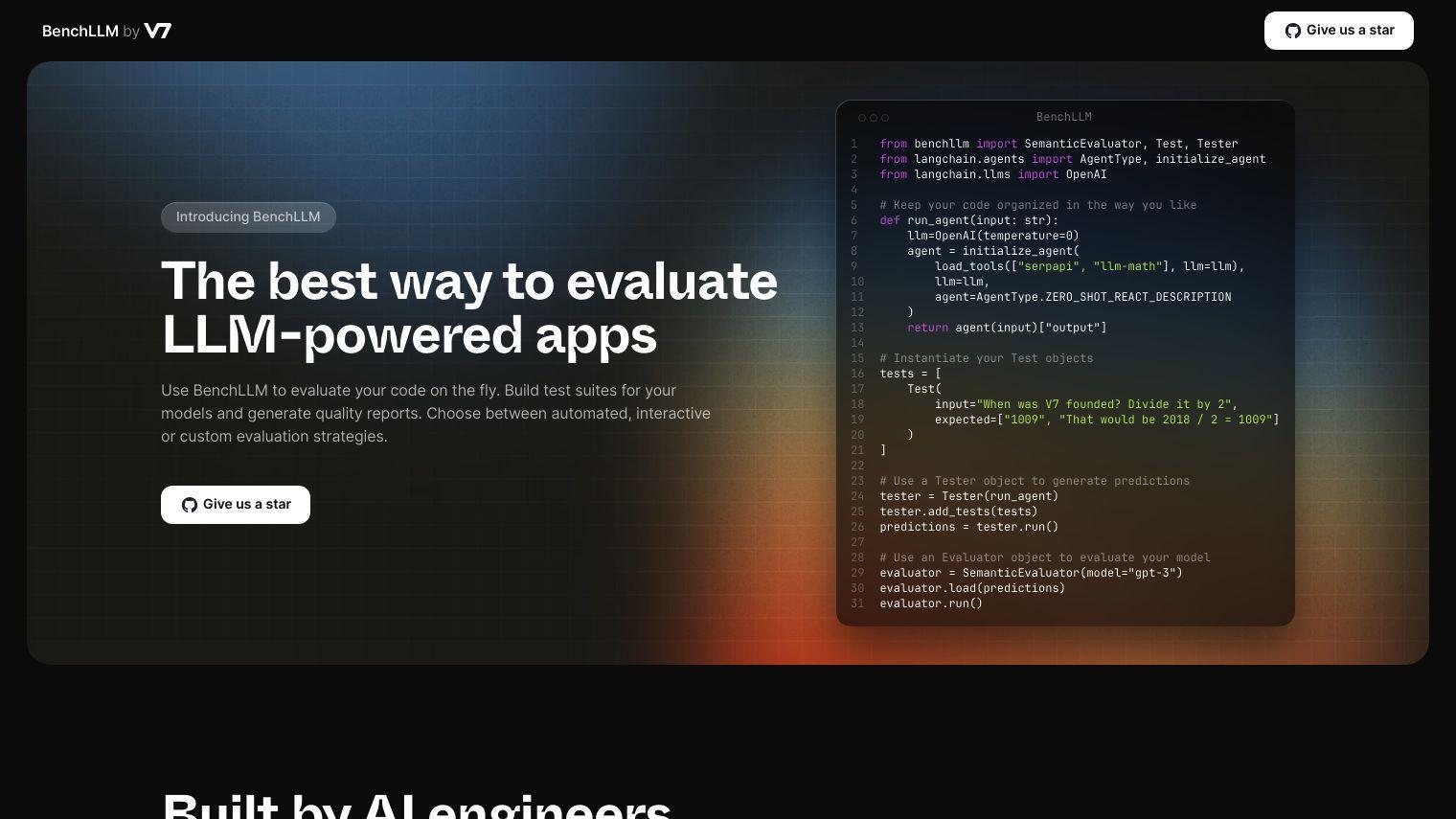

BenchLLM lets developers test LLM-powered apps on the fly by building test suites for their models and generating quality reports. It supports automated, interactive, or custom evaluation methods, and it is designed to keep test code organized while leaving the choice of evaluation strategy open.

Among its features:

- Evaluation Flexibility: Supports automated, interactive, or custom evaluation strategies.

- Test Suite Management: Build and version test suites intuitively using JSON or YAML.

- API Integration: Works with OpenAI, LangChain, and other APIs.

- Visualization: Generates insightful evaluation reports for easy sharing.

- Automation: Automates evaluations for seamless integration into CI/CD pipelines.

- Model Performance Monitoring: Detects performance regressions in production.
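
To make the test-suite and automated-evaluation ideas above concrete, here is a minimal sketch in plain Python. It does not use BenchLLM's own API: the `TestCase` class, the `load_suite`, `evaluate`, and `summarize` helpers, the `toy_model` stand-in, and the JSON field names `input` and `expected` are all hypothetical, chosen only to illustrate how a JSON-defined suite can be run against a model and reduced to a pass/fail report.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TestCase:
    input: str
    expected: List[str]  # any one of these answers counts as a pass


def load_suite(text: str) -> List[TestCase]:
    """Parse a JSON array of {"input": ..., "expected": [...]} objects."""
    return [
        TestCase(case["input"], [str(e) for e in case["expected"]])
        for case in json.loads(text)
    ]


def evaluate(model: Callable[[str], str], suite: List[TestCase]) -> List[Dict]:
    """Run each case through the model; pass means an exact string match."""
    results = []
    for case in suite:
        output = str(model(case.input)).strip()
        results.append(
            {"input": case.input, "output": output, "passed": output in case.expected}
        )
    return results


def summarize(results: List[Dict]) -> Dict[str, int]:
    """Reduce per-case results to the counts a report would show."""
    passed = sum(1 for r in results if r["passed"])
    return {"total": len(results), "passed": passed, "failed": len(results) - passed}


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    return {"What is 1+1?": "2"}.get(prompt, "unknown")


# Hypothetical suite; in practice this would live in a versioned JSON file.
SUITE_JSON = """[
  {"input": "What is 1+1?", "expected": ["2", "2.0"]},
  {"input": "Capital of France?", "expected": ["Paris"]}
]"""

report = summarize(evaluate(toy_model, load_suite(SUITE_JSON)))
print(report)
```

In a CI/CD pipeline, a script like this could exit with a non-zero status whenever `report["failed"]` is greater than zero, so a regression fails the build. Exact string matching is the simplest possible evaluator; an interactive or custom strategy would replace the comparison inside `evaluate`.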

BenchLLM gives developers a more structured way to test and evaluate LLM-based projects. It is aimed at people building AI apps where performance is critical and must stay consistent.

BenchLLM pricing isn't disclosed, so interested developers should check the project website.

Published on June 14, 2024

Related Questions

- Can you recommend a tool that helps me test and evaluate my language model-based app's performance?

- I need a solution that automates testing and reporting for my AI-powered project, do you know of any?

- How can I ensure my LLM model is performing consistently in production, is there a tool for that?

- I'm looking for a way to create and manage test suites for my language model, can you suggest a platform?
