Question: How can I ensure my AI application doesn't expose sensitive data? Is there a tool that can help?

ClearGPT

If you want to build an AI application that doesn't leak sensitive information, ClearGPT provides a secure, enterprise-grade foundation for internal use. It builds on generative AI and Large Language Models (LLMs) like ChatGPT but is designed to meet enterprise security, performance, cost and data governance requirements. With role-based access, data governance controls and continuously refreshed data, ClearGPT aims to prevent data and IP leakage while integrating with your existing applications.

LangWatch

Another useful tool is LangWatch. It provides guardrails for the quality and safety of generative AI solutions, reducing risks such as jailbreaking, sensitive data leakage and hallucinations, and it reports real-time metrics on conversion rates and output quality. It suits developers and product managers who want their AI applications to perform well and stay safe.
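To make the idea of a sensitive-data guardrail concrete, here is a minimal stand-alone sketch of an output filter that redacts likely sensitive values before a model's response reaches the user. It does not use LangWatch's API. The names `PII_PATTERNS`, `redact` and `guarded_output` are hypothetical, and the regexes are simplified assumptions; a real guardrail product uses far more robust detection.

```python
import re

# Hypothetical, simplified patterns for a few kinds of sensitive data.
# These are illustrative only and will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace each match with a [LABEL] placeholder and list the labels found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


def guarded_output(model_output: str) -> str:
    """Hypothetical output guardrail: redact sensitive data in a model response."""
    cleaned, found = redact(model_output)
    if found:
        # In a real system this would go to your monitoring or alerting pipeline.
        print(f"guardrail triggered: {', '.join(found)}")
    return cleaned


if __name__ == "__main__":
    reply = "Contact jane.doe@example.com, card 4111 1111 1111 1111, SSN 123-45-6789."
    print(guarded_output(reply))
```

The same `redact` step can also run on user input before it is sent to the model, so that sensitive values never leave your application in the first place.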

Beagle Security

For a broader security approach, Beagle Security offers AI-powered penetration testing for web applications, APIs and GraphQL endpoints. It includes dynamic application security testing (DAST), API security testing and compliance reporting. You can run scheduled or one-off tests, and it supports role-based access controls and integration with Jira and Azure Boards. Its detailed reports and remediation guidance help you stay on top of application security vulnerabilities.

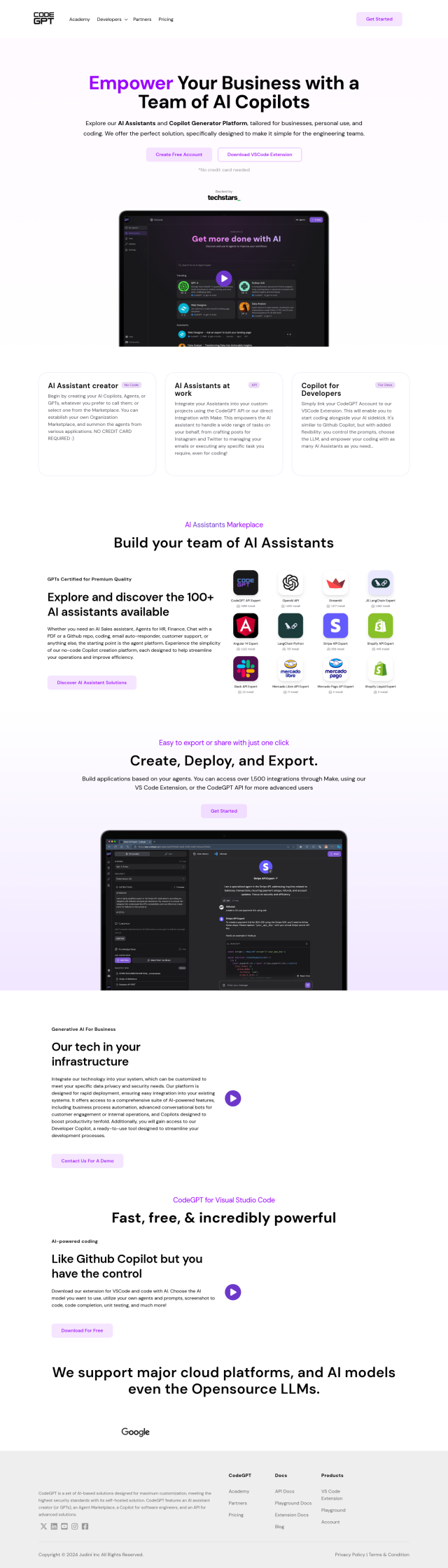

CodeGPT

If you need a coding assistant that puts data security first, CodeGPT is worth a look. It helps developers write code more efficiently, and its self-hosted options keep your data private. It offers features like code completion, unit testing and screenshot-to-code, integrates with IDEs like VSCode and gives access to more than 100 certified AI technical assistants. That makes it a good option for businesses and developers who want to speed up coding without sacrificing data security and privacy.