Question: Is there a solution that allows me to run large language models and computer vision models in a private environment?

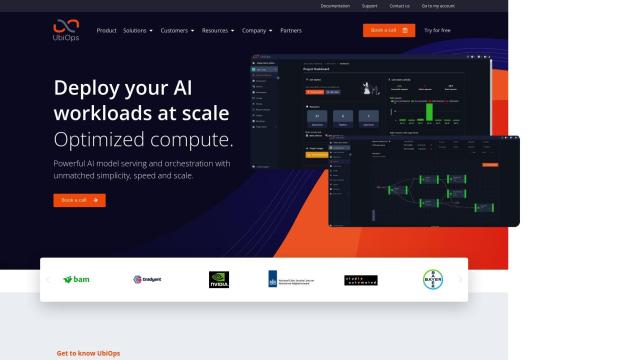

UbiOps

UbiOps is an AI infrastructure platform that simplifies deploying AI and machine learning workloads. It provides a private, secure, and scalable environment, so you can run models on-premises or in a private cloud. UbiOps integrates with popular frameworks such as PyTorch and TensorFlow, which makes it usable with or without MLOps experience. It also includes version control and pipelines, and offers both a free trial and a free plan.

Lamini

Another excellent option is Lamini. This platform is designed for enterprise teams that want to build, manage, and deploy their own large language models (LLMs) on their own data. Lamini can be installed on-premises or in the cloud, supports air-gapped deployments, and offers high-throughput inference. Its features include memory tuning and guaranteed JSON output, and it has a free tier with a limited number of inference requests.

Numenta

For those who prefer CPUs to GPUs, Numenta offers a platform that runs large AI models efficiently on CPUs. Its NuPIC system supports real-time performance optimization and multi-tenancy. Numenta is well suited to industries such as gaming and customer support, providing high performance and scalability without expensive GPUs. It also supports fine-tuning generative and non-generative LLMs on CPUs, which helps keep your data private and under your control.