Question: I'm looking for a tool that helps reduce the cost of LLM API calls without sacrificing performance.

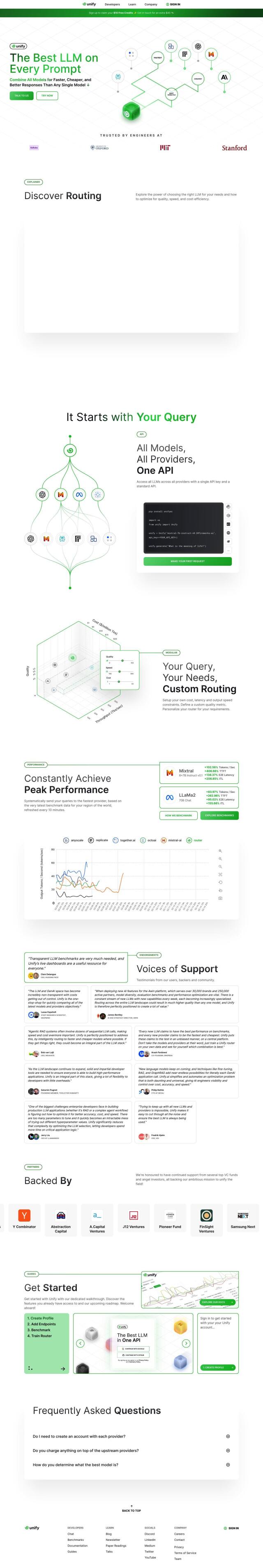

Unify

For cutting the cost of LLM API calls without sacrificing performance, Unify is a strong option. It provides a dynamic routing service that optimizes LLM applications by sending each prompt to the best available endpoint across multiple providers, all through a single API key. Provider selection is driven by live benchmarks that are updated every 10 minutes. Unify uses a credits model in which you pay only what the endpoint providers charge, so you get the benefits of routing without paying extra for it.
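To make the routing idea concrete, here is a minimal Python sketch of benchmark-driven endpoint selection. It is not Unify's API or algorithm: the `EndpointStats` fields, the `pick_endpoint` function, the endpoint names, and all numbers are hypothetical placeholders. The sketch keeps only endpoints that meet a quality floor and an optional price cap, then picks the fastest of those.

```python
from dataclasses import dataclass


@dataclass
class EndpointStats:
    """Latest benchmark numbers for one provider endpoint (hypothetical fields)."""
    name: str
    usd_per_million_tokens: float  # placeholder blended price
    tokens_per_second: float       # measured output speed
    quality_score: float           # 0..1, from a hypothetical eval


def pick_endpoint(stats, min_quality, max_usd_per_million=None):
    """Return the fastest endpoint that meets the quality floor and price cap."""
    eligible = [
        s for s in stats
        if s.quality_score >= min_quality
        and (max_usd_per_million is None
             or s.usd_per_million_tokens <= max_usd_per_million)
    ]
    if not eligible:
        raise ValueError("no endpoint satisfies the constraints")
    # Prefer higher speed; on a tie, prefer the lower price.
    return max(eligible,
               key=lambda s: (s.tokens_per_second, -s.usd_per_million_tokens))


# Made-up benchmark snapshot; a real router would refresh this periodically.
latest = [
    EndpointStats("provider-a/model-x", 0.60, 90.0, 0.82),
    EndpointStats("provider-b/model-x", 0.45, 140.0, 0.82),
    EndpointStats("provider-c/model-y", 0.20, 200.0, 0.70),
]

choice = pick_endpoint(latest, min_quality=0.8)
print(choice.name)  # provider-b/model-x
```

Because the decision depends only on the latest snapshot, re-running `pick_endpoint` after each benchmark refresh is enough to shift traffic toward whichever endpoint is currently best under these assumed criteria.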

Freeplay

Another tool worth mentioning is Freeplay, an end-to-end lifecycle management platform for LLM product development. It streamlines development with prompt management, automated batch testing, and AI auto-evaluations. These features let teams prototype faster, test changes with confidence, and optimize their products, which can lower development costs and increase development velocity. This makes Freeplay a good fit for enterprise teams.
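In general terms, automated batch testing means running a fixed set of test inputs through a prompt and scoring every output the same way, so prompt changes can be compared by a number rather than by spot checks. The following is a generic Python illustration, not Freeplay's SDK: `fake_model`, `run_batch`, and the test cases are hypothetical, and a simple substring check stands in for an AI auto-evaluation.

```python
from typing import Callable

# Hypothetical test cases: an input and a substring the answer must contain.
TEST_CASES = [
    {"input": "2 + 2", "expect": "4"},
    {"input": "capital of France", "expect": "Paris"},
    {"input": "opposite of hot", "expect": "cold"},
]


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the example runs offline."""
    canned = {"2 + 2": "The answer is 4.", "capital of France": "Paris."}
    for key, answer in canned.items():
        if key in prompt:
            return answer
    return "I am not sure."


def run_batch(template: str, model: Callable[[str], str], cases) -> float:
    """Render each case into the template, call the model, return the pass rate."""
    passed = 0
    for case in cases:
        output = model(template.format(question=case["input"]))
        # Simple rule-based check in place of an AI evaluator.
        if case["expect"].lower() in output.lower():
            passed += 1
    return passed / len(cases)


rate = run_batch("Answer briefly: {question}", fake_model, TEST_CASES)
print(f"pass rate: {rate:.2f}")  # pass rate: 0.67
```

Comparing pass rates for two templates over the same cases is the basic loop such platforms automate; a real setup would replace `fake_model` with an actual API call and the substring check with a stronger evaluator.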

Predibase

Predibase offers a cost-effective way to fine-tune and serve LLMs. It supports a broad range of models and provides free serverless inference for up to 1 million tokens per day. For paid usage, Predibase charges on a pay-as-you-go basis that depends on model size and dataset, which makes it a good option for developers who want flexibility while keeping costs down.
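To see how a daily free allowance affects a bill, here is a small Python sketch of the arithmetic. It assumes the 1 million free tokens reset each day with no carryover and that usage beyond the allowance is billed at a flat per-token rate; both are simplifying assumptions, and the rate and usage figures are placeholders, since actual pricing varies with model size. `billable_tokens` and `estimated_cost` are hypothetical helpers.

```python
# Free serverless allowance per day, as described above.
FREE_TOKENS_PER_DAY = 1_000_000


def billable_tokens(daily_usage):
    """Tokens beyond the free daily allowance, summed over all days (assumes no carryover)."""
    return sum(max(0, used - FREE_TOKENS_PER_DAY) for used in daily_usage)


def estimated_cost(daily_usage, usd_per_million):
    """Pay-as-you-go cost at a hypothetical flat per-million-token rate."""
    return billable_tokens(daily_usage) / 1_000_000 * usd_per_million


# One placeholder week of daily token usage.
week = [400_000, 1_200_000, 900_000, 2_500_000, 1_000_000, 0, 300_000]
print(billable_tokens(week))                  # 1700000
print(f"${estimated_cost(week, 0.50):.2f}")   # $0.85
```

In this example only two days exceed the allowance, so most of the week's 6.3 million tokens cost nothing.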

AIML API

Finally, AIML API gives you access to more than 100 AI models through a single API, without the hassle of setting up and maintaining servers. Its pricing is based on token usage, which keeps costs predictable. It offers fast, cost-effective access to this wide range of models and is designed for high scalability and reliability, which makes it well-suited to production machine learning projects.