Question: Can you suggest an open-source solution for monitoring and optimizing the performance of large language models?

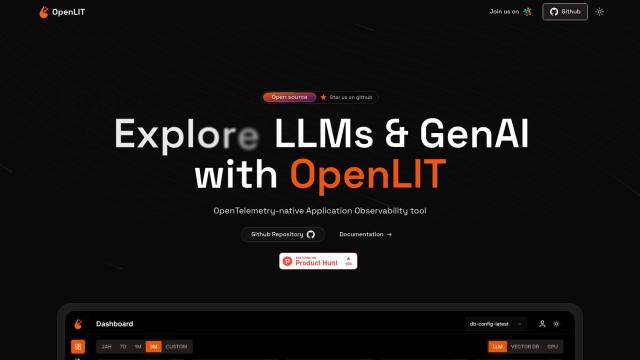

OpenLIT

If you need an open-source tool to monitor and optimize large language models, OpenLIT is worth a look. Built on OpenTelemetry, it collects and aggregates performance metrics from LLM applications, providing real-time data and an interactive UI for visualizing performance and cost. Because its telemetry follows the OpenTelemetry standard, it can be exported to backends such as Datadog and Grafana Cloud. It is aimed at developers building GenAI and LLM apps.

Promptfoo

Another tool worth checking out is Promptfoo, a command-line interface (CLI) and library for evaluating and optimizing the quality of LLM output. It supports multiple LLM providers and lets you define custom evaluation metrics. You can run it from the command line to fit it into existing workflows or call it as a Node.js library, which makes it useful for individual developers and teams alike.
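One way to define a custom metric in Promptfoo is a Python assertion. The sketch assumes a file with the hypothetical name `check_length.py`, referenced from `promptfooconfig.yaml` as an assertion with `type: python` and `value: file://check_length.py`. Promptfoo calls the file's `get_assert(output, context)` function and accepts a bool, a float score, or a dict with `pass`, `score` and `reason` keys. The 50-word limit is an arbitrary illustration, not a recommended value.

```python
"""check_length.py: a hypothetical custom Promptfoo metric rewarding concise answers.

Assumes Promptfoo's Python assertion hook, which calls get_assert(output, context).
"""
from typing import Any, Dict

MAX_WORDS = 50  # hypothetical limit chosen only for illustration


def get_assert(output: str, context: Dict[str, Any]) -> Dict[str, Any]:
    """Pass if the model output stays within MAX_WORDS words."""
    word_count = len(output.split())
    passed = word_count <= MAX_WORDS
    # Full score within the limit; otherwise scale down in proportion to the overrun.
    score = 1.0 if passed else MAX_WORDS / word_count
    return {
        "pass": passed,
        "score": score,
        "reason": f"{word_count} words (limit {MAX_WORDS})",
    }
```

Returning a dict rather than a bare bool keeps a graded score and a human-readable reason alongside the pass/fail result.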

Langfuse

For end-to-end LLM engineering, Langfuse offers a platform with tracing, prompt management, evaluation and analytics. It supports multiple SDKs and frameworks and provides insights into metrics such as cost, latency and quality. Langfuse holds security certifications and can be self-hosted for maximum flexibility.

Superpipe

Finally, if you want to optimize LLM pipelines, check out Superpipe. It lets you build, test and run pipelines on your own infrastructure to cut costs and improve results. With Superpipe Studio, you can manage datasets, run experiments and monitor pipelines with detailed observability tools, which makes it a good option for experimentation and optimization.