Question: How can I integrate large language models into my enterprise search and insights platform?

LlamaIndex

If you want to integrate large language models into your enterprise search and insights system, LlamaIndex is a strong option. It connects your own data sources to LLMs, with support for more than 160 data sources and 40 vector, document, graph and SQL database providers. LlamaIndex includes tools for data ingestion, indexing and querying, and suits use cases such as financial services analysis, advanced document intelligence and enterprise search. It has a free tier as well as more powerful enterprise options, and it offers Python and TypeScript packages for integration.
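Ingestion in a pipeline like this usually means splitting documents into overlapping chunks before they are embedded and indexed. The sketch below shows that step in plain Python. It is a generic illustration, not LlamaIndex's API; the function name `chunk_words` and the default `size`/`overlap` values are hypothetical choices.

```python
from __future__ import annotations


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Hypothetical helper: split text into word chunks that overlap by `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap  # how far each new chunk's start advances
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # last chunk already reaches the end
            break
    return chunks


print(chunk_words("w0 w1 w2 w3 w4 w5 w6 w7 w8 w9", size=4, overlap=1))
```

The overlap keeps a sentence that straddles a boundary retrievable from either neighbouring chunk; real frameworks typically split on tokens or sentences rather than whitespace.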

Glean

Another good option is Glean, which uses Retrieval-Augmented Generation (RAG) to produce answers grounded in your enterprise data. Glean builds a knowledge graph of enterprise content, people and interactions, and offers no-code generative AI agents, assistants and chatbots. The platform is aimed at engineering, support and sales teams, helping them get more done by giving them fast access to the right information and answers.
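The core RAG loop is: retrieve the enterprise documents most relevant to a question, then pass them to the LLM as context. The sketch below shows only the retrieve-and-prompt half, using bag-of-words cosine similarity as a stand-in for a real embedding model. It is not Glean's implementation; the names `retrieve` and `build_prompt` and the prompt wording are hypothetical, and no LLM is called.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a stand-in for a real embedding model.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Hypothetical retriever: return the ids of the k most similar documents."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(docs[d]))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Hypothetical prompt builder: ground the question in the retrieved context."""
    context = "\n".join(f"[{i}] {docs[i]}" for i in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = {
    "vpn": "How to connect to the corporate VPN from home",
    "pto": "Requesting paid time off in the HR portal",
    "expense": "Submitting expense reports for travel",
}
print(build_prompt("how do I connect to the VPN", docs, k=1))
```

The resulting prompt string would then be sent to whichever LLM the platform uses.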

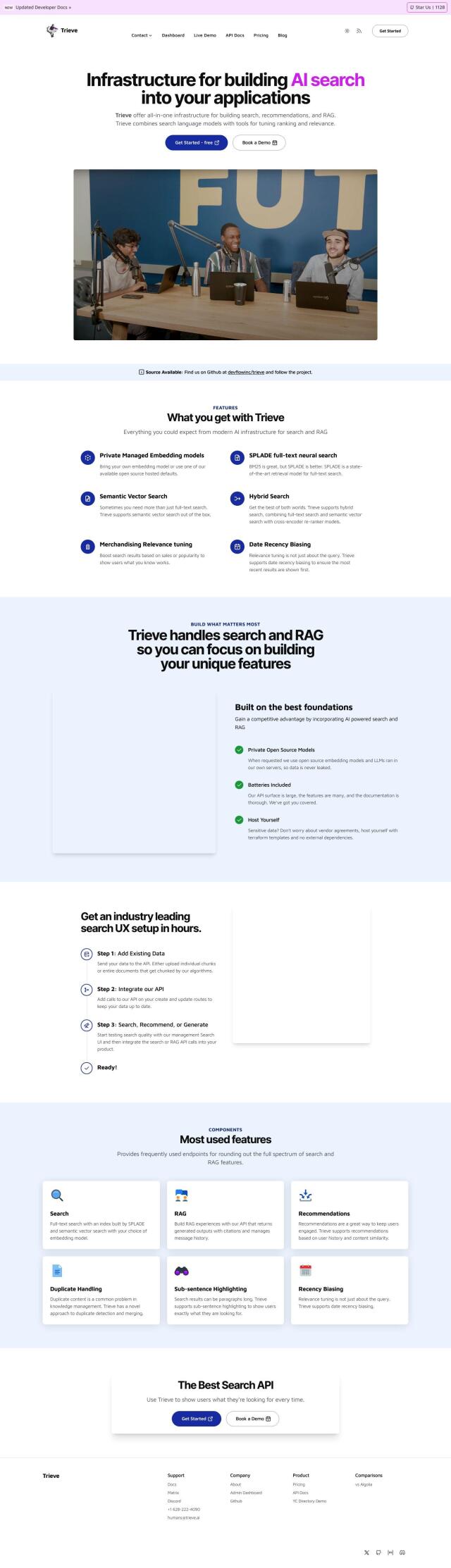

Trieve

If you need more advanced search, Trieve offers a full-stack foundation for building search, recommendation and RAG experiences. It includes private managed embedding models, semantic vector search and hybrid search tools. Trieve suits use cases that need semantic search and re-ranker models, and it can be deployed flexibly using Terraform templates. It has a free tier and several paid tiers for different needs.
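Hybrid search merges a keyword ranking with a vector (semantic) ranking. One common way to combine them is reciprocal rank fusion (RRF), sketched below. This is a generic illustration, not necessarily how Trieve scores results; the function name and the conventional constant `k=60` are assumptions.

```python
from __future__ import annotations

from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Hypothetical helper: fuse ranked id lists; each appearance adds 1 / (k + rank)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["a", "b", "c"]  # e.g. from a full-text index
vector_hits = ["b", "c", "a"]   # e.g. from a vector index
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

RRF only uses ranks, so it avoids normalizing keyword and vector scores that live on different scales; a re-ranker model can then reorder the fused top results.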

ClearGPT

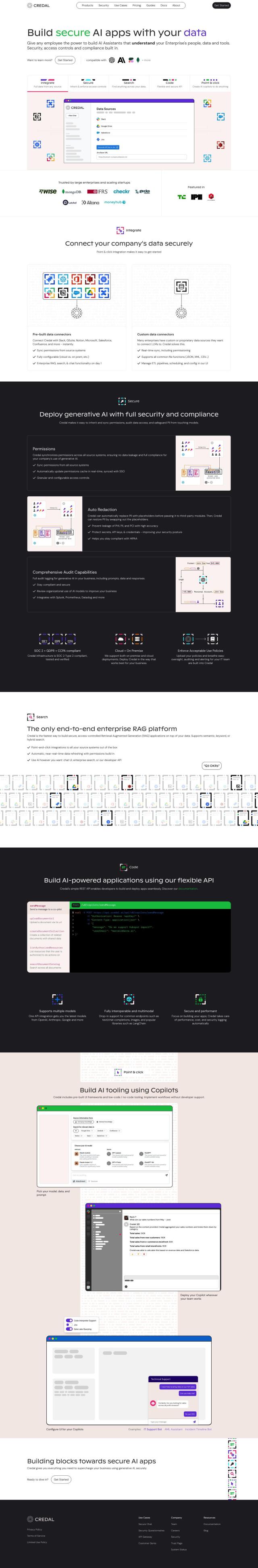

ClearGPT is another customizable, secure platform built for internal enterprise use. It is marketed as offering top model performance and includes features such as role-based access, data governance and a human-feedback reinforcement loop. ClearGPT is designed to protect data and IP while letting data-science teams use the latest models without vendor lock-in. It's aimed at automating work and increasing productivity across business units.
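Role-based access matters in enterprise search because a user should never receive an answer built from documents they cannot open. A minimal sketch of filtering candidate documents by role before they reach the LLM is shown below. It is a generic pattern, not ClearGPT's API; the `Document` type, its `allowed_roles` field and `visible_documents` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    # Hypothetical record: which roles may see this document.
    doc_id: str
    text: str
    allowed_roles: frozenset[str]


def visible_documents(docs: list[Document], user_roles: set[str]) -> list[Document]:
    """Hypothetical filter: keep documents sharing at least one role with the user."""
    return [d for d in docs if d.allowed_roles & user_roles]


docs = [
    Document("handbook", "Company handbook", frozenset({"employee"})),
    Document("salaries", "Salary bands", frozenset({"hr"})),
]
print([d.doc_id for d in visible_documents(docs, {"employee"})])
```

Applying the filter before retrieval results are passed to the model keeps restricted text out of the prompt entirely.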