Question: I'm looking for a deep learning framework that supports multiple neural network architectures and is easy to understand and debug.

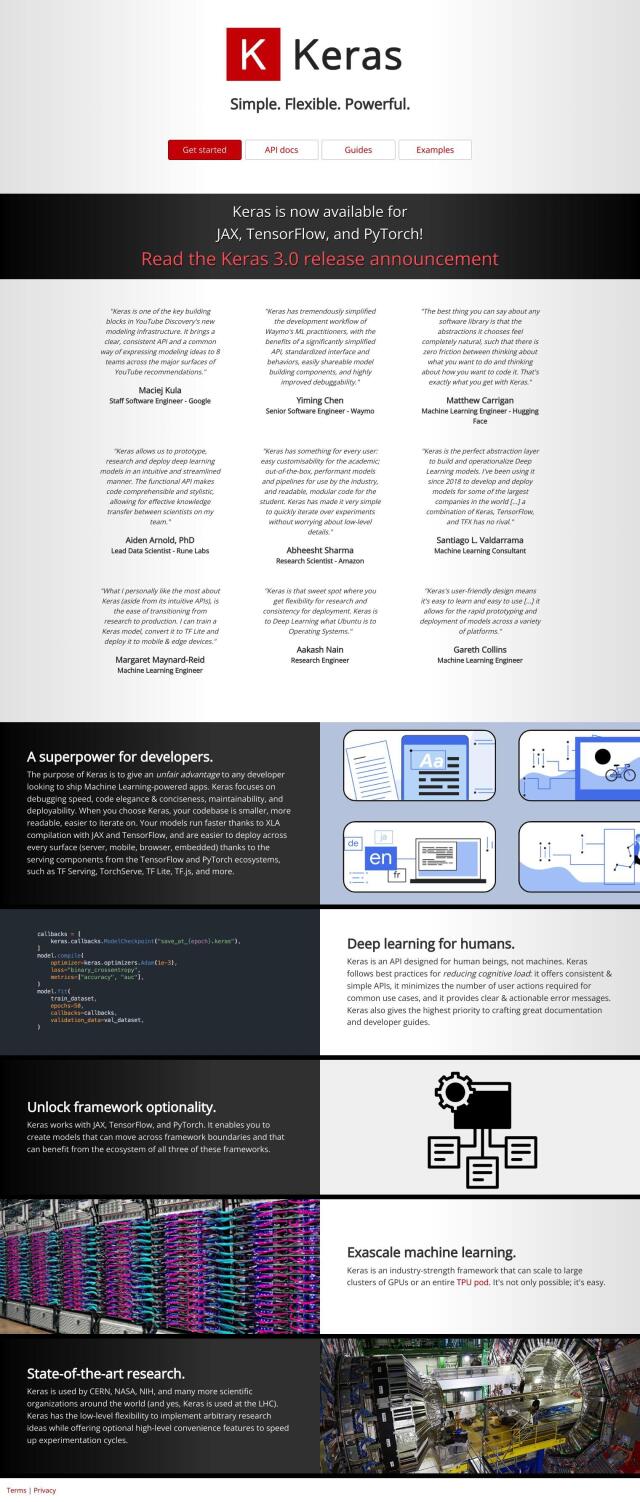

Keras

Keras is a deep learning API designed to be fast to code and fast to debug. Its consistent, easy-to-use API reduces cognitive load and gives helpful error messages. Keras runs on several backend frameworks, including TensorFlow, PyTorch and JAX, which makes it a good choice for large industrial applications as well as small projects. Its documentation and tutorials include 150+ code examples, so it is approachable for developers of all skill levels.

PyTorch

Another top contender is PyTorch, which is also highly flexible and easy to use. It has a friendly front end, distributed training support and a thriving ecosystem of tools and libraries. With TorchScript, PyTorch can switch between eager mode, where operations run immediately and are easy to inspect, and graph mode. It is used for a variety of tasks, including computer vision and natural language processing. It also supports model interpretability and has good documentation and community resources.
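To make the eager versus graph distinction concrete, here is a minimal toy sketch in plain Python. It is not PyTorch's API: `eager_forward`, `Node` and the placeholder names are hypothetical, chosen only to show why eager code is easier to step through while a graph is built first and run later.

```python
import operator


def eager_forward(x, w, b):
    """Eager style: every operation runs immediately."""
    product = x * w        # the intermediate value exists right away
    result = product + b   # so it can be printed or inspected in a debugger
    return result, product


class Node:
    """Hypothetical deferred operation in a tiny computation graph."""

    def __init__(self, op, inputs):
        self.op = op
        self.inputs = inputs  # each input is another Node or a placeholder name

    def evaluate(self, feed):
        # Nothing is computed until evaluate() is called with concrete values.
        values = [
            item.evaluate(feed) if isinstance(item, Node) else feed[item]
            for item in self.inputs
        ]
        return self.op(*values)


# Graph style: describe x * w + b first, run it later.
graph = Node(operator.add, [Node(operator.mul, ["x", "w"]), "b"])

eager_result, eager_intermediate = eager_forward(2.0, 3.0, 1.0)
graph_result = graph.evaluate({"x": 2.0, "w": 3.0, "b": 1.0})
```

Both paths compute the same value, but only the eager version exposes the intermediate product as an ordinary variable at the moment it is computed, which is what makes eager mode convenient for debugging.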

TensorFlow

TensorFlow provides a flexible environment for building and running machine learning models. It offers high-level APIs such as Keras for building models, eager execution for rapid iteration and debugging, and distributed training through the Distribution Strategy API (`tf.distribute.Strategy`). TensorFlow suits a broad range of tasks and is widely used in tech, health care and education. Its resources include interactive code samples and tutorials, so it works for beginners and experts alike.
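For readers unfamiliar with distributed training, the following plain-Python sketch shows roughly the idea behind synchronous data-parallel training: each replica computes a gradient on its own shard of the batch, the gradients are averaged, and one shared update is applied. This is a toy under stated assumptions, not TensorFlow's API; `shard_gradient`, `data_parallel_step`, the one-parameter model `y = w * x` and the data are all hypothetical.

```python
def shard_gradient(w, xs, ys):
    """Mean-squared-error gradient of the model y = w * x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_step(w, xs, ys, num_replicas, lr):
    """One synchronous update: per-replica gradients are averaged, then applied.

    Assumes len(xs) is divisible by num_replicas so every shard has equal size.
    """
    shard_size = len(xs) // num_replicas
    grads = []
    for replica in range(num_replicas):
        start, end = replica * shard_size, (replica + 1) * shard_size
        # In a real system each replica would run on its own device.
        grads.append(shard_gradient(w, xs[start:end], ys[start:end]))
    mean_grad = sum(grads) / num_replicas  # stands in for an all-reduce
    return w - lr * mean_grad


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by the true weight w = 2

w_two_replicas = data_parallel_step(0.0, xs, ys, num_replicas=2, lr=0.01)
w_one_replica = data_parallel_step(0.0, xs, ys, num_replicas=1, lr=0.01)
```

With equal-sized shards, averaging the per-replica gradients gives the same update as computing the gradient over the full batch on one device, which is why this style of training can scale out without changing the result of each step.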