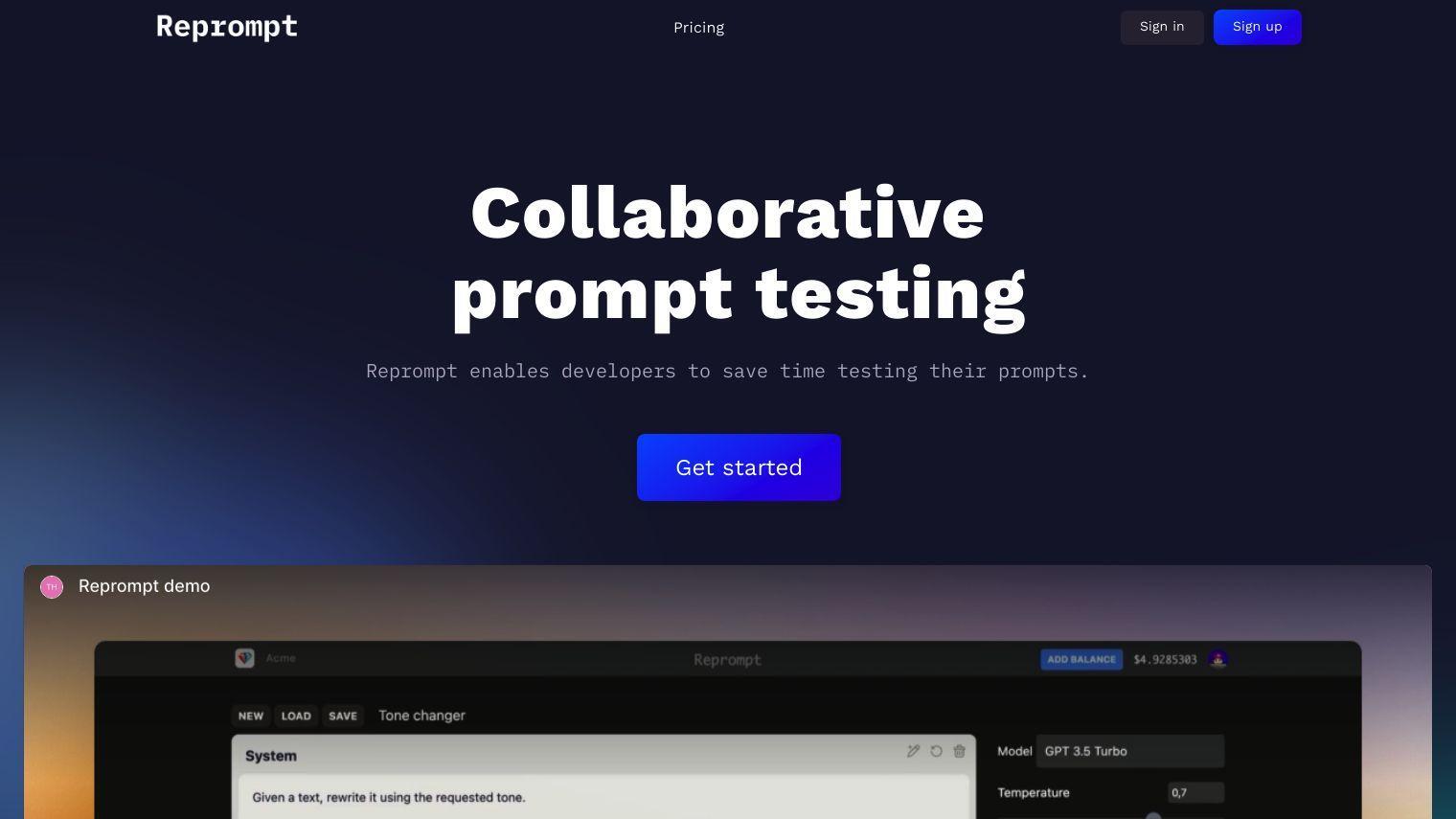

Reprompt is designed to make prompt testing easier for developers building AI applications. By generating a range of responses and analyzing errors, it helps developers optimize their large language model (LLM) apps more quickly. The service cuts the time it takes to make data-driven prompt decisions, process more data, and spot problems.

Among its features are:

- Multi-Scenario Testing: Run multiple scenarios at the same time to speed up debugging.

- Anomaly Detection: Spot errors and other problems with your prompts.

- Version Comparison: Compare against previous versions so you can make changes with confidence.

Reprompt uses enterprise-grade security, including 256-bit AES encryption, among other safeguards.

Pricing is based on a credit system, with costs that vary by model. For example, the GPT-4 8K model costs $0.045 per 1,000 prompt tokens and $0.09 per 1,000 completion tokens. Other models include gpt-3.5-turbo, Ada, Babbage, Curie and Davinci, with prices ranging from $0.0006 to $0.03 per 1,000 tokens.
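To make the per-token pricing concrete, here is a minimal Python sketch that estimates the cost of a single GPT-4 8K call from the rates quoted above. It assumes prompt and completion tokens are billed separately and proportionally (no rounding up to whole 1,000-token blocks), and it works in dollars rather than Reprompt credits, since the credit-to-dollar conversion isn't stated here. The function name `estimate_cost` is hypothetical and not part of any Reprompt API.

```python
from decimal import Decimal

# Per-1,000-token rates for the GPT-4 8K model as quoted in the article (USD).
GPT4_8K_PROMPT_RATE = Decimal("0.045")
GPT4_8K_COMPLETION_RATE = Decimal("0.09")


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  prompt_rate: Decimal = GPT4_8K_PROMPT_RATE,
                  completion_rate: Decimal = GPT4_8K_COMPLETION_RATE) -> Decimal:
    """Hypothetical helper: estimate one call's cost, billing prompt and
    completion tokens separately and proportionally (assumption)."""
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    prompt_cost = Decimal(prompt_tokens) / 1000 * prompt_rate
    completion_cost = Decimal(completion_tokens) / 1000 * completion_rate
    return prompt_cost + completion_cost


if __name__ == "__main__":
    # A 1,500-token prompt with a 500-token completion:
    # 1.5 * 0.045 + 0.5 * 0.09 = 0.0675 + 0.045 = 0.1125
    print(estimate_cost(1500, 500))  # prints 0.1125
```

`Decimal` is used instead of `float` so that sub-cent amounts add up exactly; swapping in another model's rates only requires passing different `prompt_rate` and `completion_rate` values.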

Reprompt is aimed at developers and teams who want to automate their AI prompt testing, which should make results faster and more accurate. By providing a reliable and secure foundation, Reprompt aims to make prompt optimization more efficient and less stressful. Visit the company's website to learn more and try out your prompts.

Published on June 14, 2024