Question: Can you recommend a tool that helps optimize large language model apps by testing prompts and identifying errors?

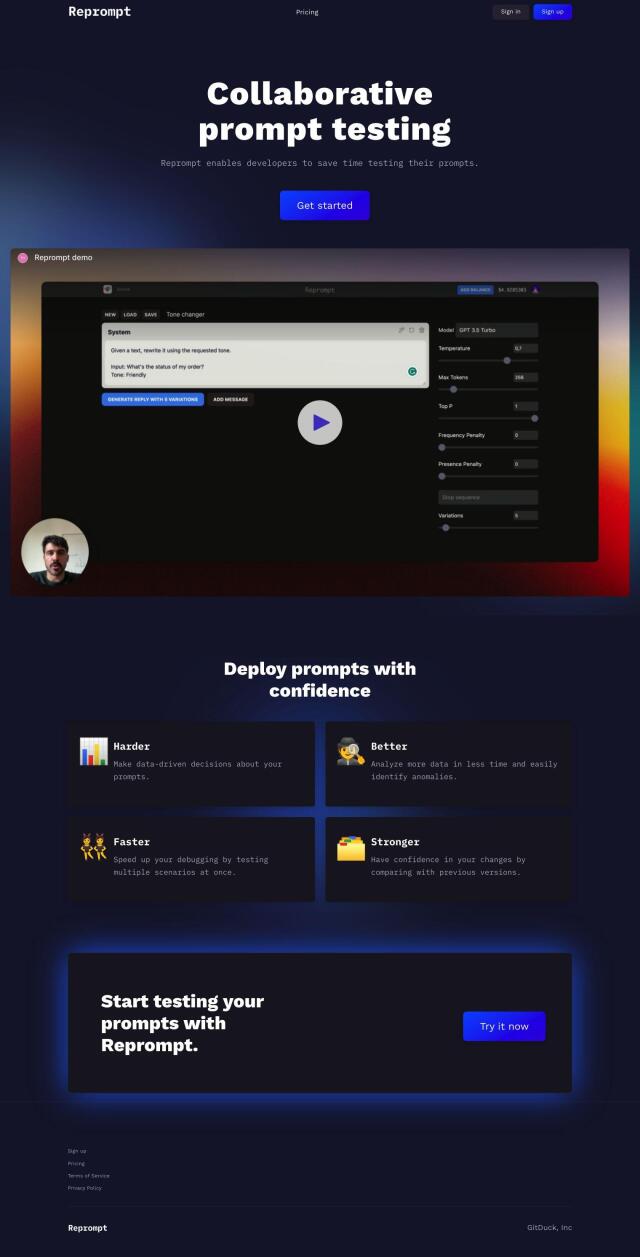

Reprompt

If you need a tool to optimize large language model (LLM) apps by testing prompts and pinpointing where they fail, Reprompt could be a good option. Reprompt reduces developers' prompt testing work by generating many responses, analyzing errors and flagging anomalies. It can also perform multi-scenario testing, anomaly detection and version comparison to help you make sure your LLM apps are reliable. The service charges on a credit system, with prices starting at $0.0006 per 1,000 tokens.
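To get a feel for what the quoted starting price means in practice, here is a rough back-of-the-envelope sketch. It assumes a flat rate at the $0.0006 per 1,000 tokens figure; how credits actually map to tokens is not described here, so treat `estimate_cost` as a hypothetical helper, not Reprompt's billing logic.

```python
# Assumption: a flat rate at the quoted *starting* price.
# Real credit-based billing may differ from this simple estimate.
STARTING_PRICE_PER_1K_TOKENS = 0.0006  # USD, the starting figure quoted above


def estimate_cost(total_tokens: int,
                  price_per_1k: float = STARTING_PRICE_PER_1K_TOKENS) -> float:
    """Return an approximate cost in USD for a given number of tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1000 * price_per_1k


# Example: a test run that consumes one million tokens in total.
cost = estimate_cost(1_000_000)
print(f"Estimated cost: ${cost:.2f}")
```

At that rate, a test run of one million tokens would come to roughly 60 cents.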

PROMPTMETHEUS

A more comprehensive option is PROMPTMETHEUS, a one-stop shop for writing, testing, optimizing and deploying prompts across more than 80 LLMs from different companies. PROMPTMETHEUS includes a prompt toolbox, performance testing and a mechanism for deploying prompts to custom endpoints. It also integrates with services like Notion, Zapier and Airtable. The service has several pricing levels, including a free option for casual use and more expensive options for teams and enterprises.

Langtail

If you prefer a no-code approach, Langtail offers a collection of tools for debugging, testing and deploying LLM prompts. Its features include refining prompts with variables, running tests to avoid surprises, and deploying prompts as API endpoints. Langtail also comes with a no-code playground and verbose logging to help you build and test AI apps. The service is available in a free tier for small businesses and a Pro tier costing $99 per month.
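If it helps to picture what "prompts with variables" and "running tests" mean, here is a minimal, tool-agnostic sketch in plain Python. It does not use Langtail's API; the template, the `fake_model` stand-in and the test cases are all hypothetical and only illustrate the general idea.

```python
from string import Template
from typing import Callable

# Hypothetical prompt template; $tone and $product are illustrative variables.
PROMPT = Template("Write a $tone one-sentence description of $product.")


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return f"[model output for: {prompt}]"


def run_tests(template: Template,
              cases: list[tuple[dict[str, str], Callable[[str], bool]]],
              model: Callable[[str], str]) -> list[tuple[dict[str, str], bool]]:
    """Fill the template for each case, call the model, and apply the check."""
    results = []
    for variables, check in cases:
        prompt = template.substitute(variables)
        output = model(prompt)
        results.append((variables, check(output)))
    return results


# Each case pairs a set of variable values with a simple pass/fail check.
CASES = [
    ({"tone": "friendly", "product": "a kettle"}, lambda out: "kettle" in out),
    ({"tone": "formal", "product": "a laptop"}, lambda out: len(out) < 200),
]

results = run_tests(PROMPT, CASES, fake_model)
for variables, passed in results:
    print(variables, "PASS" if passed else "FAIL")
```

In a real setup you would swap `fake_model` for a call to your LLM provider and rerun the same cases whenever the prompt changes, which is the kind of regression check these tools automate.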

Humanloop

Last, Humanloop is designed for managing and optimizing the development of LLM applications. It helps you sidestep problems like inefficient workflows and manual evaluation with its collaborative prompt management system, version control and evaluation suite for debugging and performance monitoring. Humanloop supports several LLM providers and offers Python and TypeScript SDKs for integration. It's aimed at product teams and developers who want to increase efficiency and collaboration in AI feature development.