Question: Is there a platform that offers multi-scenario testing for AI prompt development to speed up debugging?

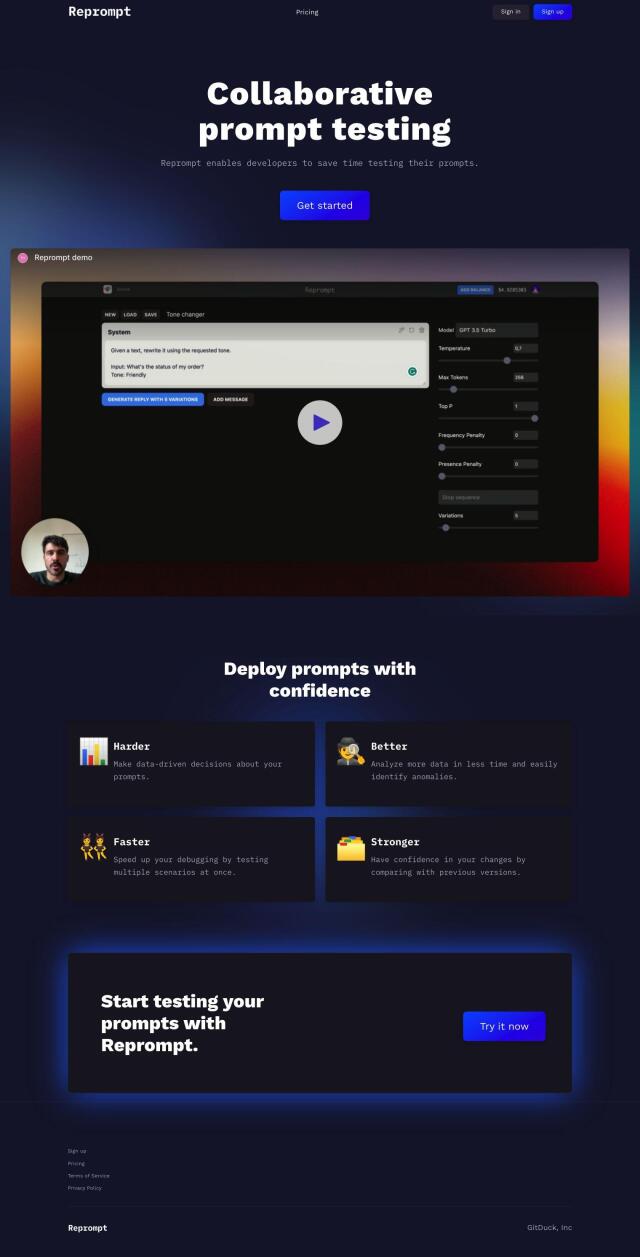

Reprompt

If you want a platform that runs multi-scenario tests for AI prompt development and makes debugging easier, Reprompt is a good choice. It automates prompt testing for developers, showing multiple responses, diagnosing errors and flagging anomalies. Data is protected with 256-bit AES encryption. Pricing is per credit, so costs vary depending on the model you use.
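To make "multi-scenario testing" concrete, here is a minimal, tool-agnostic sketch in Python. It is not Reprompt's API or that of any product listed here. The names `fake_model`, `run_scenarios`, `TEMPLATE`, `SCENARIOS` and `CHECKS` are all hypothetical, and the model call is a stub you would replace with your own provider's client. The idea is to fill one prompt template with several inputs, collect each response, and flag the ones that fail simple checks.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call (assumption, not a real API)."""
    if "refund" in prompt.lower():
        return "You can request a refund within 30 days."
    return ""  # simulate an anomalous empty response


def run_scenarios(
    template: str,
    scenarios: dict[str, dict[str, str]],
    model: Callable[[str], str],
    checks: dict[str, Callable[[str], bool]],
) -> list[dict]:
    """Run one prompt template against every scenario and record failed checks."""
    results = []
    for name, variables in scenarios.items():
        prompt = template.format(**variables)
        response = model(prompt)
        failed = [label for label, check in checks.items() if not check(response)]
        results.append({"scenario": name, "response": response, "failed": failed})
    return results


# Illustrative template, scenarios and checks (all made up for this sketch).
TEMPLATE = "Answer the customer question briefly: {question}"
SCENARIOS = {
    "refund": {"question": "How do I get a refund?"},
    "shipping": {"question": "Where is my order?"},
}
CHECKS = {
    "non_empty": lambda r: bool(r.strip()),
    "short": lambda r: len(r) <= 200,
}

results = run_scenarios(TEMPLATE, SCENARIOS, fake_model, CHECKS)
for r in results:
    status = "ok" if not r["failed"] else "FLAGGED: " + ", ".join(r["failed"])
    print(f"{r['scenario']}: {status}")
```

With the stub above, the `shipping` scenario is flagged because the stub returns an empty string for it. Hosted platforms typically layer logging, side-by-side comparison and versioning on top of a loop like this.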

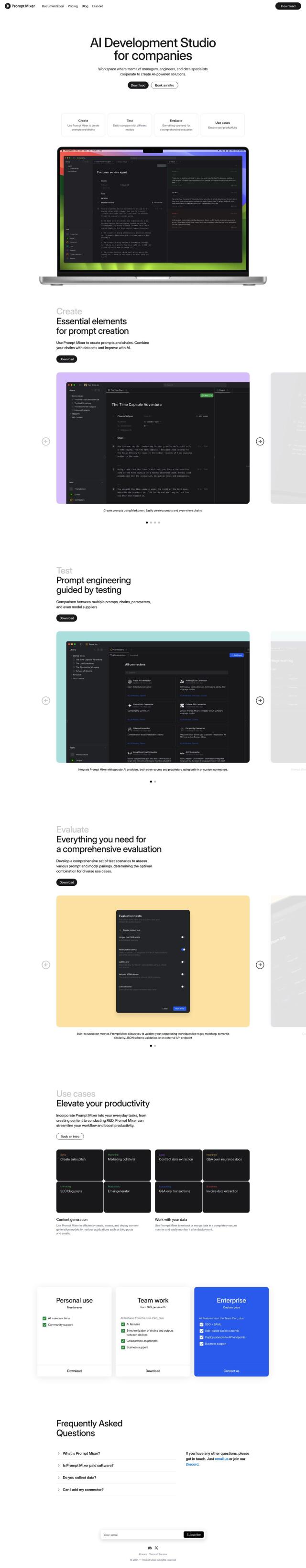

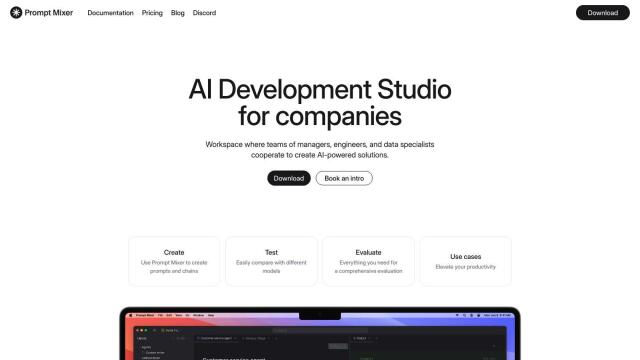

Prompt Mixer

Another good option is Prompt Mixer, a collaborative environment for building AI features. It lets managers, engineers and data scientists work together on AI solutions, including building and testing prompts. The service has features like automated version control, AI suggestions and the ability to run custom JavaScript or Python code. It can use multiple AI providers and offers detailed evaluation metrics, so it's adaptable to a wide range of use cases.

Langtail

If you want logging and parameters you can tweak, Langtail is a full-featured suite of tools for debugging, testing and deploying LLM prompts. It has a no-code playground for writing and running prompts, and its adjustable parameters and test suites help you check that your AI products behave reliably. Langtail offers multiple pricing levels, including a free tier for small businesses and solopreneurs.

HoneyHive

Last, HoneyHive is a service for evaluating, testing and observing AI services. Its features include automated CI testing, observability with production pipeline monitoring, and dataset curation. HoneyHive can use a wide range of models through integrations with common GPU clouds, and its free developer plan for individuals and researchers makes it a good fit for small teams.