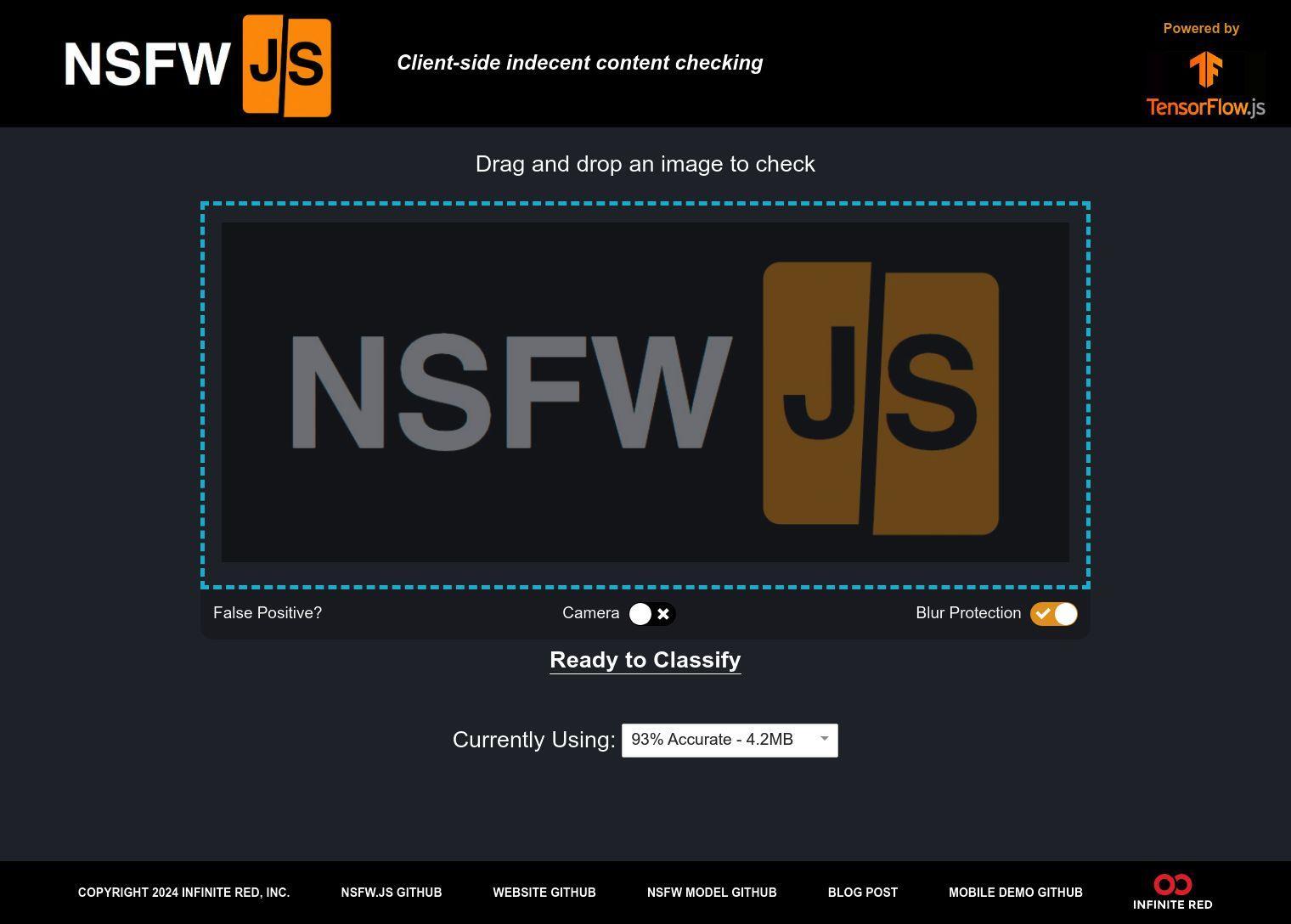

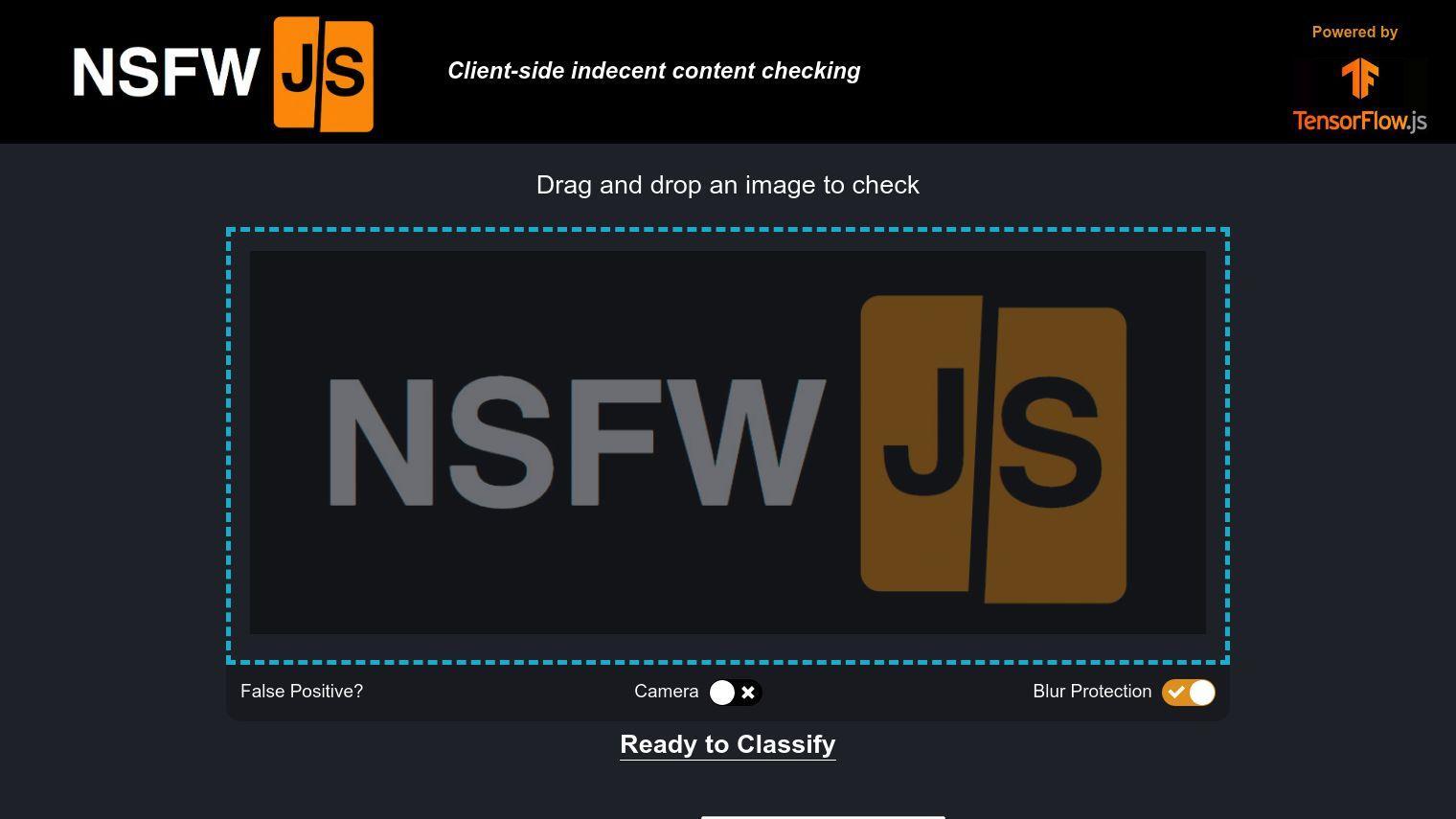

NSFW JS is a JavaScript library that detects inappropriate images directly in the viewer's browser. It's designed to help people quickly flag and screen out unwanted content without relying on a server.

Because NSFW JS checks for indecent content on the client, images never have to be sent to a server, which helps protect privacy. The model is still being improved; the current version reports a 93% accuracy rate with a 4.2 MB model size.
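
Rather than returning a single yes/no verdict, the model scores an image against several categories. The sketch below is not the library's own code: it assumes predictions shaped like the `{ className, probability }` objects that nsfwjs's `classify()` resolves to, using the category names `Drawing`, `Hentai`, `Neutral`, `Porn`, and `Sexy`, and shows one way to pick the most likely category. The `topPrediction` helper and the sample numbers are hypothetical.

```typescript
// Shape of one prediction; assumed to match what nsfwjs's classify() resolves to.
interface Prediction {
  className: string;   // e.g. "Neutral", "Drawing", "Sexy", "Hentai", "Porn"
  probability: number; // between 0 and 1
}

// Hypothetical helper: return the most likely category, whether or not
// the list arrives sorted. Throws on an empty list.
function topPrediction(predictions: Prediction[]): Prediction {
  if (predictions.length === 0) {
    throw new Error("no predictions to compare");
  }
  return predictions.reduce((best, p) => (p.probability > best.probability ? p : best));
}

// Made-up numbers for illustration, not real model output.
const sample: Prediction[] = [
  { className: "Neutral", probability: 0.81 },
  { className: "Drawing", probability: 0.12 },
  { className: "Sexy", probability: 0.05 },
  { className: "Hentai", probability: 0.01 },
  { className: "Porn", probability: 0.01 },
];

console.log(topPrediction(sample).className); // prints "Neutral"
```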

Some of its features include:

- Camera: Can take input from a camera for image analysis

- Blur Protection: Can blur images the model flags, so viewers aren't exposed to them
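
How the demo implements blurring isn't described here, so the following is only a sketch of the general idea under stated assumptions: predictions in the `{ className, probability }` shape nsfwjs's `classify()` returns, an unsafe group of `Porn`, `Hentai`, and `Sexy` (our choice, not the library's), and a hypothetical `blurFilter` helper that produces a CSS `filter` value. The 0.5 threshold and 20 px radius are arbitrary defaults.

```typescript
interface Prediction {
  className: string;
  probability: number;
}

// Categories treated as unsafe in this sketch; the grouping is an assumption.
const UNSAFE = new Set(["Porn", "Hentai", "Sexy"]);

// Combined probability the model assigns to the unsafe categories.
function unsafeScore(predictions: Prediction[]): number {
  return predictions
    .filter((p) => UNSAFE.has(p.className))
    .reduce((sum, p) => sum + p.probability, 0);
}

// Hypothetical helper: CSS filter value for an image. Blurred when the unsafe
// score reaches the threshold, otherwise no filter at all.
function blurFilter(predictions: Prediction[], threshold = 0.5, radiusPx = 20): string {
  return unsafeScore(predictions) >= threshold ? `blur(${radiusPx}px)` : "none";
}

const flagged: Prediction[] = [
  { className: "Porn", probability: 0.7 },
  { className: "Neutral", probability: 0.3 },
];

console.log(blurFilter(flagged)); // prints "blur(20px)"
```

In a browser, the returned string could be assigned to an image element's `style.filter` once its predictions are available; keeping the image blurred until classification finishes is a further design choice, not something the source specifies.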

Developers can use NSFW JS anywhere they need to moderate content. It could be especially useful for sites that rely on user-generated content and want to keep things family-friendly.
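
For user-generated content, one plausible pattern is to hold questionable uploads for human review instead of rejecting them outright, since a 93%-accurate model will still misjudge some images. This sketch assumes each upload already carries predictions in the `{ className, probability }` shape nsfwjs's `classify()` returns; the `Upload` type, the `triage` function, and the per-category limits are all hypothetical and would need tuning for a real site.

```typescript
interface Prediction {
  className: string;
  probability: number;
}

// Hypothetical upload record: an ID plus the model's predictions for it.
interface Upload {
  id: string;
  predictions: Prediction[];
}

// Illustrative per-category limits; categories not listed are never flagged.
const LIMITS = new Map<string, number>([
  ["Porn", 0.3],
  ["Hentai", 0.3],
  ["Sexy", 0.6],
]);

// An upload needs review if any listed category meets or exceeds its limit.
function needsReview(upload: Upload): boolean {
  return upload.predictions.some((p) => {
    const limit = LIMITS.get(p.className);
    return limit !== undefined && p.probability >= limit;
  });
}

// Split uploads into IDs that can be published and IDs for a moderator.
function triage(uploads: Upload[]): { approved: string[]; review: string[] } {
  const approved: string[] = [];
  const review: string[] = [];
  for (const upload of uploads) {
    if (needsReview(upload)) {
      review.push(upload.id);
    } else {
      approved.push(upload.id);
    }
  }
  return { approved, review };
}

const uploads: Upload[] = [
  { id: "a", predictions: [{ className: "Neutral", probability: 0.9 }, { className: "Sexy", probability: 0.1 }] },
  { id: "b", predictions: [{ className: "Neutral", probability: 0.55 }, { className: "Porn", probability: 0.45 }] },
];

console.log(triage(uploads)); // { approved: [ 'a' ], review: [ 'b' ] }
```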

There's no pricing information yet, but you can try the demos and browse the library's GitHub repository to see how it works and how well it performs. To incorporate it into your own project, check the website for usage details and the use cases it's suited for.

Published on June 13, 2024
