Question: I'm looking for a way to protect user privacy while still moderating content. Do you know of a client-side solution that can do this?

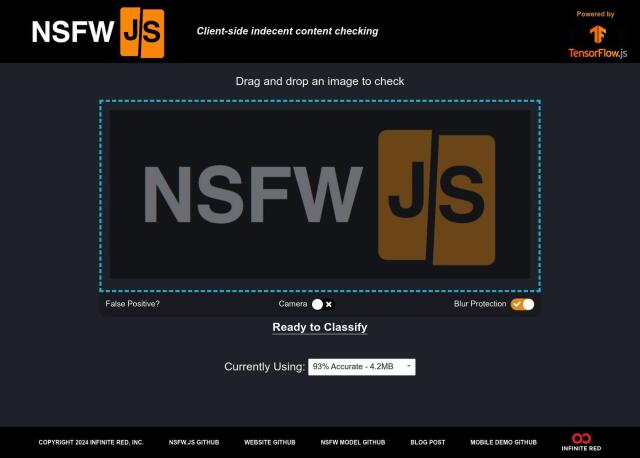

NSFW JS

If you need a client-side approach that protects privacy while still moderating content, NSFW JS could be just the ticket. This JavaScript library runs a model that detects indecent images directly in the browser, so photos never have to be sent to your server for screening. It can classify input from a camera, can blur images it flags, and the team updates the model frequently to keep it accurate.
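
For context, NSFW JS loads its model in the browser with `nsfwjs.load()` and classifies an image element with `model.classify(img)`. That call resolves to a list of `{ className, probability }` predictions over the classes Drawing, Hentai, Neutral, Porn, and Sexy. The TypeScript sketch below covers only the moderation decision that could follow. The `shouldBlur` helper and its thresholds are hypothetical choices for illustration and are not part of the library.

```typescript
// One prediction, mirroring the { className, probability } objects that
// nsfwjs's model.classify(img) resolves to.
type Prediction = {
  className: string;
  probability: number;
};

// Hypothetical policy: classes treated as unsafe and the probability at or
// above which an image gets blurred. These thresholds are illustrative
// assumptions, not values recommended by NSFW JS.
const BLUR_THRESHOLDS: Record<string, number> = {
  Porn: 0.6,
  Hentai: 0.6,
  Sexy: 0.8,
};

// Decide on the client whether to blur an image using only the classifier
// output. The image itself never needs to be uploaded anywhere.
function shouldBlur(predictions: Prediction[]): boolean {
  return predictions.some((p) => {
    if (!Object.prototype.hasOwnProperty.call(BLUR_THRESHOLDS, p.className)) {
      return false; // Classes such as Neutral or Drawing never trigger a blur.
    }
    return p.probability >= BLUR_THRESHOLDS[p.className];
  });
}

// Hand-written predictions for demonstration. In the browser these would
// come from `await model.classify(imgElement)`.
const sample: Prediction[] = [
  { className: "Neutral", probability: 0.85 },
  { className: "Drawing", probability: 0.1 },
  { className: "Porn", probability: 0.05 },
];

console.log(shouldBlur(sample)); // false
```

In a page, you would pass the result of `model.classify(img)` to `shouldBlur` and, if it returns true, apply something like a CSS `blur()` filter to the image. The photo is analyzed entirely on the user's device.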

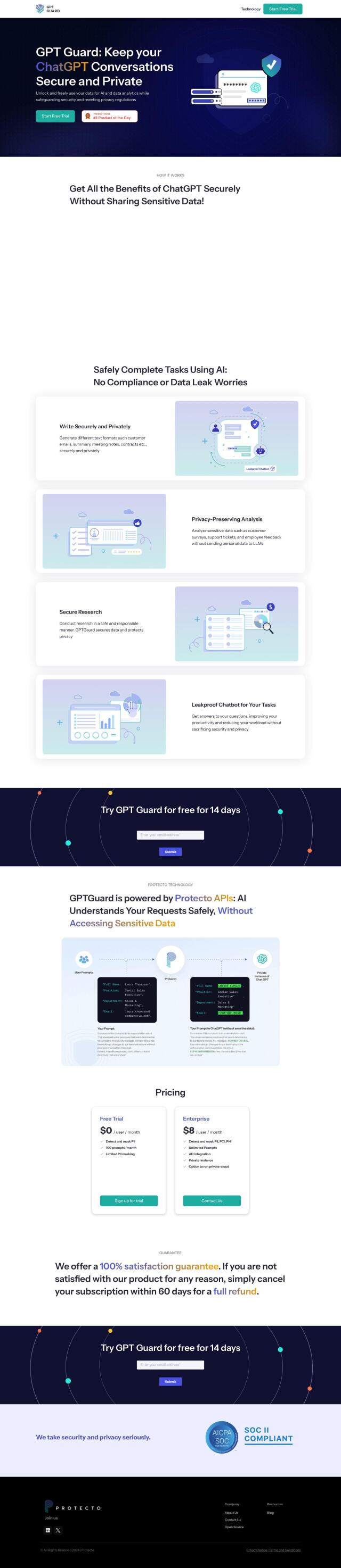

GPT Guard

Another option, if you use ChatGPT, is GPT Guard. It is designed to help you comply with privacy regulations by generating text securely and processing your data in a privacy-preserving way. That makes it useful for drafting emails and contracts that are sensitive, though not necessarily confidential, and there is a free trial if you want to try it out.
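
GPT Guard's internals aren't described here. However, one common way to keep sensitive text away from a hosted model is to mask it before the prompt leaves the client and restore it in the reply. The TypeScript sketch below illustrates that generic idea. `maskSensitive`, `unmask`, and the regex patterns are hypothetical and are not GPT Guard's API. Regexes alone miss many kinds of personal data, so treat this only as an illustration.

```typescript
// Masked text plus a table for restoring the original values.
interface MaskResult {
  masked: string;
  mapping: Record<string, string>;
}

// Illustrative patterns for two kinds of sensitive values (assumption:
// real PII detection needs far more than these regexes).
const PATTERNS: Array<[string, RegExp]> = [
  ["EMAIL", /[\w.+-]+@[\w-]+\.[\w.-]+/g],
  ["PHONE", /\+?\d[\d\s-]{7,}\d/g],
];

// Replace each sensitive match with a numbered placeholder such as [EMAIL_1].
function maskSensitive(text: string): MaskResult {
  const mapping: Record<string, string> = {};
  let masked = text;
  for (const [label, regex] of PATTERNS) {
    let counter = 0;
    masked = masked.replace(regex, (match) => {
      counter += 1;
      const placeholder = `[${label}_${counter}]`;
      mapping[placeholder] = match;
      return placeholder;
    });
  }
  return { masked, mapping };
}

// Put the original values back, e.g. into the model's reply.
function unmask(text: string, mapping: Record<string, string>): string {
  let restored = text;
  Object.keys(mapping).forEach((placeholder) => {
    restored = restored.split(placeholder).join(mapping[placeholder]);
  });
  return restored;
}

const userText = "Email jane.doe@example.com or call +1 555-123-4567 about the contract";
const result = maskSensitive(userText);
console.log(result.masked); // Email [EMAIL_1] or call [PHONE_1] about the contract
```

Only `result.masked` would be sent to the model, and `unmask` would be applied to the reply so the real values reappear only on the client.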

NuMind

If you want something more flexible, NuMind is a machine learning platform for building your own text-processing models without writing code. It offers privacy protection and supports a variety of use cases, including content moderation, sentiment analysis, and entity recognition. It's also very data efficient and significantly cheaper than GPT-4, so it could be a good choice for many tasks.