Question: Can you recommend a tool that can analyze images from a camera feed and detect indecent content?

Imagga

For detecting inappropriate content in a camera stream, Imagga offers a broad image recognition API that covers content moderation, visual search and facial recognition. The API can also classify images automatically, generate thumbnails and extract colors. Because its machine learning models can be trained on custom categories, Imagga can be applied across many industries, and it supports several deployment options, including cloud, on-premise and edge/mobile.

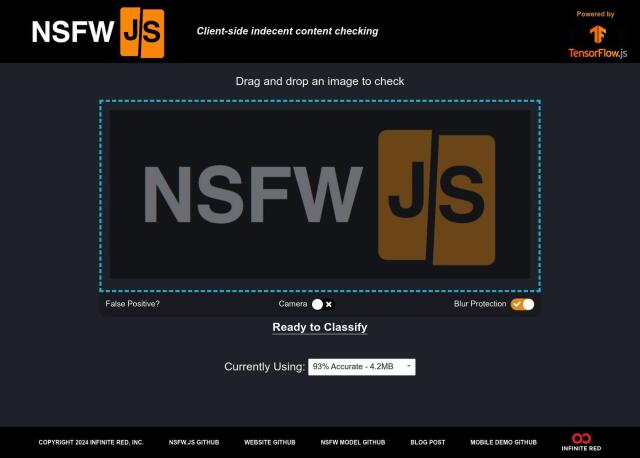

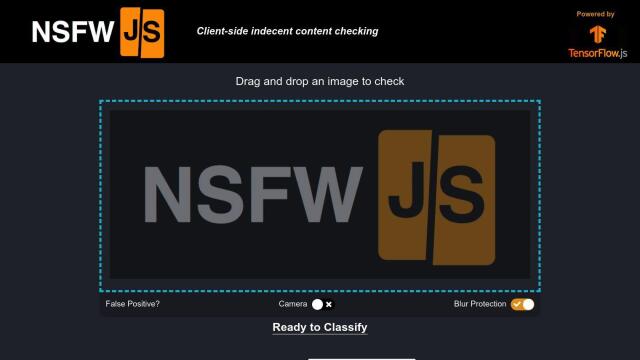

NSFW JS

Another contender is NSFW JS, a JavaScript library built on TensorFlow.js that runs indecency detection models directly in the web browser. Because images are classified on the user's device rather than uploaded to a server, it preserves privacy. It claims high accuracy, accepts camera input, and can blur flagged images to obscure them. It's geared toward developers building apps that need content moderation to keep things family friendly.
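To illustrate the blurring workflow, here is a minimal sketch of the decision step. It assumes the prediction shape that NSFW JS's `model.classify()` returns according to its README (an array of `{ className, probability }` entries over the classes Drawing, Hentai, Neutral, Porn and Sexy). The `shouldBlur` helper, the set of flagged classes and the 0.6 threshold are hypothetical choices for illustration, not part of the library. The browser calls are shown only as comments so the logic runs on its own.

```typescript
// Shape of each entry returned by NSFW JS's model.classify():
// a class label and its probability.
type Prediction = { className: string; probability: number };

// Hypothetical policy: classes treated as indecent. Tune for your app.
const FLAGGED_CLASSES = new Set<string>(["Porn", "Hentai", "Sexy"]);

// Hypothetical helper: returns true when the combined probability of the
// flagged classes meets or exceeds the threshold (assumed default 0.6).
function shouldBlur(predictions: Prediction[], threshold = 0.6): boolean {
  const flaggedScore = predictions
    .filter((p) => FLAGGED_CLASSES.has(p.className))
    .reduce((sum, p) => sum + p.probability, 0);
  return flaggedScore >= threshold;
}

// In a browser with nsfwjs loaded (not executed here), a camera loop
// might look roughly like:
//   const model = await nsfwjs.load();
//   const predictions = await model.classify(videoElement);
//   videoElement.style.filter = shouldBlur(predictions) ? "blur(20px)" : "none";

export { shouldBlur, Prediction };
```

Summing the flagged classes, rather than checking only the single top class, means a frame split between "Porn" and "Sexy" can still be blurred even when "Neutral" is the largest individual score.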