Question: I'm looking for a platform that can speed up ML model deployment and reduce infrastructure costs.

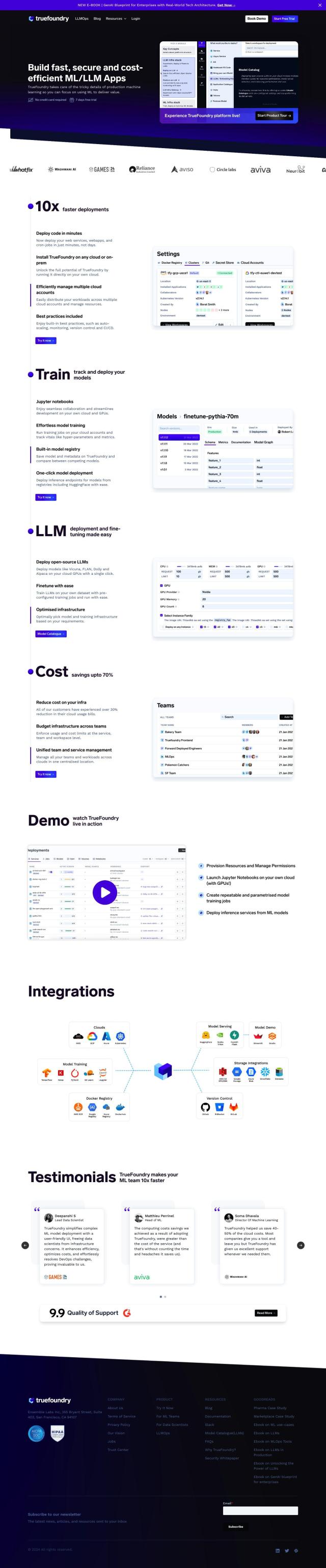

TrueFoundry

If you're looking for a platform to accelerate ML model deployment and lower infrastructure costs, TrueFoundry is a good choice. It speeds up ML and LLM development and deployment so you can get to market faster, with claimed cost savings of roughly 30-40%. With features like one-click model deployment, cost optimization, and support for both cloud and on-premise environments, TrueFoundry lets data scientists and engineers focus on delivering value rather than managing infrastructure.

Together

Another good option is Together, a cloud platform for fast and efficient development and deployment of generative AI models. It's a cost-effective way to reach enterprise scale, with training and inference optimizations like Cocktail SGD and FlashAttention-2, and scalable inference to handle high traffic. The platform supports many AI tasks and models, and claims costs up to 117x lower than AWS and up to 4x lower than other suppliers.
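The savings figures quoted so far use different scales: a percentage (30-40%) for TrueFoundry and multipliers (117x, 4x) for Together. The sketch below puts them on a common footing. It assumes that "Nx" means the bill is divided by N, which the vendors' wording may or may not intend, and it uses a hypothetical $10,000 monthly baseline that does not come from either vendor.

```python
def cost_after_percent_saving(baseline: float, percent: float) -> float:
    """Cost remaining after saving `percent` percent of the baseline."""
    return baseline * (1 - percent / 100)


def cost_after_multiplier_saving(baseline: float, factor: float) -> float:
    """Cost remaining if the bill is `factor` times lower (assumed meaning of 'Nx')."""
    return baseline / factor


def multiplier_to_percent(factor: float) -> float:
    """Express an 'Nx lower cost' claim as a percentage saving."""
    return (1 - 1 / factor) * 100


# Hypothetical monthly infrastructure bill, for illustration only.
BASELINE = 10_000.0

if __name__ == "__main__":
    for percent in (30, 40):
        remaining = cost_after_percent_saving(BASELINE, percent)
        print(f"{percent}% saving -> ${remaining:,.2f} remaining")

    for factor in (4, 117):
        remaining = cost_after_multiplier_saving(BASELINE, factor)
        as_percent = multiplier_to_percent(factor)
        print(f"{factor}x lower -> ${remaining:,.2f} remaining ({as_percent:.1f}% saving)")
```

Read this way, a multiplier claim is always a much larger percentage than it first sounds, so it is worth checking what workload and baseline each vendor measured before comparing the numbers directly.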

MLflow

MLflow is an open-source, end-to-end MLOps platform that simplifies the development and deployment of machine learning applications. It provides a single environment for managing the entire lifecycle of ML projects, including experiment tracking, model management, and support for popular deep learning libraries. Because MLflow is free and open source, it's a good option for improving collaboration, transparency, and efficiency in ML workflows without adding licensing costs.

Anyscale

For a platform that supports both traditional and generative AI models, check out Anyscale. Built on the open-source Ray framework, Anyscale is designed for high performance and efficiency, with features like workload scheduling, smart instance management, and GPU and CPU fractioning, which lets several workloads share a single device. It integrates with popular IDEs and offers a free tier with flexible pricing, making it a good option for keeping costs down on large-scale AI applications.