Question: I need a search solution that can process thousands of results automatically. Do you know of any options?

Exa

Exa is a search engine that uses embeddings and transformer-based models to deliver contextually relevant results. It accepts natural language queries and can process large volumes of data automatically, which makes it a good choice for developers who use large language models to create high-quality web content. Exa can also perform similarity searches by crawling and parsing the content at a given URL. It offers several pricing plans, including a free tier.
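
Whichever engine you pick, processing thousands of results usually means pulling them in pages rather than in one request. The sketch below shows that pattern in plain Python. It is not Exa's client API: `fetch_page` is a hypothetical callable you would write around your chosen search service, and `fake_fetch_page` is a synthetic stand-in used only so the example runs on its own.

```python
from typing import Callable, Iterator

# Hypothetical page fetcher: (query, offset, limit) -> list of result dicts.
# In practice this would wrap whichever search API you choose.
PageFetcher = Callable[[str, int, int], list[dict]]


def iter_results(fetch_page: PageFetcher, query: str, total: int,
                 page_size: int = 100) -> Iterator[dict]:
    """Yield up to `total` results, requesting them one page at a time."""
    offset = 0
    while offset < total:
        limit = min(page_size, total - offset)
        page = fetch_page(query, offset, limit)
        if not page:  # the backend ran out of results early
            break
        yield from page
        offset += len(page)


def fake_fetch_page(query: str, offset: int, limit: int) -> list[dict]:
    """Stand-in backend holding 2,500 synthetic results (demo only)."""
    available = 2500
    end = min(offset + limit, available)
    return [{"id": i, "title": f"{query} result {i}"} for i in range(offset, end)]


# Ask for 3,000 results; the stand-in backend only has 2,500.
results = list(iter_results(fake_fetch_page, "vector databases", total=3000))
print(len(results))
```

Because `iter_results` is a generator, downstream processing can start on the first page while later pages are still being fetched.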

Vespa

Vespa is another good option, particularly if you have large data sets. It combines vector search, lexical search, and search over structured data in one platform, so developers can build search applications that scale and incorporate AI models without a lot of extra work. With auto-elastic data management and machine-learned model inference, Vespa is designed to respond with low latency across a wide range of use cases.
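
To make the idea of combining lexical and vector search concrete, here is a minimal sketch of reciprocal rank fusion, one common way to merge two ranked lists. This is an illustration of the general technique, not Vespa's API or its ranking configuration; the function name, the document ids, and the constant `k = 60` are assumptions chosen for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into one ranked list."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank) for every document it contains
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for a stable order
    return sorted(scores, key=lambda d: (-scores[d], d))


lexical_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. from keyword matching
vector_hits = ["doc_c", "doc_a", "doc_d"]   # e.g. from nearest-neighbor search
fused = reciprocal_rank_fusion([lexical_hits, vector_hits])
print(fused)  # ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Documents that rank well in both lists rise to the top, which is the behavior a hybrid search setup is usually after.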

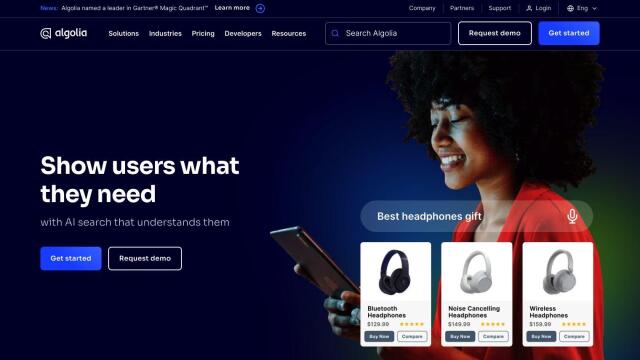

Algolia

If you want to personalize search results, Algolia provides AI-powered search infrastructure that combines keyword search with vector-based understanding. It can dynamically re-rank and personalize results based on user behavior, which makes it suitable for a wide range of industries. Algolia offers flexible pricing, including a Pay-As-You-Go option, and detailed documentation that makes integration easier.
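
As a rough picture of behavior-based re-ranking, the sketch below adds a damped boost from a user's click counts to each hit's base relevance score. It does not use Algolia's API or reflect how Algolia computes personalization; the `rerank` function, the `weight` parameter, and the sample data are all hypothetical.

```python
import math


def rerank(hits: list[dict], clicks: dict[str, int], weight: float = 0.1) -> list[dict]:
    """Re-order hits by base relevance plus a damped boost from click counts."""
    def boosted(hit: dict) -> float:
        # log1p dampens the boost so heavily clicked items do not dominate
        return hit["score"] + weight * math.log1p(clicks.get(hit["id"], 0))
    return sorted(hits, key=boosted, reverse=True)


hits = [
    {"id": "shoes-1", "score": 0.90},
    {"id": "shoes-2", "score": 0.85},
    {"id": "shoes-3", "score": 0.80},
]
user_clicks = {"shoes-3": 20}  # this user has clicked shoes-3 many times
reranked = rerank(hits, user_clicks)
print([h["id"] for h in reranked])  # ['shoes-3', 'shoes-1', 'shoes-2']
```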

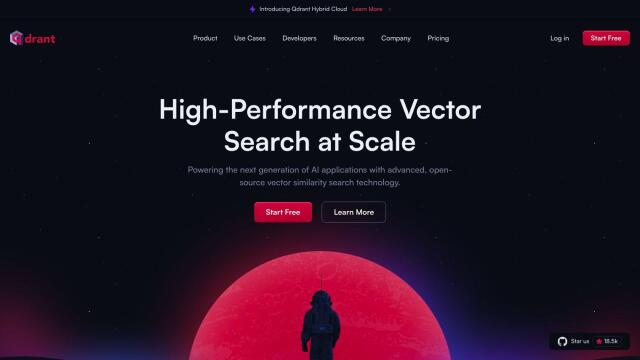

Qdrant

Finally, Qdrant is an open-source vector database and search engine built for fast, scalable vector similarity search. It has a cloud-native architecture and is optimized for high-performance processing of high-dimensional vectors. Qdrant can be deployed in a variety of ways and integrates with popular embedding models and frameworks, making it a good option for advanced search and recommendation systems.
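
For readers new to vector similarity search, this is a minimal brute-force sketch of the underlying idea: score every stored vector against the query by cosine similarity and keep the top matches. It does not use the Qdrant client, and a production engine would typically rely on an approximate nearest-neighbor index rather than a full scan; the helper names and the tiny three-dimensional vectors are made up for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], vectors: dict[str, list[float]],
          k: int = 2) -> list[tuple[str, float]]:
    """Return the k stored vectors most similar to the query, best first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in vectors.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings"; real ones usually have hundreds of dimensions.
vectors = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.2, 0.1],
    "cars": [0.0, 0.1, 0.9],
}
nearest = top_k([1.0, 0.0, 0.0], vectors, k=2)
print([doc_id for doc_id, _ in nearest])  # ['cats', 'dogs']
```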