Question: How can I find a Python library that provides access to state-of-the-art text and image embedding models?

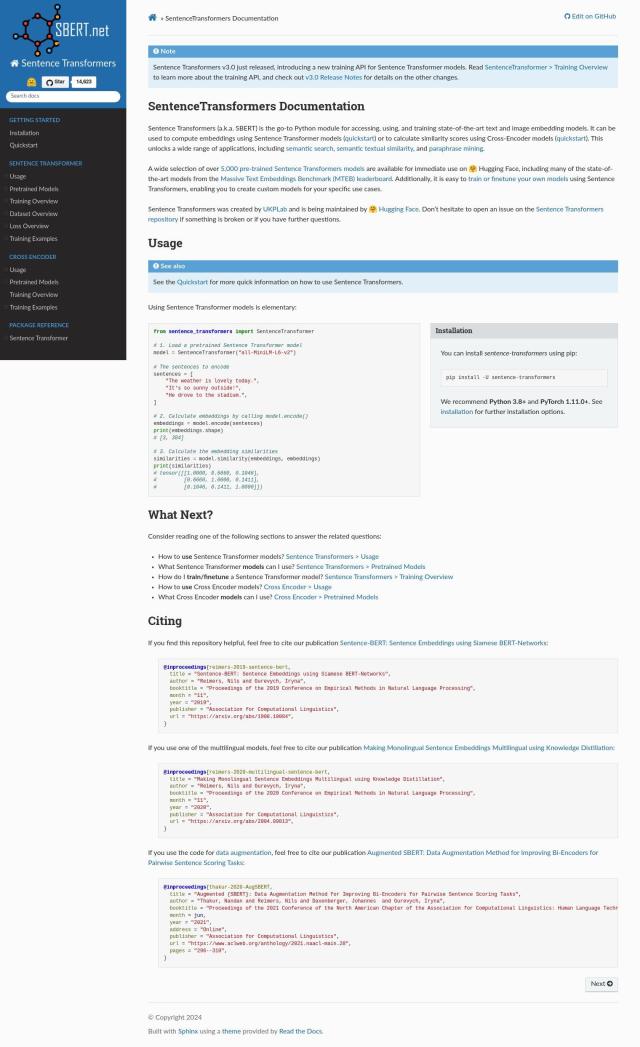

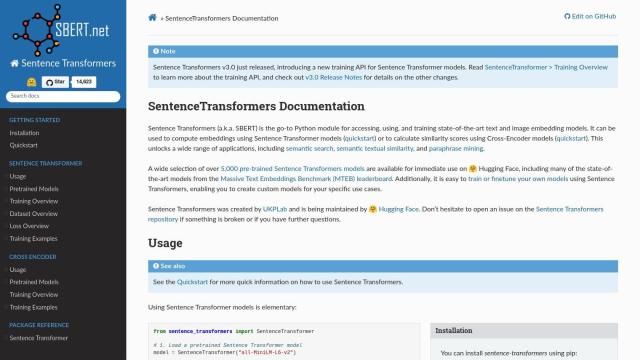

Sentence Transformers

If you're looking for a Python library that offers access to state-of-the-art text and image embedding models, Sentence Transformers is a great option. The library offers more than 5,000 pre-trained models, including those on the Massive Text Embeddings Benchmark (MTEB) leaderboard, and supports tasks like semantic search, semantic textual similarity and paraphrase mining. It also lets you train or fine-tune your own models, so it's a good all-purpose tool for natural language processing tasks.

Jina

Another great option is Jina, which is geared for multimodal data and offers more advanced features like multimodal and bilingual embeddings, rerankers, LLM-readers and prompt optimizers. Jina supports more than 100 languages and has features like auto fine-tuning for embeddings and open-source projects for managing multimodal data structures. Its support for multiple types of data makes it adaptable to a wide range of tasks.

deepset

If you want to use large language models in business applications, deepset has a powerful cloud platform and open-source Haystack framework. It supports a broad range of use cases, including Retrieval Augmented Generation, Conversational BI and Vector-Based Search, and offers pre-built templates and tools for rapid prototyping and deployment. It's geared for those who want to use LLMs for enterprise-level applications.

spaCy

Last, spaCy is a mature library for Natural Language Processing that supports more than 75 languages and 84 trained pipelines. It's got high-performance text processing abilities like named entity recognition, part-of-speech tagging and word vector computation. SpaCy is geared for large-scale information extraction tasks and can be easily integrated with transformers like BERT, so it's a good option for any NLP task.