Question: Can you recommend a tool that helps optimize machine learning models for on-device performance and takes advantage of specific hardware acceleration?

ONNX Runtime

For optimizing machine learning models for on-device performance and taking advantage of specific hardware acceleration, ONNX Runtime is a great option. It can run code across multiple platforms and devices, including CPU, GPU, NPU and other accelerators. With multilanguage APIs and support for a wide range of hardware, ONNX Runtime speeds up machine learning work, and it's a good foundation for on-device training and inference to protect user privacy and personalize devices.

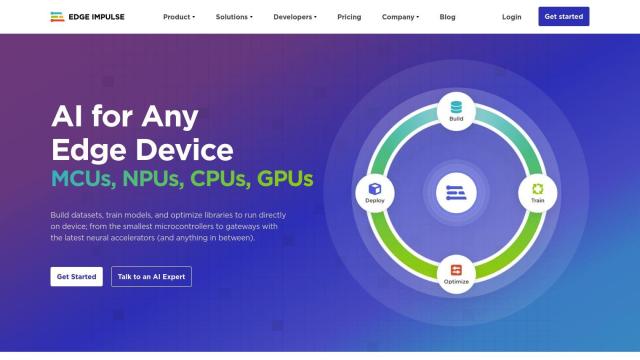

Edge Impulse

Another good option is Edge Impulse, which is geared for developing and running AI models directly on edge devices like MCUs, NPUs, CPUs, GPUs and sensors. The platform includes tools for data collection, model optimization and anomaly detection to help developers accelerate AI development and deployment on edge devices. It's integrated with a variety of ecosystems, so it can be used in a wide range of applications.

Coral

Coral is another option, particularly for industries that need AI processing that's fast, private and efficient. Coral offers on-device inferencing and supports common AI frameworks like TensorFlow Lite. Its products include development boards, accelerators and system-on-modules with balanced power and performance for tasks like object detection, pose estimation and more. It's a good option for addressing data privacy and latency concerns.

ZETIC.ai

For companies that want to build AI into mobile devices at low cost and high performance, ZETIC.ai is a good option. It runs on NPU-based hardware and includes an on-device AI runtime library that offers a fast and secure way to run AI without relying on cloud servers. The platform works with any operating system and processor, so it can be used in a wide range of devices.