Question: I need a way to integrate large language models into my project using Python or JavaScript. Can you suggest a suitable library or framework?

LlamaIndex

LlamaIndex is a general-purpose data framework for connecting your own data sources to large language models. It supports more than 160 data sources and 40 vector, document and SQL database providers, so it is likely to fit whatever storage you already use. It ships packages for both Python and TypeScript and includes tools for data loading, indexing, querying and performance evaluation. Typical use cases include financial services, smart document processing and conversational interfaces.
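To give a feel for the load, index and query flow described above, here is a minimal Python sketch. It assumes the `llama-index` package is installed and that an OpenAI API key is set in `OPENAI_API_KEY`, since LlamaIndex uses OpenAI models by default. The function name `answer_from_folder` and the folder path are made up for illustration.

```python
def answer_from_folder(folder: str, question: str) -> str:
    """Index every file in `folder` and answer `question` from that content."""
    # Imported inside the function so this module still loads on machines
    # where llama-index is not installed (assumption: `pip install llama-index`).
    from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

    documents = SimpleDirectoryReader(folder).load_data()  # data loading
    index = VectorStoreIndex.from_documents(documents)     # indexing (in memory)
    query_engine = index.as_query_engine()                 # querying
    return str(query_engine.query(question))
```

You would call it with something like `answer_from_folder("data", "What does the contract say about renewal?")`. For anything beyond a quick trial you would probably swap the in-memory index for one of the supported vector stores.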

Langfuse

Langfuse is an open-source LLM engineering platform focused on debugging, analysis and iteration. It provides tracing, prompt management, evaluation and analytics, integrates with services like OpenAI and LangChain, and captures the full context of each LLM invocation. Langfuse also holds security certifications and offers multiple pricing tiers, so it suits both individual developers and enterprise teams.

Ollama

If you want something simpler, Ollama is an open-source tool for running a variety of large language models locally on macOS, Linux and Windows. It comes with a model library that includes uncensored models and models tuned for specific tasks, and it has Python and JavaScript libraries for integration. Ollama is good for developers, researchers and AI-curious people who want to try out AI locally.
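As a rough sketch of what local integration can look like, the snippet below talks to Ollama's local HTTP API using only the Python standard library, rather than the official Python library mentioned above. It assumes an Ollama server is running on its default port (11434) and that the model you name has already been pulled. The helper names `build_generate_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local Ollama server and return the reply text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    return body["response"]  # the generated text in a non-streaming reply
```

With a model pulled (for example via `ollama pull llama3`), `generate("llama3", "Explain embeddings in one sentence.")` should return the model's answer as a string. The official `ollama` Python package wraps the same API and is probably the more convenient choice in a real project.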

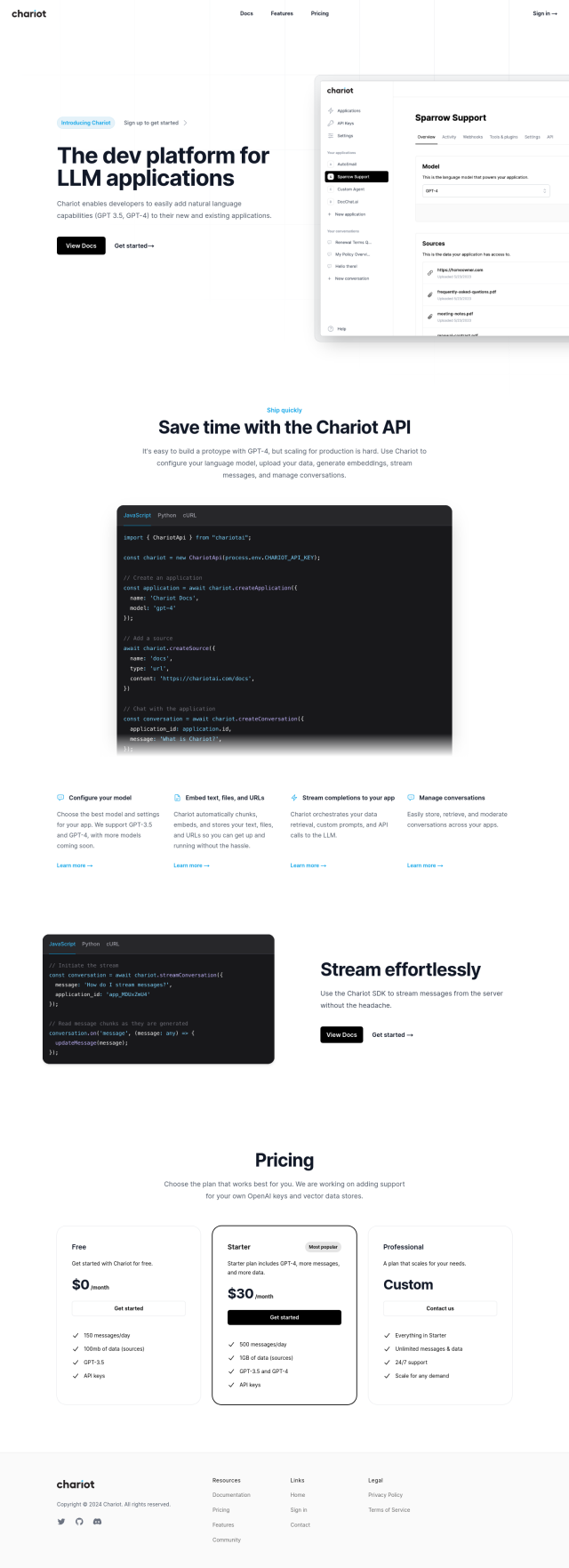

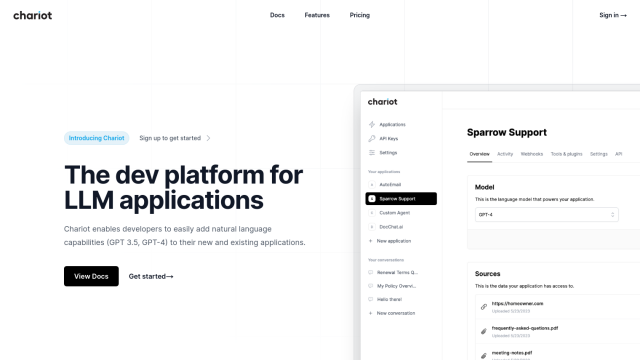

Chariot

Finally, Chariot makes it easier to add natural language abilities to your apps. It supports GPT-3.5 and GPT-4, and it has tools for configuring models, managing conversations and generating text embeddings. Chariot is currently available as an SDK for Node.js, with Python and .NET SDKs coming soon, so for now it fits JavaScript projects best.