Question: I need a flexible, scalable AI database that makes it easy to retrieve and filter data. What options do I have?

Vespa

Vespa is a mature platform for building production-ready search applications at any scale. It offers fast vector search, lexical search, and structured data search, and any of these can be combined with machine-learned models for ranking. It is designed for scalable, efficient model inference and integrates with a variety of machine learning frameworks. It also manages data elastically, redistributing it automatically as the cluster changes, to keep end-to-end performance high and latency low.
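
To make "combining" lexical and vector search concrete, here is a minimal pure-Python sketch of one common hybrid approach: a weighted sum of a lexical score and a vector-similarity score. It does not use Vespa, which configures ranking in its own schema files. The function names, the toy term-overlap scorer (a stand-in for a real lexical scorer such as BM25), and the `alpha` weight are all assumptions for illustration.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def lexical_score(query: str, text: str) -> float:
    """Toy lexical score: fraction of query terms that appear in the text.
    Hypothetical stand-in for a real scorer such as BM25."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(t in words for t in terms) / len(terms) if terms else 0.0

def hybrid_rank(query: str, query_vec: list[float], docs: list[dict], alpha: float = 0.5) -> list[str]:
    """Rank document ids by alpha * vector score + (1 - alpha) * lexical score."""
    scored = [
        (alpha * cosine(query_vec, d["vec"]) + (1 - alpha) * lexical_score(query, d["text"]), d["id"])
        for d in docs
    ]
    scored.sort(reverse=True)  # highest combined score first
    return [doc_id for _, doc_id in scored]

# Hypothetical example documents with 2-dimensional embeddings.
docs = [
    {"id": "a", "text": "fast vector search engine", "vec": [1.0, 0.0]},
    {"id": "b", "text": "relational database tuning", "vec": [0.0, 1.0]},
    {"id": "c", "text": "vector database for search", "vec": [0.7, 0.7]},
]
print(hybrid_rank("vector search", [1.0, 0.0], docs))  # → ['a', 'c', 'b']
```

Raising `alpha` toward 1.0 favors semantic similarity; lowering it favors exact keyword matches. Production systems usually normalize the two scores first, because raw lexical and vector scores live on different scales.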

Pinecone

Pinecone is another strong option: its serverless design is geared toward fast querying and retrieval of similar items across billions of records. It offers low-latency vector search, metadata filtering, real-time updates, and hybrid search. With a reported average query latency of 51 ms at 96% recall, Pinecone is highly scalable and economical, and it suits many use cases, including real-time ones.
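
A recall figure like the 96% above is typically measured as recall@k: run the same queries through the approximate index and through an exact brute-force search, then count how many of the true top-k neighbours the approximate search also returned. The sketch below shows that calculation in plain Python. The function names are hypothetical, and it says nothing about how Pinecone itself arrived at its figure.

```python
def recall_at_k(approx_ids: list[str], exact_ids: list[str], k: int) -> float:
    """Fraction of the exact top-k neighbours that also appear in the approximate top-k."""
    truth = set(exact_ids[:k])
    if k <= 0 or not truth:
        raise ValueError("k must be positive and exact_ids non-empty")
    return len(truth & set(approx_ids[:k])) / len(truth)

def mean_recall_at_k(results: list[tuple[list[str], list[str]]], k: int) -> float:
    """Average recall@k over (approx_ids, exact_ids) pairs, one pair per query."""
    if not results:
        raise ValueError("results must be non-empty")
    return sum(recall_at_k(a, e, k) for a, e in results) / len(results)

# One query: 3 of the 4 true neighbours were found.
print(recall_at_k(["d1", "d2", "d7", "d4"], ["d1", "d2", "d3", "d4"], k=4))  # → 0.75
# Two queries with recall 1.0 and 0.5.
print(mean_recall_at_k([(["a", "b"], ["a", "b"]), (["a", "c"], ["a", "b"])], k=2))  # → 0.75
```

Recall and latency trade off against each other in approximate indexes, so a latency number is only meaningful alongside the recall it was measured at.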

Milvus

Milvus is an open-source vector database built for high-dimensional vector search. Its deployment options range from the lightweight Milvus Lite for prototyping to Milvus Distributed for large-scale production. It supports metadata filtering, hybrid search, and multi-vector search, and it scales elastically to tens of billions of vectors. It's well suited to AI use cases such as image search and recommender systems.
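
Metadata filtering restricts a similarity search to records whose attributes match a condition. The sketch below illustrates the semantics with a brute-force, pre-filtered nearest-neighbour search in plain Python. Milvus does this through its own client API and indexes, which this sketch does not use; the record layout, the `filtered_search` name, and the predicate-as-function interface are assumptions for illustration.

```python
import heapq
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def filtered_search(query_vec, records, predicate, k=2):
    """Return ids of the k most similar records whose metadata passes predicate.
    Brute force: every passing record is scored, so this only suits small data."""
    candidates = (
        (cosine(query_vec, r["vec"]), r["id"])
        for r in records
        if predicate(r["meta"])  # filter on metadata before ranking by similarity
    )
    return [rid for _, rid in heapq.nlargest(k, candidates)]

# Hypothetical records: an embedding plus filterable metadata.
records = [
    {"id": "img1", "vec": [0.9, 0.1], "meta": {"kind": "image", "year": 2023}},
    {"id": "img2", "vec": [0.8, 0.3], "meta": {"kind": "image", "year": 2021}},
    {"id": "txt1", "vec": [1.0, 0.0], "meta": {"kind": "text", "year": 2023}},
]
print(filtered_search([1.0, 0.0], records, lambda m: m["kind"] == "image"))
# → ['img1', 'img2']  (txt1 is the closest vector but is filtered out)
print(filtered_search([1.0, 0.0], records, lambda m: m["kind"] == "image" and m["year"] >= 2022))
# → ['img1']
```

A real vector database replaces the linear scan with an approximate index, and how it combines that index with the filter affects both speed and recall.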

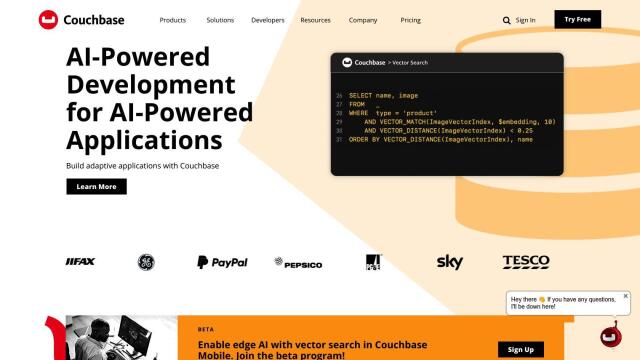

Couchbase

Also worth considering is Couchbase, a flexible NoSQL cloud database platform that supports a broad range of data access patterns, including vector search. It pairs a high-performance, memory-first architecture with AI-assisted coding, which makes it a solid foundation for AI-powered applications. Its distributed architecture is designed for modern, user-centric applications, and it integrates with the leading public cloud providers.