Question: How can I build a web scraper that can handle website changes without constant manual updates?

Kadoa

If you want a web scraper that adapts to website changes without manual updates, Kadoa is a good option. This AI-based, no-code platform extracts, transforms and integrates data from multiple sources. It detects changes in data sources automatically, so your workflows keep working without manual intervention. Other features include enterprise-level scalability, integration with other tools through an API and prebuilt connectors, and support for use cases in finance, e-commerce and media monitoring.
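If you build your own scraper instead, one simple way to notice when a site's layout has changed is to fingerprint its HTML skeleton. This is a generic technique, not Kadoa's method. The minimal sketch below assumes you store the previous HTML snapshot yourself. It uses only Python's standard library, and the function names `layout_fingerprint` and `layout_changed` are hypothetical. It hashes the sequence of tags and their classes while ignoring text, so ordinary content updates leave the fingerprint unchanged but structural changes do not.

```python
import hashlib
from html.parser import HTMLParser


class _TagSequence(HTMLParser):
    """Collects start tags (with their sorted class names) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = dict(attrs).get("class") or ""
        self.tokens.append(f"{tag}.{'.'.join(sorted(classes.split()))}")


def layout_fingerprint(html: str) -> str:
    """Hypothetical helper: hash the tag/class skeleton of a page, ignoring text."""
    parser = _TagSequence()
    parser.feed(html)
    parser.close()
    skeleton = "\n".join(parser.tokens)
    return hashlib.sha256(skeleton.encode("utf-8")).hexdigest()


def layout_changed(old_html: str, new_html: str) -> bool:
    """Hypothetical helper: True if the structure differs between two snapshots."""
    return layout_fingerprint(old_html) != layout_fingerprint(new_html)
```

For example, changing a price from `$10` to `$12` inside `<div class="price">` leaves the fingerprint unchanged, while replacing that element with `<span class="cost">` changes it. A changed fingerprint is a signal to re-check your selectors before bad data reaches downstream systems.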

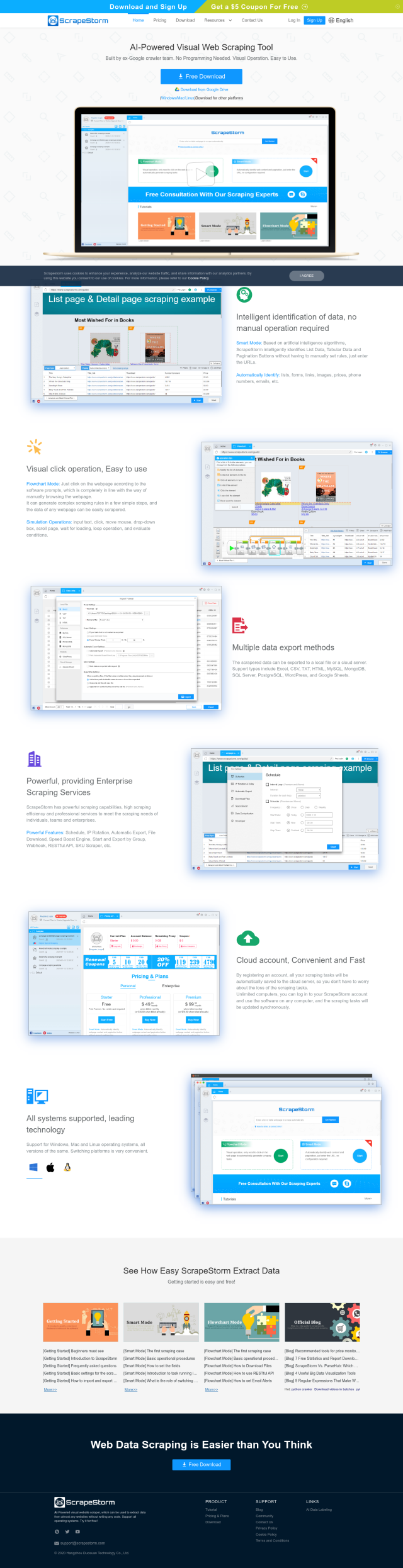

ScrapeStorm

Another option is ScrapeStorm, an AI-powered visual web scraper that requires no programming. It uses AI to identify and extract data automatically and can export results in multiple formats. Smart Mode automates data extraction, Flowchart Mode handles more complex scraping rules, and IP Rotation helps avoid IP address blocking. ScrapeStorm offers pricing plans for both individuals and businesses.

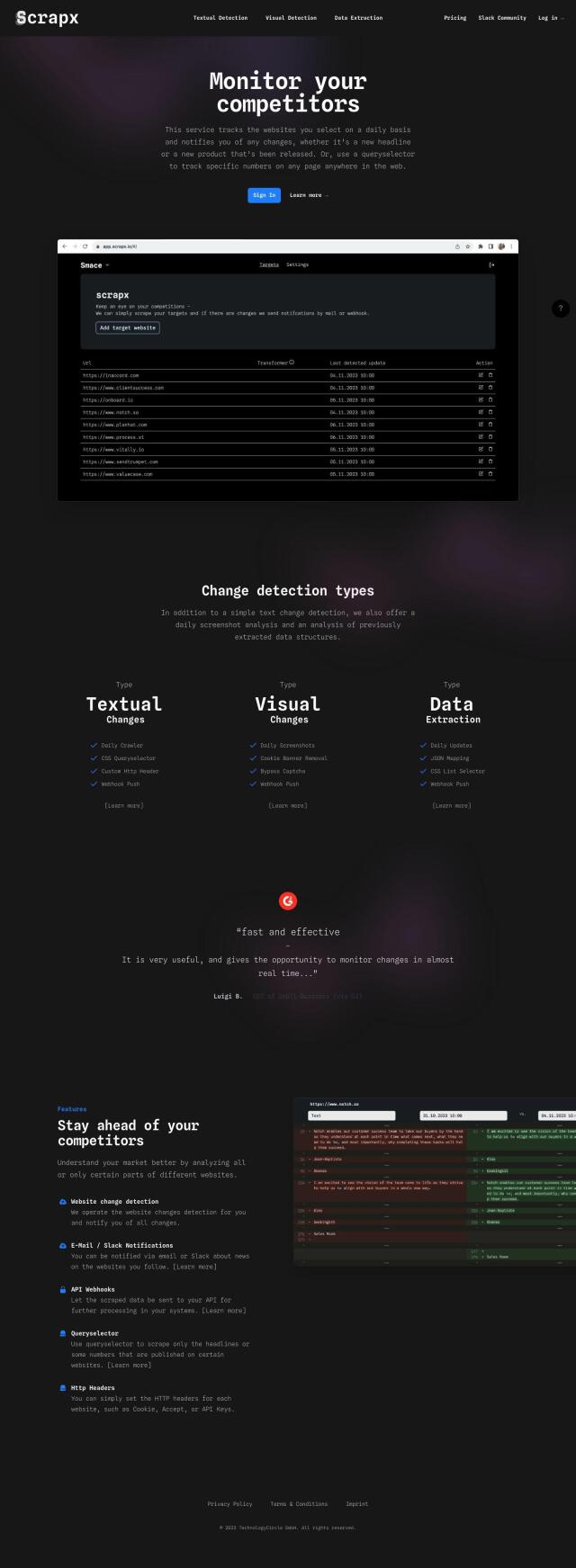

Scrapx

If you need stronger website monitoring and notifications, consider Scrapx, which scrapes websites daily and checks specific sites for changes. It can send notifications by email or Slack, and it lets you customize HTTP headers and API webhooks to integrate with your own systems. Its textual and visual change detection can be useful for competitor analysis, content strategy and market research.
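If you prefer to roll your own textual change detection with webhook alerts, the general pattern looks roughly like the sketch below. It is not Scrapx's implementation. The sketch assumes you already have the previous and current extracted text. It uses the standard-library `difflib` to compute a unified diff and builds, but does not send, a JSON POST request. The helper names and the example URLs are hypothetical. The `{"text": ...}` body is the shape Slack incoming webhooks accept; other receivers may expect a different payload.

```python
import difflib
import json
import urllib.request


def text_diff(old_text: str, new_text: str) -> list[str]:
    """Hypothetical helper: unified-diff lines between two snapshots (empty if unchanged)."""
    return list(
        difflib.unified_diff(
            old_text.splitlines(),
            new_text.splitlines(),
            fromfile="previous",
            tofile="current",
            lineterm="",
        )
    )


def build_change_alert(webhook_url: str, page_url: str, diff: list[str]) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a JSON POST describing the change."""
    body = json.dumps({"text": f"Change detected on {page_url}:\n" + "\n".join(diff)})
    return urllib.request.Request(
        webhook_url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    diff = text_diff("Price: $10\nIn stock", "Price: $12\nIn stock")
    if diff:
        req = build_change_alert(
            "https://hooks.example.com/hypothetical",  # placeholder webhook URL
            "https://example.com/product",  # placeholder monitored page
            diff,
        )
        # urllib.request.urlopen(req)  # uncomment to actually send the alert
        print(req.data.decode("utf-8"))
```

Keeping the diff computation separate from the request builder makes it easy to swap the Slack-style payload for email or another webhook format.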

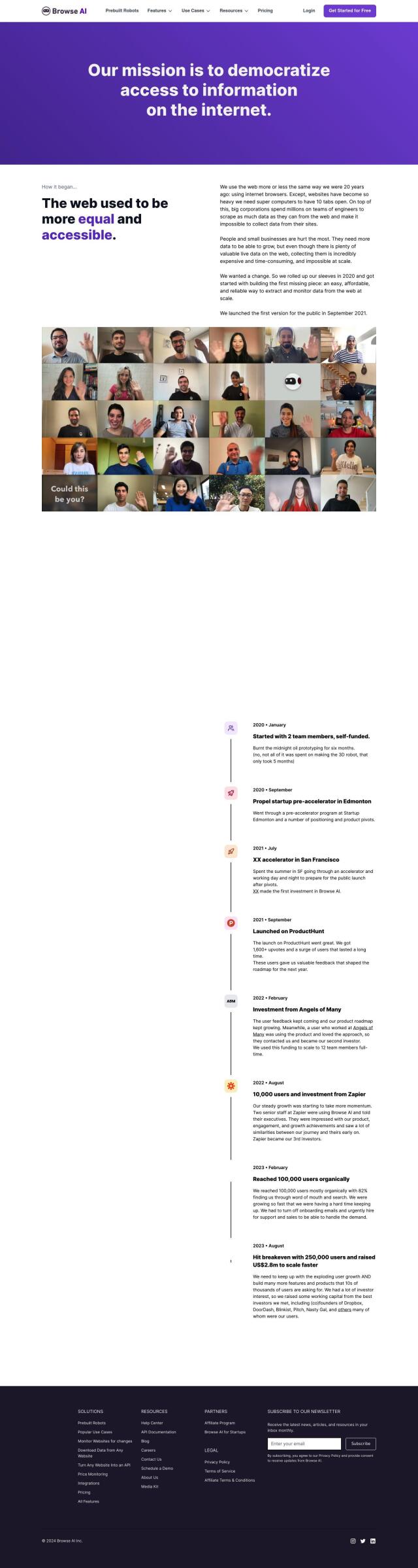

Browse AI

Finally, you could try Browse AI, a tool that extracts specific data from websites without programming. It comes with prebuilt robots for common tasks such as scraping company information and product data, and it can run jobs on a schedule and alert you when changes occur. Because it supports multiple data sources and formats, Browse AI can help you get the information you need without constantly updating your setup.