Question: I'm looking for a solution that enables me to build large language models into my own application without setting up complex infrastructure.

ThirdAI

If you want to incorporate large language models into your application without a lot of infrastructure hassle, ThirdAI is a good option. It lets companies use large language models even if they don't have specialized hardware, like GPUs or TPUs, to run them. The service has modules for document intelligence, customer experience and generative AI, and has shown strong results on some benchmarks. It also offers tiered pricing plans for different usage levels and budgets.

Predibase

Another option is Predibase, which lets developers fine-tune and serve large language models. It supports many models and offers low-cost serving infrastructure, including free serverless inference for up to 1 million tokens per day. Predibase also offers enterprise-level security and multiple deployment options, so it suits companies of any size.

Instill

If you prefer a no-code option, check out Instill. This service makes it easier to deploy and manage AI models so teams can concentrate on iterating on use cases instead of infrastructure. Instill supports a variety of AI tasks, including speech responses and webpage summarization, and offers a drag-and-drop interface for building custom pipelines. Its tiered pricing plans cover different levels of usage and support.

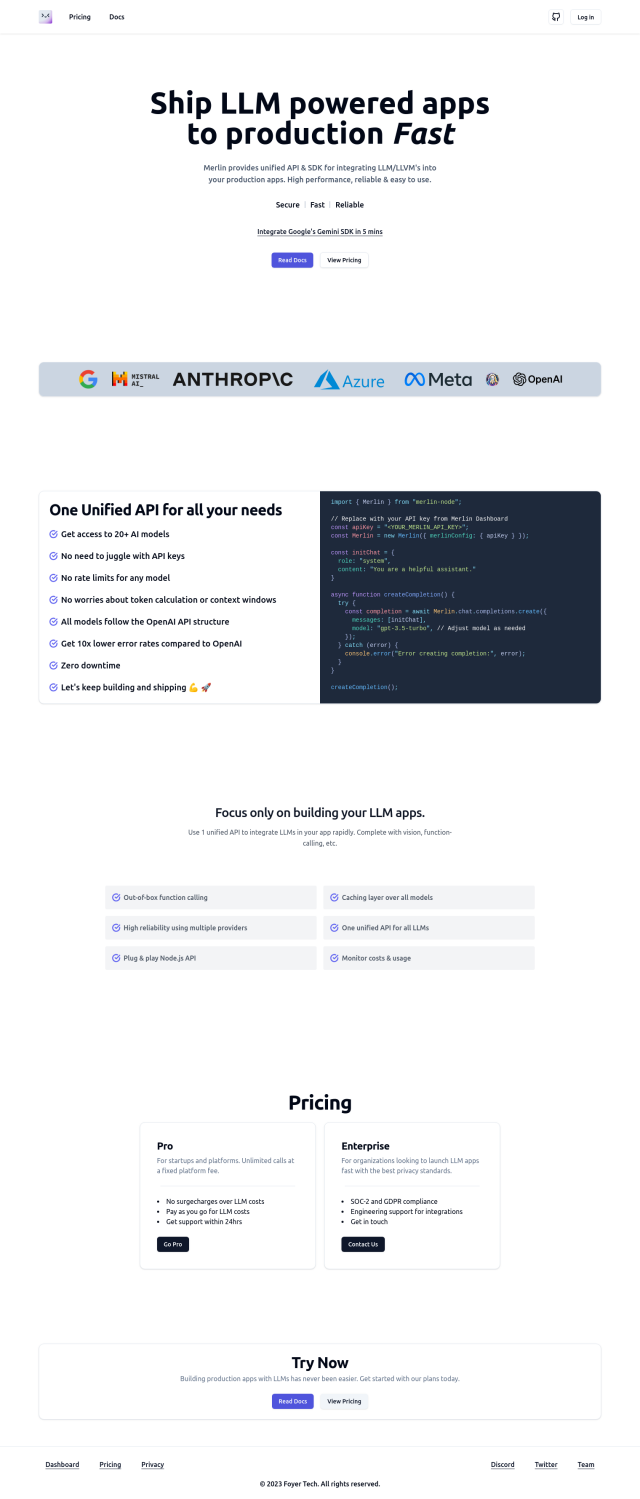

Merlin API

Last, Merlin API is a relatively simple way to get large language models into your apps. It's designed to be easy to use, with a quick onboarding process and minimal coding. It's good for developers and businesses that want to add advanced language processing capabilities to their apps without a lot of infrastructure investment.