Question: Can you recommend a tool that accelerates the AI product development workflow, from testing to analytics?

Autoblocks

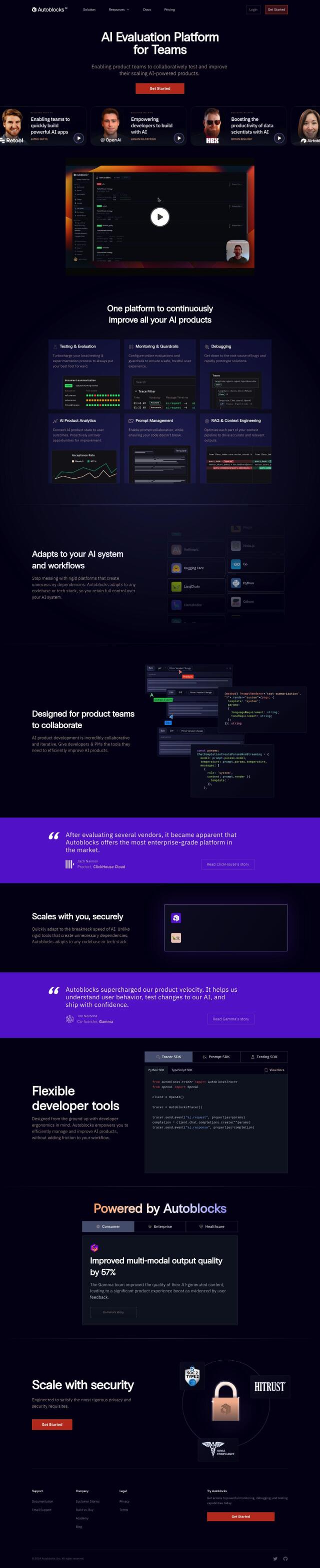

If you're looking for a tool to speed up the AI product development workflow from testing to analytics, Autoblocks is a strong contender as an all-in-one platform. It offers fast local testing, online testing, debugging tools and AI product analytics, and it integrates with LangChain and OpenAI. Autoblocks is geared toward collaborative development, with fast iteration and secure scaling, and it suits a broad range of use cases.

Athina

Another strong contender is Athina, an all-in-one platform for GenAI teams. It covers experimentation, real-time monitoring, cost tracking and customizable alerts. With features like LLM Observability, Experimentation, Analytics and Role-Based Access Controls, Athina lets teams test new prompts systematically, monitor output quality and deploy with confidence. Its tiered pricing is designed to accommodate teams of all sizes.

Freeplay

Freeplay is also worth a look for speeding up development. Its tools include automated batch testing, AI auto-evaluations, human labeling and data analysis. It gives teams a unified interface and offers deployment options that address compliance requirements, making it a good fit for enterprise teams trying to move beyond laborious manual processes.

Humanloop

If you prefer a collaborative approach, Humanloop is a platform for managing and optimizing LLM applications. It includes a collaborative prompt management interface, an evaluation and monitoring tool, and interfaces for connecting private data and fine-tuning models. With support for common LLM providers and integration through Python or TypeScript SDKs, Humanloop is a good fit for product teams and developers trying to improve efficiency and AI reliability.