Question: Can you recommend a platform for fast and efficient AI model training, especially for large language models?

Cerebras

If you need a platform for training AI models, particularly large language models, Cerebras is a strong choice. It offers AI supercomputers, model services and cloud services built around its wafer-scale engine (WSE-3) processor, which has 900,000 AI processing cores. Because so much compute sits on one chip, a single system can match the performance of a cluster of conventional servers, which speeds up large language model training. Cerebras also offers custom generative AI work, cloud access for fast model training, and a pay-by-the-model computing service.

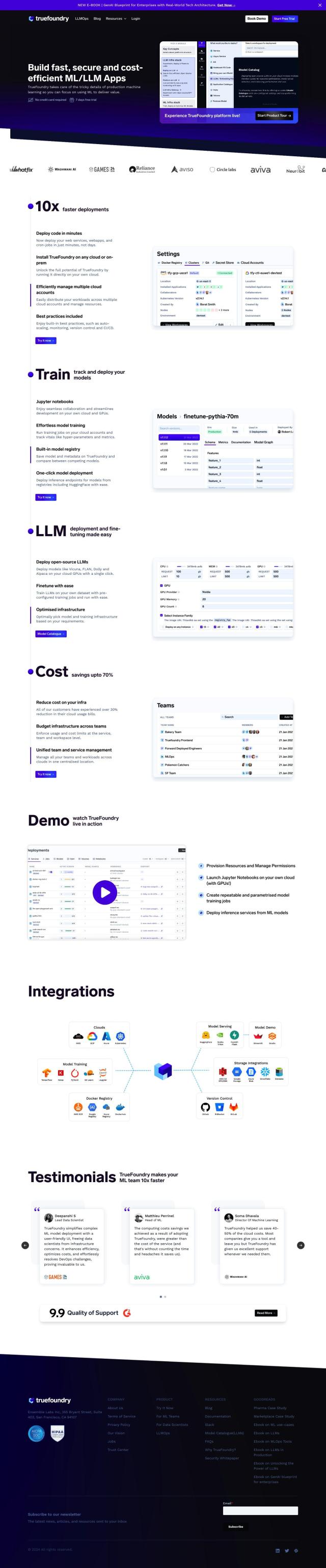

TrueFoundry

Another good option is TrueFoundry. It uses Kubernetes to speed up machine learning and large language model work while cutting deployment time and costs. It runs in the cloud or on premises and offers a model registry, one-click model deployment, cost optimization, and a single management layer for complex ML and LLM workflows. That makes it a good fit for teams and companies that want to make AI model training and deployment more efficient.

Together

Together is another option. It's a cloud platform built for fast, efficient development and deployment of generative AI models, with optimizations such as Cocktail SGD and FlashAttention 2 to speed up training and inference. Together supports a variety of models and offers scalable inference plus collaborative tools for fine-tuning and model testing. It advertises large cost savings compared with other cloud providers, which makes it worth considering for enterprises.

Predibase

If you need a platform to fine-tune and serve large language models, consider Predibase. It lets developers fine-tune open-source LLMs for specific tasks using techniques such as quantization and low-rank adaptation (LoRA). Predibase provides cost-effective serving infrastructure and supports a variety of models. Pricing is pay-as-you-go, based on model size and the dataset used, and the platform is aimed at large-scale, secure LLM deployment.