Question: I need an AI model that prioritizes safety and responsibility in its responses. Can you recommend one?

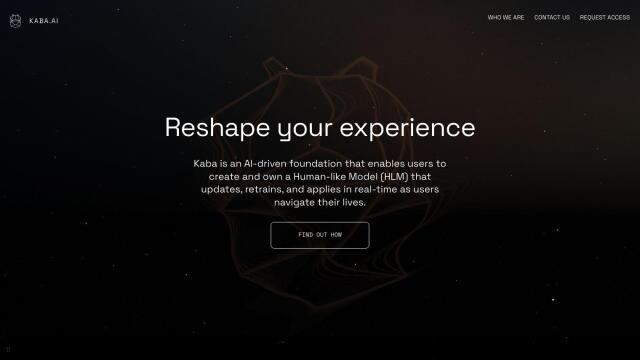

GOODY-2

If safety and responsibility are your top priorities, GOODY-2 is a strong option. The model is trained to recognize potentially controversial or offensive questions and sidestep them, keeping conversations safe and respectful. Its makers pitch it as enterprise-ready for customer service, paralegal work and back-office operations, and report a score of 99.8% on the PRUDE-QA benchmark.

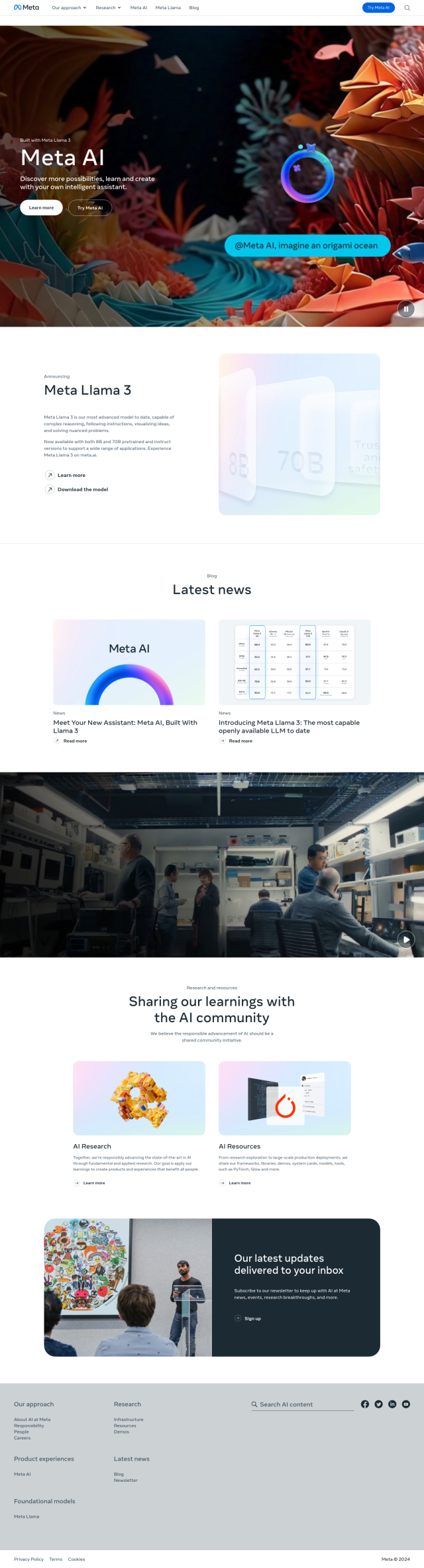

Meta Llama

Another good option is Meta Llama, an open-source project whose trust and safety tooling includes Meta Llama Guard. The project is designed to encourage responsible use and ships with a Responsible Use Guide, and it is suitable for both research and commercial use. Its community-driven approach encourages collaboration and innovation while emphasizing safety and responsible AI development.

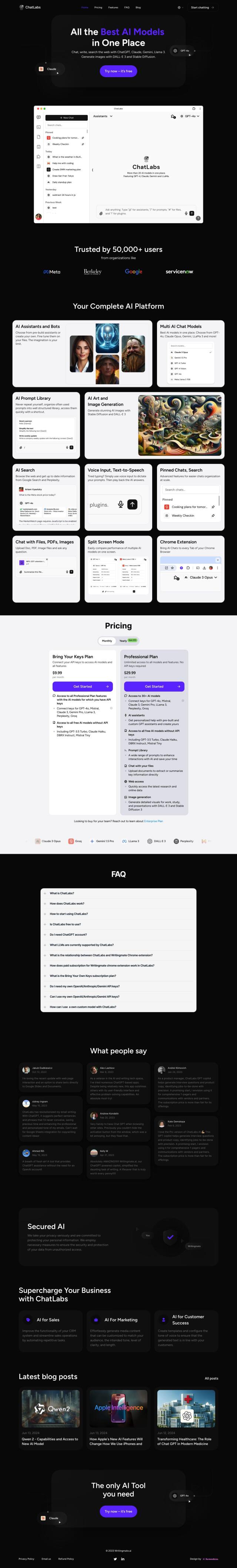

Anthropic

If you want an AI assistant that pairs safety with more sophisticated capabilities, check out Anthropic's Claude. It offers advanced reasoning, multilingual processing and code generation, and it is built with a focus on safety and reliability. The platform also provides enterprise-level security and compliance, making it a good option for businesses that want to use AI responsibly.

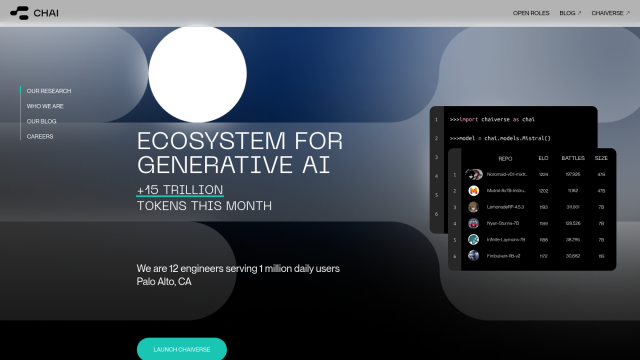

Chai AI

Finally, Chai AI takes a community-driven approach to developing advanced language models. It focuses on model safety and invites users to share feedback that helps evaluate model performance. With a large user base and a mechanism for developers to submit and train models, Chai AI fosters innovation while keeping data safe and promoting responsible AI practices.