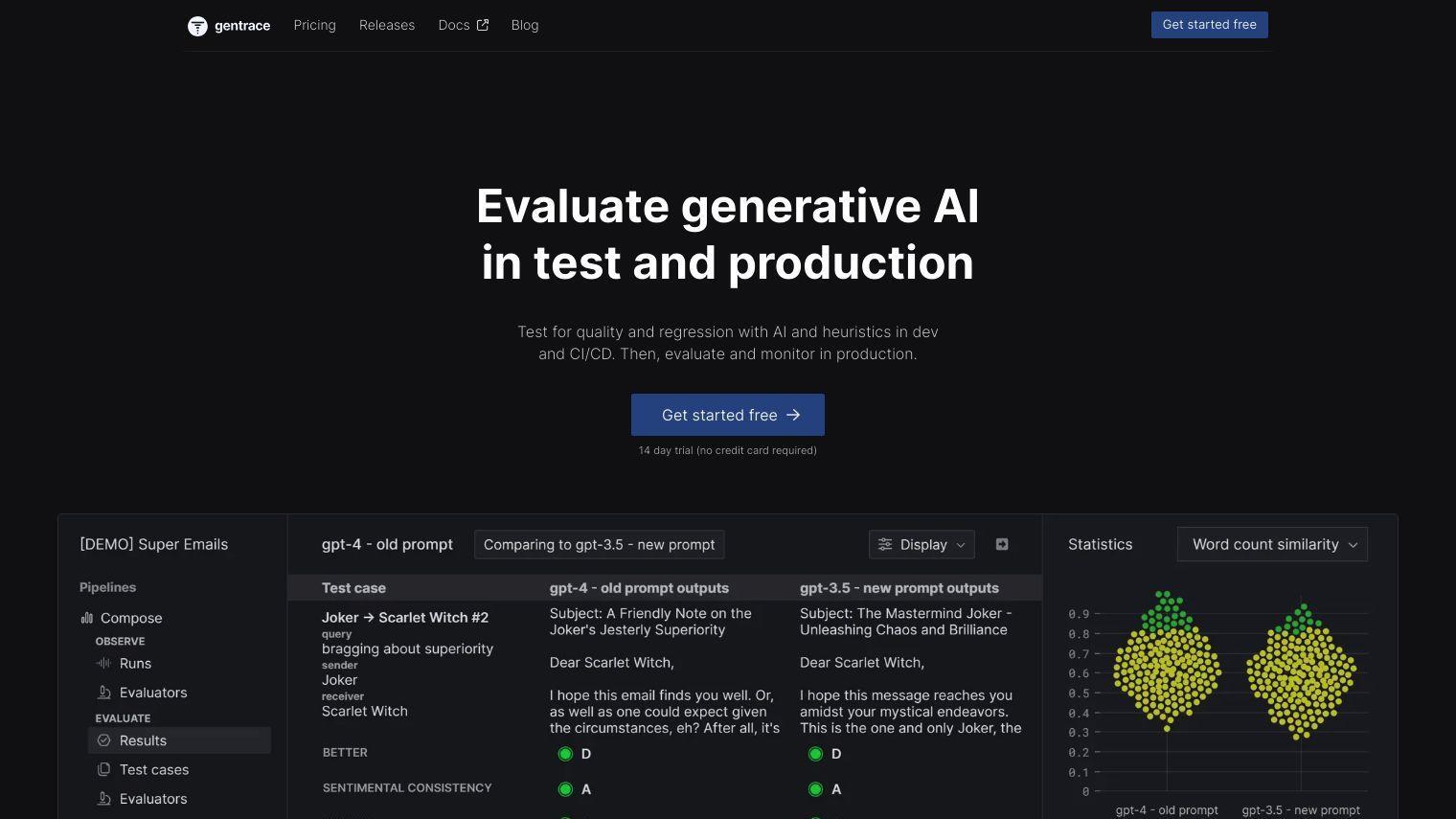

Gentrace is a tool for evaluating and monitoring the quality of generative AI output in both test and production environments. It combines AI, heuristic and human evaluators to catch regressions and hallucinations in your pipeline. This lets you automate grading during testing and monitor runs in production.

Some of the features of Gentrace include:

- Automated Grading: Evaluates and grades AI output in the testing phase using AI, heuristics and human evaluators.

- Factualness Evaluation: Assesses similarity and conciseness of the output.

- Pipeline Run Monitoring: Tracks the speed and cost of production runs.

- Agent and Chain Tracing: Offers visibility into agent and chain traces in test and production environments.

- Processor Functions: Lets you transform and simplify trace data for evaluation.
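To make the processor and heuristic-grading ideas more concrete, here is a minimal, framework-agnostic Python sketch. It does **not** use Gentrace's SDK. The trace shape, the `process_trace` and `heuristic_grade` functions, and the specific checks are all hypothetical, chosen only for illustration. The processor reduces a nested trace to the two fields an evaluator needs and redacts email addresses. The evaluator then applies a few cheap pass/fail heuristics.

```python
import re
from typing import Any

# Hypothetical email pattern; real PII scrubbing would need more rules.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def process_trace(trace: dict[str, Any]) -> dict[str, str]:
    """Hypothetical processor: reduce a nested trace to the fields an evaluator needs.

    Assumes `trace` looks like {"input": {"query": str}, "steps": [{"output": str}, ...]}.
    This is an illustrative shape, not Gentrace's actual trace format.
    """
    steps = trace.get("steps", [])
    # Treat the last step's output as the pipeline's final answer.
    final_output = steps[-1]["output"] if steps else ""
    return {
        "query": trace["input"]["query"],
        # Scrub email addresses before the output reaches any evaluator.
        "output": EMAIL_RE.sub("[REDACTED]", final_output),
    }


def heuristic_grade(processed: dict[str, str], max_words: int = 50) -> dict[str, bool]:
    """Hypothetical heuristic evaluator: simple pass/fail checks on processed output."""
    output = processed["output"]
    return {
        "non_empty": bool(output.strip()),
        "concise": len(output.split()) <= max_words,
        "no_email_leak": EMAIL_RE.search(output) is None,
    }


if __name__ == "__main__":
    trace = {
        "input": {"query": "Best brunch spots in San Francisco?"},
        "steps": [
            {"name": "retrieve", "output": "3 candidate restaurants"},
            {"name": "generate", "output": "Try Cafe Example. Questions? Email a@b.com"},
        ],
    }
    processed = process_trace(trace)
    print(processed["output"])  # Try Cafe Example. Questions? Email [REDACTED]
    print(heuristic_grade(processed))  # {'non_empty': True, 'concise': True, 'no_email_leak': True}
```

Keeping the processor separate from the evaluator means the same cleaned-up fields can feed several graders, whether heuristic, AI-based or human.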

Gentrace can be used for a variety of tasks, including assessing how a pipeline responds to user queries. For example, you can use it to evaluate a pipeline that recommends the best brunch spots in San Francisco based on a user's query. You can also use it to monitor and grade production runs based on end-user feedback.

Gentrace has a tiered pricing structure, with both Standard and Enterprise options. The Standard option comes with a 14-day free trial and supports unlimited users, while the Enterprise option offers customized trials, compliance reporting and priority support.

Gentrace is in open beta, so pricing is subject to change. Customers can contact the sales team about custom agreements and on-premises hosting.

Gentrace provides extensive documentation to help you get started, including instructions for monitoring OpenAI calls and adding processors that scrub output data. The documentation covers workflows, core concepts, the API and the SDKs for evaluating generative AI.

Published on June 14, 2024
