Question: I need a tool to evaluate the quality of my generative AI models in production, can you suggest something?

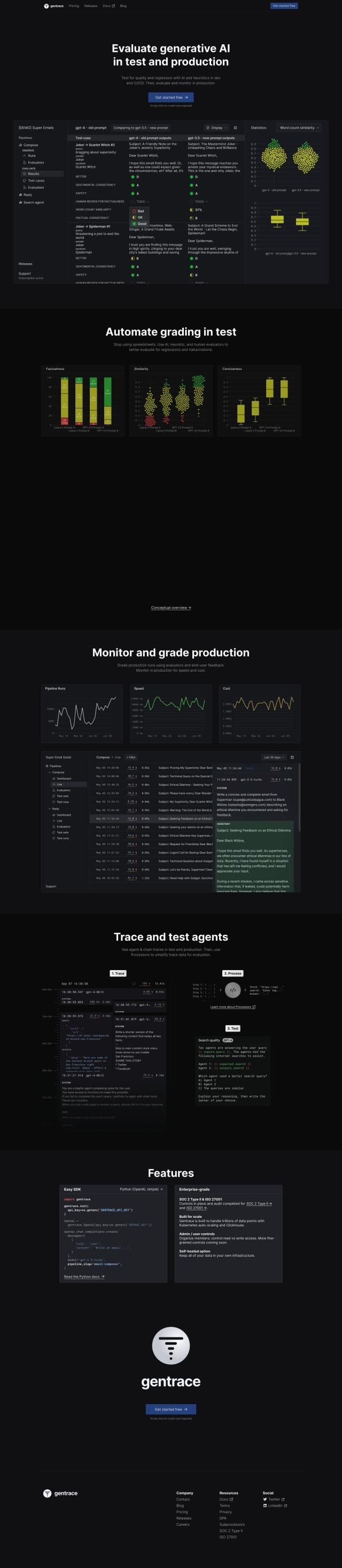

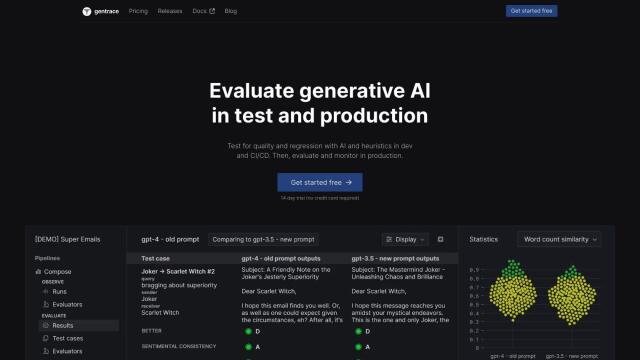

Gentrace

If you're looking for a service to test the quality of your generative AI models in production, Gentrace is a good option. It combines AI, heuristic and human evaluators to spot regressions and hallucinations, with features like automated grading, factualness scoring and monitoring of pipeline runs. It's well suited to testing against real user queries and monitoring production runs alongside end-user feedback, and its flexible pricing and 14-day free trial make it easy to try out.

HoneyHive

Another good option is HoneyHive, an evaluation, testing and observability service for mission-critical AI applications. It gives teams a single environment for collaboration, testing and evaluation, with features like automated CI testing, production pipeline monitoring and distributed tracing. HoneyHive supports more than 100 models, and its free Developer plan makes it accessible to solo developers and researchers as well as larger teams.

Deepchecks

Deepchecks is geared toward automating the testing of Large Language Model (LLM) apps, spotting problems like hallucinations and bias. It uses a "Golden Set" approach that combines automated annotation with manual overrides to validate LLM apps. Plans range from a free tier to dedicated deployments, which makes it a fit for developers and teams who want to build reliable, high-quality LLM-based software.

LastMile AI

Finally, LastMile AI is a full-stack platform for bringing generative AI applications to production with confidence. Its features include Auto-Eval for automated hallucination detection, a RAG Debugger for diagnosing and improving retrieval-augmented generation pipelines, and AIConfig for version control and prompt optimization. The service supports a variety of AI models and comes with extensive documentation and support, which makes it easier to get AI applications into production.