Question: I need a solution to store and analyze large language model requests. Can you suggest a tool?

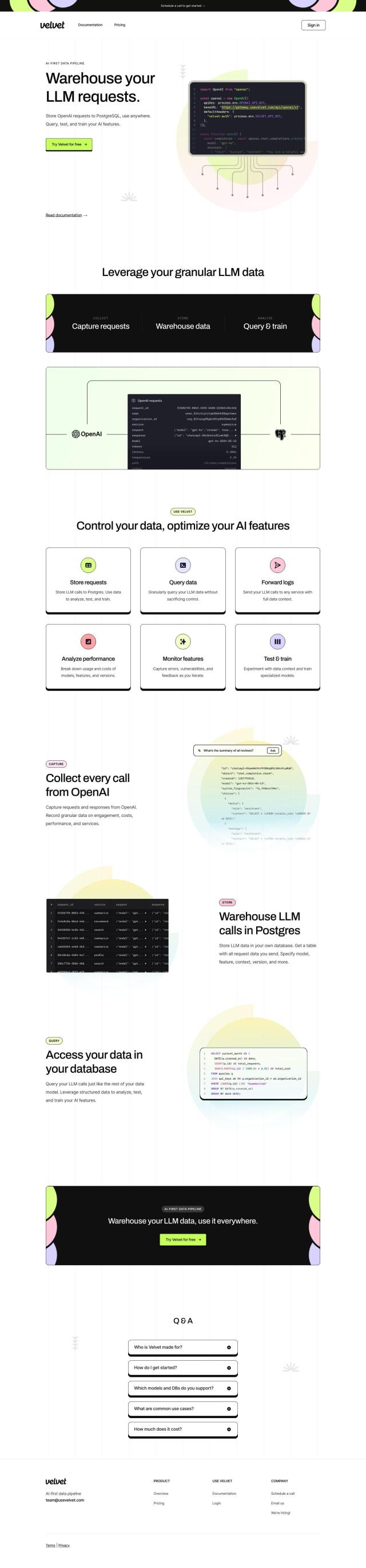

Velvet

If you're looking for a way to store and analyze large language model requests, Velvet is a good choice. It's an AI-first data pipeline for software engineers that captures large language model (LLM) requests and stores them in PostgreSQL. Once the requests are in your database, you can query them, use them for testing and training, forward logs, monitor performance and optimize model usage. Velvet works with OpenAI and PostgreSQL, and has pricing tiers for individual contributors, early-stage startups and high-growth startups.
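To make the idea of storing requests in a SQL table and querying them more concrete, here is a minimal sketch of the general pattern. It is not Velvet's API or schema: the table `llm_requests`, its columns, and the helpers `open_store`, `log_request` and `usage_by_model` are all hypothetical, and it uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so it runs without any setup.

```python
import json
import sqlite3

# Hypothetical schema for illustration only (not Velvet's actual schema).
SCHEMA = """
CREATE TABLE IF NOT EXISTS llm_requests (
    id            INTEGER PRIMARY KEY AUTOINCREMENT,
    model         TEXT    NOT NULL,
    request_json  TEXT    NOT NULL,
    response_json TEXT    NOT NULL,
    total_tokens  INTEGER NOT NULL,
    latency_ms    REAL    NOT NULL
)
"""


def open_store(path=":memory:"):
    """Open a database (SQLite here, standing in for PostgreSQL) and create the table."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def log_request(conn, model, request, response, total_tokens, latency_ms):
    """Store one LLM request/response pair as JSON text and return its row id."""
    cur = conn.execute(
        "INSERT INTO llm_requests "
        "(model, request_json, response_json, total_tokens, latency_ms) "
        "VALUES (?, ?, ?, ?, ?)",
        (model, json.dumps(request), json.dumps(response), total_tokens, latency_ms),
    )
    conn.commit()
    return cur.lastrowid


def usage_by_model(conn):
    """Aggregate request count, token usage and average latency per model."""
    rows = conn.execute(
        "SELECT model, COUNT(*), SUM(total_tokens), AVG(latency_ms) "
        "FROM llm_requests GROUP BY model ORDER BY model"
    ).fetchall()
    return {
        model: {"requests": n, "total_tokens": tokens, "avg_latency_ms": avg}
        for model, n, tokens, avg in rows
    }
```

With a table like this, questions such as "which model uses the most tokens?" become ordinary SQL queries. A hosted tool adds the capture, forwarding and monitoring parts on top, so you don't have to write the logging calls yourself.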

Langfuse

Another powerful option is Langfuse, an open-source LLM engineering platform for debugging, analyzing and iterating on LLM software. Its features include tracing, prompt management, evaluation and analytics. Langfuse integrates with OpenAI, Langchain and LlamaIndex, and holds security certifications like SOC 2 Type II and ISO 27001. Pricing starts with a free Hobby plan, so you can try it before committing to a paid tier.

Baseplate

If you need to embed data, store it and manage versions, Baseplate is worth a look. It combines multiple data types into a single hybrid database, letting you get multimodal LLM responses. Baseplate's features are arranged into four phases: Connect, Configure, Build, and Deploy, which makes it easier to manage data for LLM software and high-performance retrieval pipelines.

Humanloop

Finally, Humanloop is a collaborative platform for managing and optimizing LLM software. It's designed to address problems like suboptimal workflows and manual evaluation, with a collaborative prompt management system, an evaluation suite and customization tools. Humanloop supports common LLM providers and offers SDKs for integration, making it a good option for product teams and developers looking to improve productivity and collaboration.