Question: I'm looking for a way to simplify my large language model applications by accessing multiple providers through a single API key.

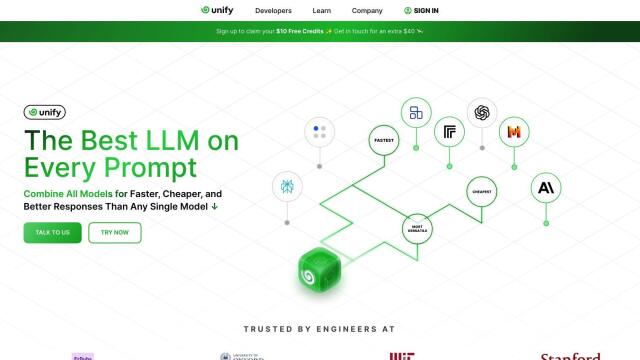

Unify

If you want to reduce the complexity of your large language model applications by using a single API key to access multiple providers, Unify is a good choice. The service optimizes your LLM apps by sending each prompt to the best available endpoint across multiple providers. It combines a unified API, customizable routing based on cost, latency and output-speed constraints, and live benchmarks that identify the fastest provider. That can mean better accuracy, more flexibility and more efficient resource use, with a credits system under which you pay only what the endpoint providers charge.
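To make constraint-based routing a little more concrete, here is a minimal sketch of how a router might pick an endpoint from benchmark numbers. It is not Unify's API or its actual algorithm: the `EndpointStats` fields, the `route` function, the provider names and all the numbers are hypothetical, and the selection rule (highest output speed among endpoints that meet every constraint) is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EndpointStats:
    """Hypothetical benchmark snapshot for one provider endpoint."""
    name: str
    cost_per_1k_tokens: float  # USD per 1,000 tokens (illustrative)
    latency_s: float           # time to first token, in seconds
    tokens_per_s: float        # output speed


def route(endpoints: List[EndpointStats], max_cost: Optional[float] = None,
          max_latency: Optional[float] = None,
          min_speed: Optional[float] = None) -> EndpointStats:
    """Return the fastest endpoint that satisfies every constraint given."""
    eligible = [
        e for e in endpoints
        if (max_cost is None or e.cost_per_1k_tokens <= max_cost)
        and (max_latency is None or e.latency_s <= max_latency)
        and (min_speed is None or e.tokens_per_s >= min_speed)
    ]
    if not eligible:
        raise LookupError("no endpoint satisfies the constraints")
    # Prefer the highest output speed; break ties with lower latency.
    return max(eligible, key=lambda e: (e.tokens_per_s, -e.latency_s))


# Made-up benchmark numbers for three providers serving the same model.
endpoints = [
    EndpointStats("provider-a/model-x", 0.50, 0.30, 80.0),
    EndpointStats("provider-b/model-x", 0.20, 0.60, 120.0),
    EndpointStats("provider-c/model-x", 0.90, 0.15, 150.0),
]
print(route(endpoints, max_cost=0.6).name)                   # provider-b/model-x
print(route(endpoints, max_cost=0.6, max_latency=0.4).name)  # provider-a/model-x
```

Tightening the constraints changes the pick: a cost cap alone favors the cheap, fast `provider-b`, while adding a latency cap leaves only `provider-a`. In practice the numbers would come from continuously refreshed benchmarks rather than a hard-coded list.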

Kolank

Another good option is Kolank, which provides a single API and a browser interface for querying multiple LLMs without obtaining, and paying for, separate access to each one. Kolank's routing algorithm evaluates each query to determine which model will return the best response in the shortest time, minimizing latency and improving reliability. The service also adds resilience by rerouting queries when a model is down or slow, which makes it a good option for developers who want to use multiple LLMs without much hassle.
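Rerouting around a model that is down or slow can be sketched as an ordered fallback with a per-call timeout. This is a generic illustration, not Kolank's routing algorithm: it assumes each model is exposed as a hypothetical `(name, call)` pair where `call(prompt)` returns a string, and the model names and stub functions are placeholders for real API clients.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, Sequence, Tuple

# Hypothetical model interface: a display name plus a function prompt -> reply.
Model = Tuple[str, Callable[[str], str]]


def query_with_fallback(prompt: str, models: Sequence[Model],
                        timeout_s: float = 5.0) -> Tuple[str, str]:
    """Try models in preference order, rerouting past any that fail or time out."""
    executor = ThreadPoolExecutor(max_workers=max(len(models), 1))
    failures = {}
    try:
        for name, call in models:
            future = executor.submit(call, prompt)
            try:
                return name, future.result(timeout=timeout_s)
            except FutureTimeout:
                failures[name] = "timed out"  # model too slow: try the next one
            except Exception as exc:
                failures[name] = repr(exc)    # model down or errored: try the next one
        raise RuntimeError(f"all models failed: {failures}")
    finally:
        # Don't block on abandoned slow calls; they finish in the background.
        executor.shutdown(wait=False)


# Stub models standing in for real API clients.
def down_model(prompt: str) -> str:
    raise ConnectionError("service unavailable")


def slow_model(prompt: str) -> str:
    time.sleep(1.0)
    return "late answer"


def healthy_model(prompt: str) -> str:
    return f"echo: {prompt}"


name, reply = query_with_fallback(
    "hi",
    [("model-a", down_model), ("model-b", slow_model), ("model-c", healthy_model)],
    timeout_s=0.2,
)
print(name, reply)  # model-c echo: hi
```

Running each call in a worker thread lets the router stop waiting after `timeout_s`; the abandoned call keeps running in the background, which a production client would more likely cancel at the HTTP layer.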

Keywords AI

If you want a more complete platform, Keywords AI offers a unified DevOps environment for building, deploying and monitoring LLM-based AI applications. It provides a single API endpoint for multiple models, lets you make multiple calls in parallel without waiting for each response, integrates with OpenAI APIs and includes tools for rapid development and performance monitoring. It's designed to let developers concentrate on building products rather than infrastructure, which makes it a good option for AI startups.
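Making several model calls in parallel, rather than waiting for each response in turn, is a standard async pattern. The sketch below uses Python's `asyncio.gather` with a stand-in coroutine; `call_model`, `fan_out`, the model names and the simulated delays are hypothetical, and a real client would replace the `asyncio.sleep` with a request to whichever endpoint you use (Keywords AI's own client API is not shown here).

```python
import asyncio
import time
from typing import List, Tuple


async def call_model(model: str, prompt: str, delay_s: float) -> str:
    """Hypothetical stand-in for one request to a unified LLM endpoint."""
    await asyncio.sleep(delay_s)  # simulates network and generation time
    return f"{model}: {prompt}"


async def fan_out(prompt: str, models: List[Tuple[str, float]]) -> List[str]:
    """Send the same prompt to several models concurrently."""
    # All coroutines are scheduled at once; gather waits for every result
    # and returns them in the order the coroutines were passed in.
    return list(await asyncio.gather(*(call_model(m, prompt, d) for m, d in models)))


start = time.monotonic()
results = asyncio.run(
    fan_out("Summarize this ticket", [("model-a", 0.3), ("model-b", 0.5), ("model-c", 0.2)])
)
elapsed = time.monotonic() - start
print(results)
print(f"{elapsed:.1f}s")  # roughly the slowest call (~0.5s), not the sum (1.0s)
```

Because the three simulated calls overlap, the batch finishes in about the time of the slowest call instead of the sum of all three.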