Question: I'm looking for a service that provides on-demand access to machine learning-capable computing resources without requiring a lot of setup, do you know of any options?

RunPod

One good option is RunPod. It's a cloud platform for training and running AI models. You can easily spin up GPU pods and pick from a range of GPUs. RunPod also offers serverless ML inference with autoscaling and job queuing so you can run your models with minimal setup. The service comes with more than 50 preconfigured templates and a CLI tool for easy provisioning and deployment. It charges by the hour, starting at $0.39, with no egress or ingress fees.

Modelbit

Another good option is Modelbit, an ML engineering platform that lets you deploy your own and open-source ML models to autoscaling infrastructure. Modelbit comes with built-in MLOps tools and supports a wide range of ML models, including computer vision and language models. It charges for on-demand computing at $0.15/CPU minute and $0.65/GPU minute, so it's a good option if you want flexibility for your ML workloads.

Anyscale

If you want a platform that works on the cloud or on-premise, check out Anyscale. It offers workload scheduling, cloud flexibility, and smart instance management. Anyscale is based on the open-source Ray framework and supports a wide range of AI models, with reported cost savings of up to 50% on spot instances. It also offers native integration with popular IDEs and streamlined workflows for running and testing code at scale.

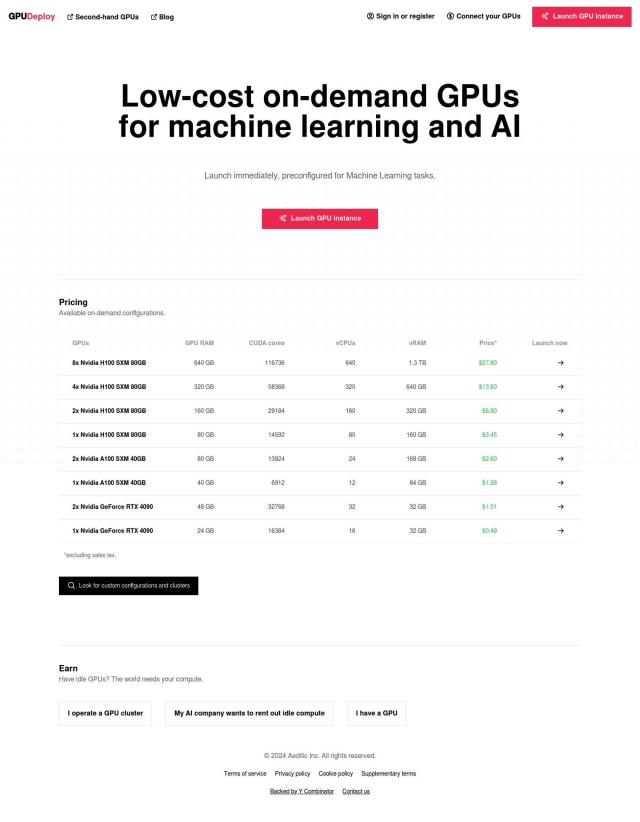

GPUDeploy

Last, GPUDeploy offers on-demand, pay-by-the-minute GPU instances optimized for machine learning and AI workloads. It offers a range of preconfigured instances, including combinations of different GPUs, memory and vCPUs. The service is good for developers and researchers who need to quickly get access to ML-capable hardware without much setup hassle.