Question: Is there a no-code platform that allows data scientists to easily understand data quality, label datasets, and evaluate models based on various metrics?

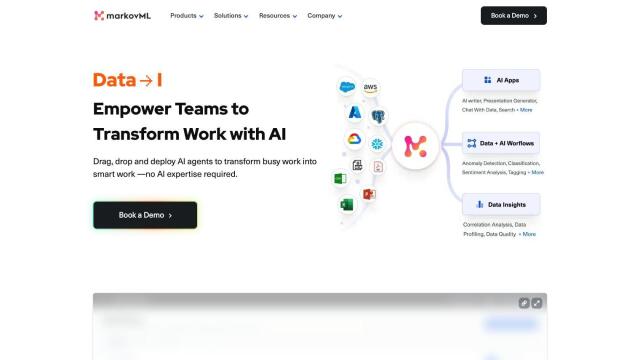

MarkovML

For a no-code platform that makes it easy for data scientists to assess data quality, label datasets and evaluate models with different metrics, MarkovML is a good choice. It combines AI-infused data analysis, no-code app building and automated data workflows, so you can get through these tasks quickly. It also offers AI-powered data insights and collaboration tools so data scientists can work on them efficiently.

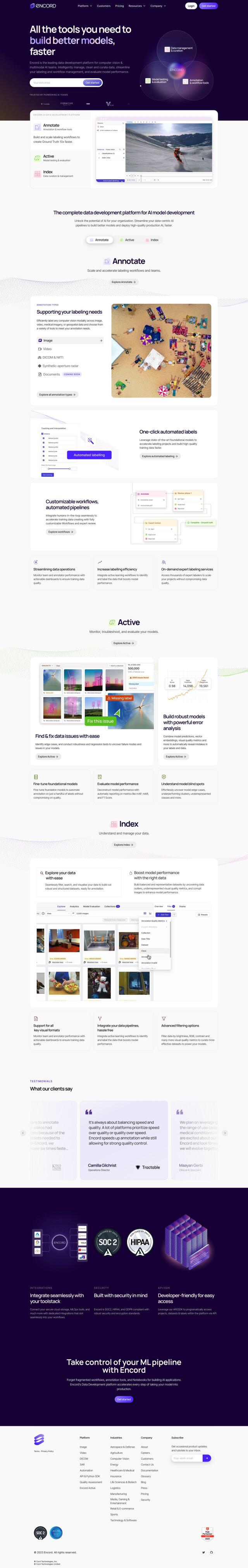

Encord

Another good option is Encord, which offers a full-stack data development environment for building and running AI applications. It includes tools for ingesting, cleaning, curating and auto-labeling data, and for evaluating model performance. With Annotate for auto-labeling, Active for monitoring model performance and Index for data management, Encord streamlines the AI development lifecycle and is aimed at producing high-quality training data and better model performance.

SuperAnnotate

SuperAnnotate is also worth considering. It is an end-to-end enterprise platform for training, evaluating and deploying AI models. It imports data from local and cloud storage and provides customizable interfaces for different GenAI tasks, along with AI, QA and project management tools. The platform's global marketplace of vetted annotation teams and its detailed data insights make it a strong choice for creating high-quality datasets and evaluating model performance.

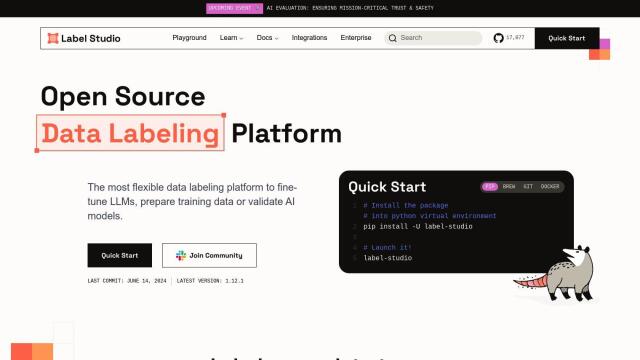

Label Studio

If you want a flexible, open-source option, consider Label Studio, a data labeling tool that handles different types of data and integrates with cloud storage systems. Its features include ML-assisted labeling, customizable layouts and advanced filtering, which help data scientists create high-quality training data. Its community-driven development and wealth of support resources make it a popular choice among data scientists.