Question: How can I improve the observability of my LLM projects and get better insights into their performance?

Athina

Athina, a full-stack platform for experimenting with, measuring and optimizing AI applications, is a good option for enterprise GenAI teams. Its features include real-time monitoring, cost tracking, customizable alerts and LLM observability. It supports several frameworks and offers flexible pricing, so it should suit teams both large and small.

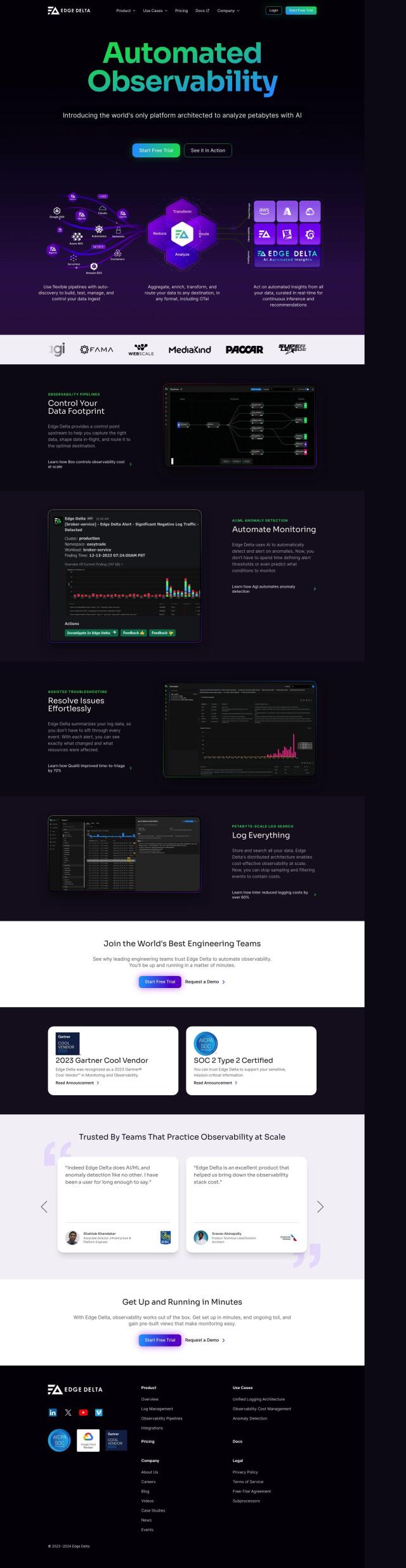

Edge Delta

Another option is Edge Delta, an automated observability platform that monitors services, detects problems and uses AI-assisted data analysis to help you trace them to their root causes. Its features include automated real-time insights, AI/ML anomaly detection and an easy-to-use interface. It can run high-performance queries over log data and is built to scale.

HoneyHive

If you want a tool focused specifically on LLMs, HoneyHive is an AI evaluation, testing and observability platform aimed at mission-critical applications. It includes automated CI testing, production pipeline monitoring, dataset curation and prompt management. HoneyHive also integrates with popular GPU clouds and offers several pricing tiers, including a free Developer plan.

Langfuse

Finally, Langfuse is an open-source LLM engineering platform with tools for debugging, analysis and iteration. It offers tracing, prompt management, evaluation and analytics, supports multiple integrations, and holds security certifications such as SOC 2 Type II and ISO 27001.
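To make the tracing and cost-tracking features mentioned for these platforms more concrete, here is a minimal, vendor-neutral sketch in Python of the kind of data they record for each LLM call. It is not the API of Langfuse, Athina or any other tool listed here. The `Tracer` and `Span` classes, the `fake_llm` stand-in and the per-1K-token prices are all hypothetical, chosen only for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Span:
    """One recorded LLM call (hypothetical structure, for illustration only)."""
    name: str
    latency_s: float
    prompt_tokens: int
    completion_tokens: int
    cost_usd: float


@dataclass
class Tracer:
    # Hypothetical prices per 1,000 tokens; real prices depend on provider and model.
    prompt_price_per_1k: float
    completion_price_per_1k: float
    spans: List[Span] = field(default_factory=list)

    def record(self, name: str, call: Callable[[str], tuple], prompt: str) -> str:
        """Run call(prompt), which must return (text, prompt_tokens, completion_tokens),
        and store a span with its latency, token counts and estimated cost."""
        start = time.perf_counter()
        text, p_tok, c_tok = call(prompt)
        latency = time.perf_counter() - start
        cost = (p_tok / 1000) * self.prompt_price_per_1k + (c_tok / 1000) * self.completion_price_per_1k
        self.spans.append(Span(name, latency, p_tok, c_tok, cost))
        return text

    def total_cost(self) -> float:
        """Sum the estimated cost of every recorded span."""
        return sum(s.cost_usd for s in self.spans)


def fake_llm(prompt: str) -> tuple:
    # Stand-in for a real model call: upper-cases the prompt, counts words as
    # "prompt tokens" and reports a fixed 5 completion tokens.
    return prompt.upper(), len(prompt.split()), 5


if __name__ == "__main__":
    tracer = Tracer(prompt_price_per_1k=0.5, completion_price_per_1k=1.5)
    tracer.record("summarize", fake_llm, "hello observability world")
    for span in tracer.spans:
        print(span)
    print(f"total cost: ${tracer.total_cost():.4f}")
```

A hosted platform does the same bookkeeping for you at scale, adding nested spans, dashboards and alerting on top of these raw records.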