Question: Is there a platform that offers a free serverless inference option for prototyping and evaluating language models?

Predibase

Predibase fits this requirement directly: it provides free serverless inference for up to 1 million tokens per day, which makes it suitable for prototyping and evaluating language models. The platform lets developers fine-tune and serve large language models cost-effectively, supports a variety of models, and uses pay-as-you-go pricing.
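To judge whether a prototype fits inside the daily allowance, a rough budget check can help. The sketch below is plain arithmetic, not a Predibase API call. It assumes that prompt and completion tokens both count toward the 1 million token allowance, which the text above does not confirm, and the function name and the per-request token count are hypothetical.

```python
# Daily free allowance quoted above (tokens per day).
FREE_TOKENS_PER_DAY = 1_000_000


def requests_within_free_tier(tokens_per_request: int,
                              free_tokens: int = FREE_TOKENS_PER_DAY) -> int:
    """Return how many whole requests fit in the daily free allowance.

    Assumption: every token in a request (prompt plus completion)
    counts against the allowance.
    """
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return free_tokens // tokens_per_request


# Hypothetical workload: about 600 prompt tokens + 200 completion tokens.
print(requests_within_free_tier(800))  # 1250
```

Under these assumptions, an evaluation set of a few hundred prompts would fit comfortably within a single day.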

AIML API

AIML API is another option. It offers serverless inference and access to over 100 AI models through a single API. Pricing is based on token usage and starts at $0.45 per million tokens, which keeps costs simple and predictable. The service is built to be scalable and reliable, which suits advanced machine learning projects.
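Token-based pricing is easy to estimate up front. The following sketch works through the arithmetic at the $0.45 per million tokens starting rate. It is not an AIML API client: the function name and the monthly token volume are hypothetical, and actual rates may differ by model.

```python
# Starting rate quoted above, in USD per one million tokens.
PRICE_PER_MILLION_TOKENS = 0.45


def estimate_cost(total_tokens: int,
                  price_per_million: float = PRICE_PER_MILLION_TOKENS) -> float:
    """Estimate the cost in USD for a given number of tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * price_per_million


# Hypothetical workload: 50 million tokens in a month.
monthly_tokens = 50_000_000
print(f"${estimate_cost(monthly_tokens):.2f}")  # $22.50
```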

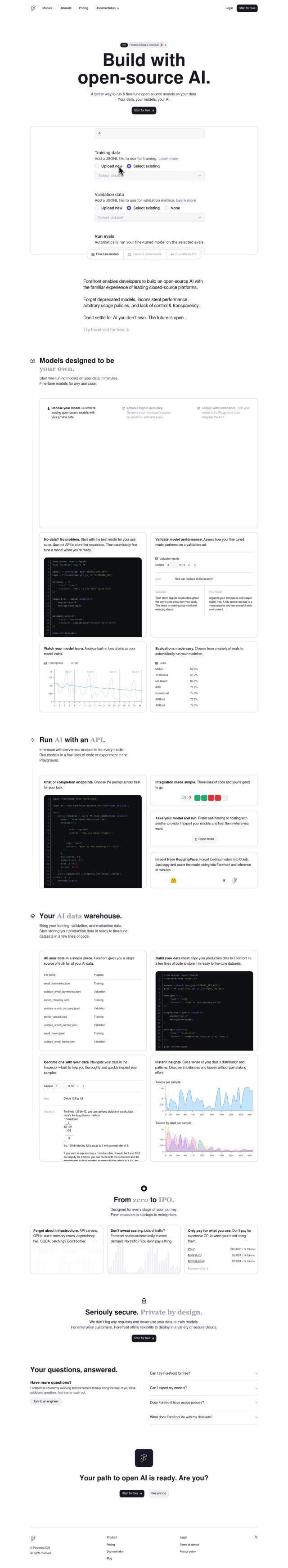

Forefront

Forefront targets developers who want to fine-tune open-source language models quickly: it adapts models in minutes without complex infrastructure setup. It provides serverless endpoints for easy integration, flexible deployment options, and a free trial for evaluating the platform before committing.