Question: Is there a tool that allows me to benchmark and evaluate large language model applications with different data storage providers?

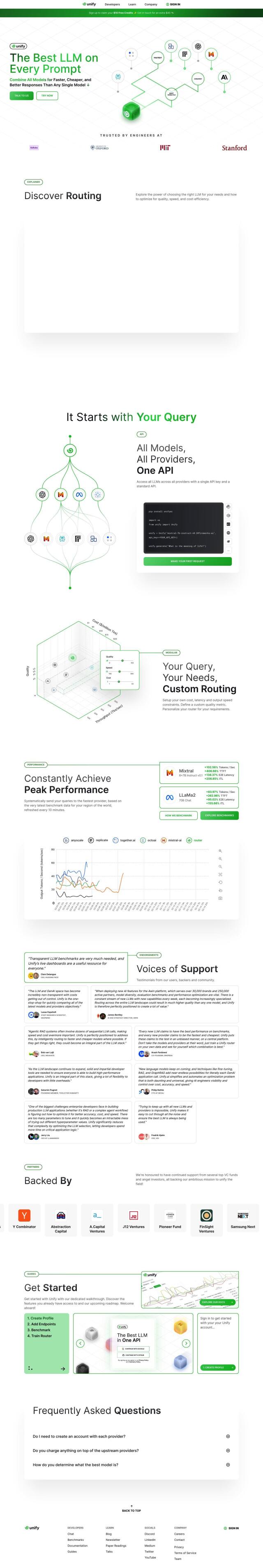

Unify

Unify is a dynamic routing service that can optimize LLM apps by sending each prompt to the most suitable endpoint across a variety of providers, all with a single API key. It's got live benchmarks, and it lets you set limits on cost, latency and output speed as well as define your own quality metrics. That can improve output quality, increase flexibility, make better use of computing resources and speed up development.
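
To make the routing idea concrete, here is a minimal sketch of constraint-based endpoint selection in plain Python. It is not Unify's API: the `Endpoint` class, the `pick_endpoint` function, the provider names and all the numbers are made up for illustration. It only shows the general pattern of filtering endpoints by cost and latency limits and then taking the best one by your own quality score.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical benchmark snapshot for one model endpoint."""
    name: str
    quality: float             # score on your own metric, higher is better
    cost_per_1k_tokens: float  # USD
    latency_ms: float          # time to first token


def pick_endpoint(endpoints, max_cost, max_latency_ms) -> Optional[Endpoint]:
    """Return the highest-quality endpoint within both limits, or None."""
    eligible = [
        e for e in endpoints
        if e.cost_per_1k_tokens <= max_cost and e.latency_ms <= max_latency_ms
    ]
    return max(eligible, key=lambda e: e.quality, default=None)


# Made-up figures, for illustration only.
ENDPOINTS = [
    Endpoint("provider-a/large", quality=0.92, cost_per_1k_tokens=0.030, latency_ms=900),
    Endpoint("provider-b/medium", quality=0.85, cost_per_1k_tokens=0.004, latency_ms=350),
    Endpoint("provider-c/small", quality=0.71, cost_per_1k_tokens=0.001, latency_ms=120),
]

choice = pick_endpoint(ENDPOINTS, max_cost=0.01, max_latency_ms=500)
print(choice.name if choice else "no endpoint satisfies the limits")
```

With these sample numbers the large model is ruled out by both limits, so the medium one wins on quality. A real router would refresh the benchmark figures continuously rather than hard-coding them.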

LlamaIndex

Another good choice is LlamaIndex, a data framework that lets you connect your own data sources to LLMs for use in your production apps. It supports more than 160 data sources and 40 storage providers, so it should work for lots of use cases, such as financial services analysis and chatbots. It's got tools for loading data, indexing it, querying it and evaluating performance, and it's available in free and paid tiers, plus a more powerful enterprise option.
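
If you want a feel for what comparing storage backends looks like, here is a small standard-library sketch of the load, index, query and evaluate loop. It does not use LlamaIndex: `ScanStore`, `InvertedIndexStore`, `benchmark`, the documents and the test queries are all hypothetical stand-ins. The point is just that each backend sits behind the same `query` method, so the same cases can be run against each one and scored on hit rate and latency.

```python
import time
from collections import defaultdict

# Toy corpus and (query, expected document id) pairs, invented for illustration.
DOCS = {
    "d1": "quarterly revenue grew eight percent",
    "d2": "the chatbot answers billing questions",
    "d3": "operating costs fell in the third quarter",
}
CASES = [("revenue growth", "d1"), ("billing chatbot", "d2"), ("operating costs", "d3")]


class ScanStore:
    """Toy backend: scores every document by word overlap."""

    def __init__(self, docs):
        self.docs = {doc_id: set(text.split()) for doc_id, text in docs.items()}

    def query(self, text):
        words = set(text.split())
        return max(self.docs, key=lambda doc_id: len(words & self.docs[doc_id]))


class InvertedIndexStore:
    """Toy backend: finds candidate documents through a word index."""

    def __init__(self, docs):
        self.index = defaultdict(set)
        for doc_id, text in docs.items():
            for word in text.split():
                self.index[word].add(doc_id)

    def query(self, text):
        votes = defaultdict(int)
        for word in text.split():
            for doc_id in self.index.get(word, ()):
                votes[doc_id] += 1
        return max(votes, key=votes.get) if votes else None


def benchmark(store, cases):
    """Return (hit rate, mean query latency in seconds) for one backend."""
    hits, elapsed = 0, 0.0
    for query, expected in cases:
        start = time.perf_counter()
        result = store.query(query)
        elapsed += time.perf_counter() - start
        hits += result == expected
    return hits / len(cases), elapsed / len(cases)


results = {cls.__name__: benchmark(cls(DOCS), CASES) for cls in (ScanStore, InvertedIndexStore)}
for name, (hit_rate, latency) in results.items():
    print(f"{name}: hit rate {hit_rate:.2f}, mean latency {latency * 1e6:.1f} microseconds")
```

In a real setup each class would wrap an actual storage provider, and the cases would come from your own data.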

BenchLLM

For evaluating model quality and monitoring for regressions, BenchLLM lets you test your app's outputs with automated, interactive or custom evaluation methods. It can integrate with OpenAI and other APIs to build test suites and generate quality reports, so it's a good choice for developers who need to keep their AI apps up to date and running well. It can also be integrated with CI/CD pipelines for continuous monitoring.
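
For a rough picture of what a regression test suite for an LLM app involves, here is a minimal sketch in plain Python. It is not BenchLLM's API: `Case`, `fake_app`, `exact_match` and `run_suite` are hypothetical names, and `fake_app` is a canned stand-in for a real model call. It runs each prompt through the app, checks the output with an automated evaluator and produces a small report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """One test: a prompt plus the answers that count as correct."""
    prompt: str
    expected: tuple


def fake_app(prompt: str) -> str:
    """Stand-in for the LLM app under test; replace with a real call."""
    canned = {"What is 1 + 1?": "2", "Capital of France?": "Paris"}
    return canned.get(prompt, "I don't know")


def exact_match(output: str, expected) -> bool:
    """Automated evaluator: case-insensitive match against accepted answers."""
    return output.strip().lower() in {answer.lower() for answer in expected}


def run_suite(app, cases, evaluate=exact_match) -> dict:
    """Run every case and summarize which prompts failed."""
    failed = [c.prompt for c in cases if not evaluate(app(c.prompt), c.expected)]
    return {
        "total": len(cases),
        "failed": failed,
        "pass_rate": 1 - len(failed) / len(cases),
    }


SUITE = [
    Case("What is 1 + 1?", ("2", "two")),
    Case("Capital of France?", ("Paris",)),
    Case("Capital of Spain?", ("Madrid",)),
]

report = run_suite(fake_app, SUITE)
print(report)
```

In a CI/CD job you would typically exit with a non-zero status when `pass_rate` drops below a threshold you choose, so a quality regression fails the build. Swapping `exact_match` for another function is where a custom or semantic evaluator would plug in.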