Question: Do you know of an AI platform that prioritizes transparency and responsible AI use, with a focus on security and ethics?

OneTrust

If you're looking for an AI platform that puts a premium on transparency and responsible AI use, with a focus on security and ethics, OneTrust is a good choice. It's a trust intelligence platform for the enterprise that spans privacy, data governance, security, ethics and ESG. Its features include data discovery, risk assessment, AI governance, and automation of security and privacy compliance. The platform can help companies build trust with stakeholders and get a clearer view of risk across the third-party lifecycle.

Google DeepMind

Another contender is Google DeepMind, a research lab that works on responsible AI systems. Its technology includes the Gemini models, which can process and understand text, code, images, audio and video, and can reason across these modalities. DeepMind addresses responsibility and safety in AI development with a framework for assessing and mitigating future risks. Developers can access these models through Google tools such as Google AI Studio and Google Cloud Vertex AI to build new products and services that use them responsibly.

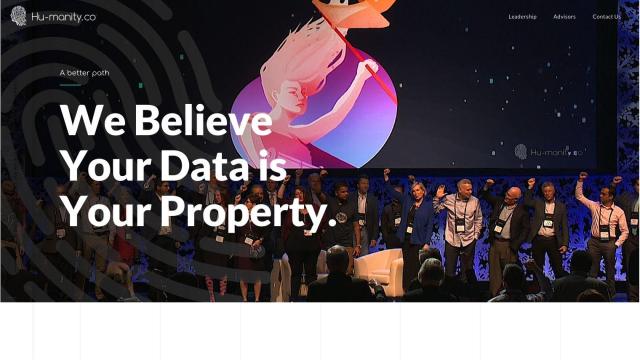

Hu-manity.co

If you want to improve privacy and transparency in digital commerce, Hu-manity.co has a platform that lets companies build trust and authenticity across digital channels. It's got features like data privacy contracting, data consumption policy digitization and identity system integration. The technology is designed to comply with regulations like GDPR and CCPA, and it's based on the idea that individuals have property rights over their own data.

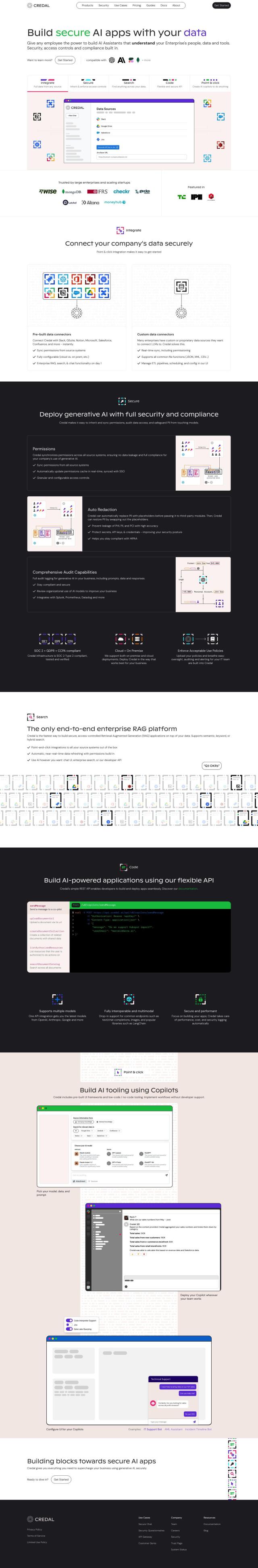

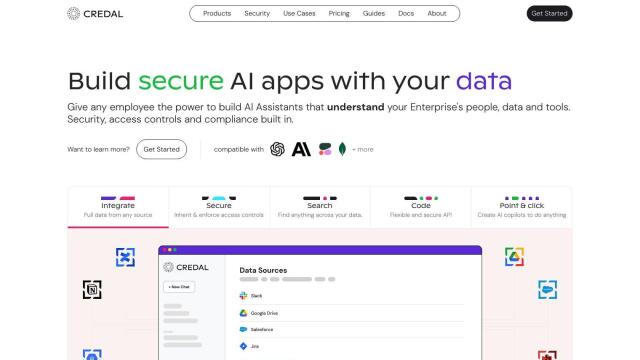

Credal

Last, Credal has a platform for building secure AI applications on a company's own data. It has built-in security, access controls and compliance features, plus point-and-click integration with a variety of data sources. Credal's Retrieval-Augmented Generation (RAG) technology is designed to support secure, enterprise-ready AI applications, which makes it a good option for businesses that want to use AI while keeping security and compliance under control.