Question: I'm looking for a system that can perform AI inference directly on devices to protect user data and reduce latency.

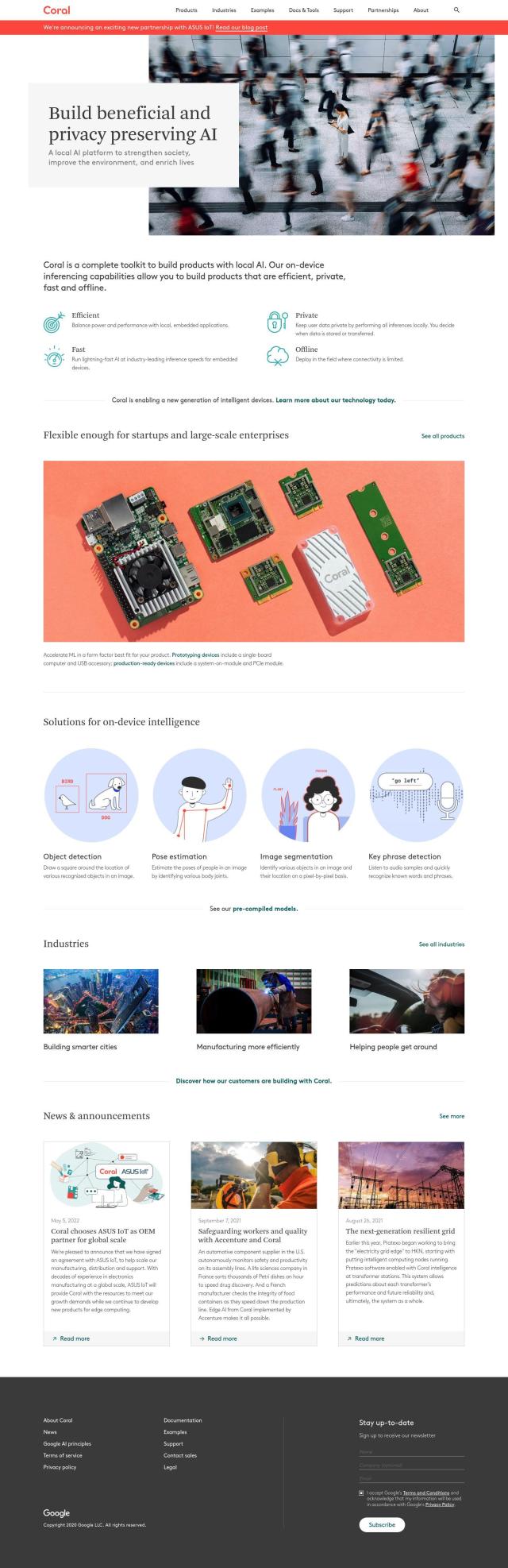

Coral

If you're looking for a system that runs AI inference directly on the device to protect user data and lower latency, Coral is a good choice. Coral is a local AI platform for on-device inference across many industries. Because models run on the device itself, user data does not have to be sent to a remote server, and there is no network round trip adding to response time. It supports TensorFlow Lite and runs on Debian Linux, macOS and Windows 10.

Numenta

For companies that want to run large AI models on CPUs, Numenta is worth a look. Its NuPIC system optimizes performance in real time and supports multi-tenancy, so you can run hundreds of models on a single server. It is suited to use cases such as gaming and customer support, offering high performance and scalability on CPU-only systems while keeping data private and under your control.

Groq

Finally, Groq offers a hardware and software platform for high-performance, energy-efficient AI compute. Its LPU Inference Engine can run in the cloud or on-premises, so it provides fast inference on servers rather than on end-user devices. The platform is optimized for low power usage, which can help companies cut energy costs while meeting their AI compute needs.