Question: Can you recommend a platform that accelerates the finetuning process of Stable Diffusion models?

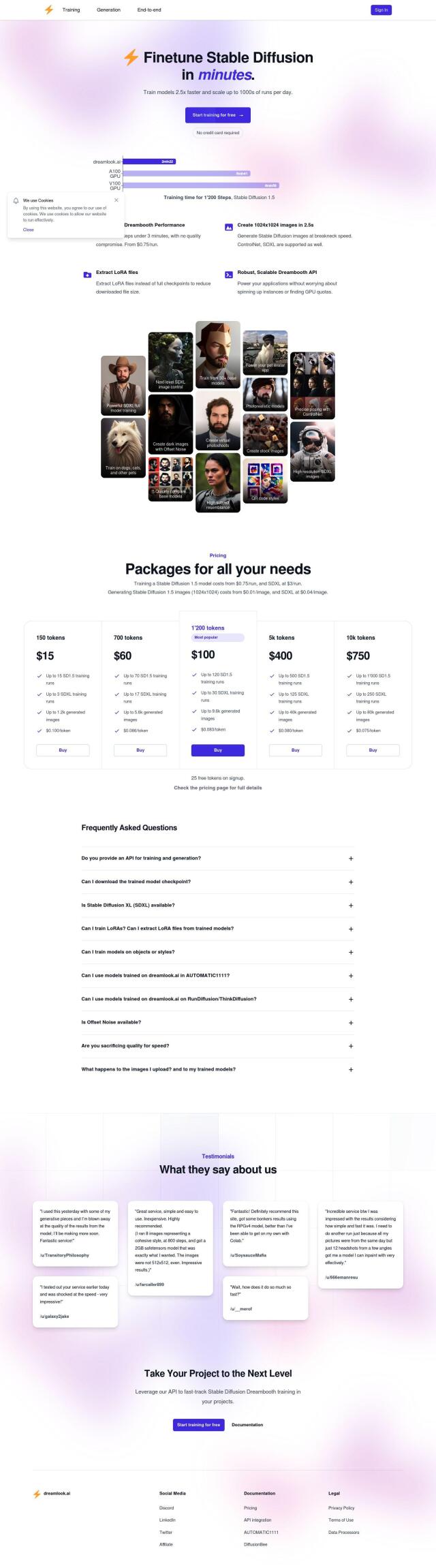

DreamLook

If you want a platform to speed up fine-tuning of Stable Diffusion models, DreamLook is a good option. It advertises training that is 2.5x faster and the capacity to run thousands of jobs a day. Its features include strong Dreambooth performance, fast image generation, and a full-featured API. It can also extract LoRA files, which gives you smaller files to download than full checkpoints. Pricing comes in several tiers, starting at $0.75 per training run for the Stable Diffusion 1.5 model and $0.01 per generated image.
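To get a rough feel for what those starting prices mean for a small project, here is a minimal sketch of the arithmetic. It assumes the entry-level rates quoted above ($0.75 per Stable Diffusion 1.5 training run and $0.01 per image) apply uniformly; real bills may differ by tier, model, or volume. The function name `estimate_cost_usd` is made up for illustration and is not part of any DreamLook API.

```python
# Starting prices quoted above, stored in cents to avoid floating-point drift.
# Assumption: these rates apply to every run and image, with no tier discounts.
TRAINING_RUN_CENTS = 75   # $0.75 per Stable Diffusion 1.5 training run
IMAGE_CENTS = 1           # $0.01 per generated image


def estimate_cost_usd(training_runs: int, images: int) -> float:
    """Return an estimated total in US dollars (hypothetical helper)."""
    if training_runs < 0 or images < 0:
        raise ValueError("counts must be non-negative")
    total_cents = training_runs * TRAINING_RUN_CENTS + images * IMAGE_CENTS
    return total_cents / 100


if __name__ == "__main__":
    # Example: 3 training runs plus 200 generated images.
    print(f"${estimate_cost_usd(3, 200):.2f}")
```

With these assumed rates, training dominates the cost for small jobs: one training run costs as much as 75 generated images.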

TuneMyAI

Another option is TuneMyAI, which automates fine-tuning and deployment of Stable Diffusion models. It uses NVIDIA A100 GPUs to fine-tune a model in less than 20 minutes and offers Hugging Face integration and support for multiple class types. The Standard plan costs $2.50 per fine-tune, which makes it a good fit for developers who want to shorten their ML model deployment workflow.

Fireworks

Fireworks can also help you fine-tune and deploy models quickly. It supports advanced image models such as Stable Diffusion 3 and SDXL, and scales from small teams to large businesses. Its inference is optimized with FireAttention, model deployment is flexible, and three pricing tiers cover a broad range of use cases.

Forefront

If you need to adapt and deploy open-source language models, Forefront is worth a look. It offers model adaptation in minutes, serverless endpoints for easy integration, and strong privacy and security controls. It suits researchers, startups, and enterprises that want to optimize models on their own datasets, and pricing is based on model usage.