Salad

If you're looking for a replacement for Aethir, consider Salad, a cloud-based service for deploying and managing production AI/ML models at scale. It offers a low-cost way to tap into thousands of consumer GPUs around the world, with scalability, a global edge network, and SOC 2 certification. Pricing starts at $0.02/hour for GTX 1650 GPUs, which makes Salad an inexpensive option for AI workloads.

Cerebrium

Another good option is Cerebrium, a serverless GPU infrastructure service for training and deploying machine learning models. Its pay-per-use pricing can be more economical than paying for idle, always-on GPUs. Cerebrium also offers a broad feature set, including a variety of GPU types, infrastructure as code, and real-time monitoring, so engineers can deploy and scale their models easily.

RunPod

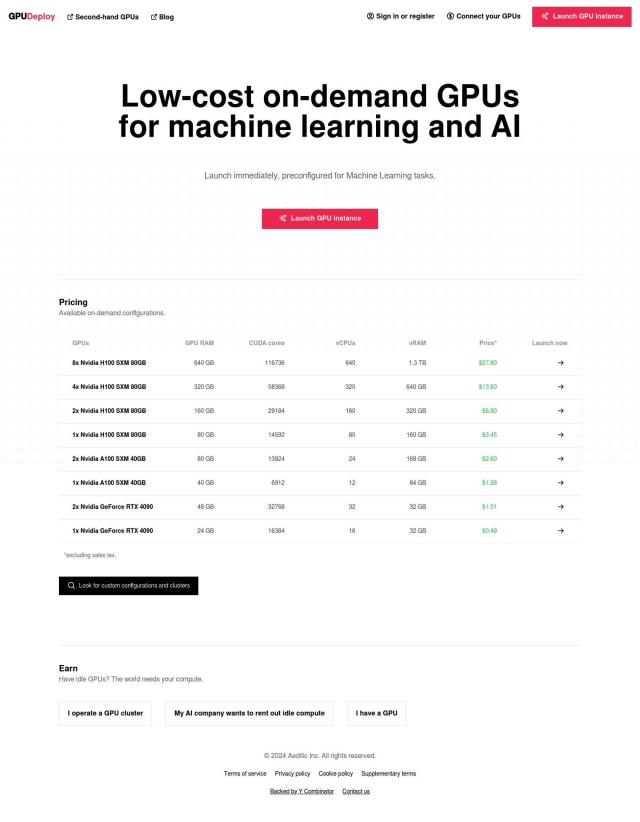

If you're looking for a globally distributed GPU cloud, check out RunPod. The service lets you spin up GPU pods on demand with a range of GPU options, run serverless ML inference, and choose from more than 50 preconfigured templates for popular frameworks. RunPod's pricing depends on the GPU instance type and usage, with costs ranging from $0.39 to $4.89 per hour.

NVIDIA

And of course, NVIDIA offers a range of products aimed at accelerating AI adoption across many industries. With NVIDIA Omniverse, the RTX AI Toolkit, and GeForce RTX GPUs, it provides a full suite for developing and deploying AI applications, making it a strong option for AI and high-performance computing work.