Question: I'm looking for a solution that reduces latency and network usage by running computations locally on edge devices.

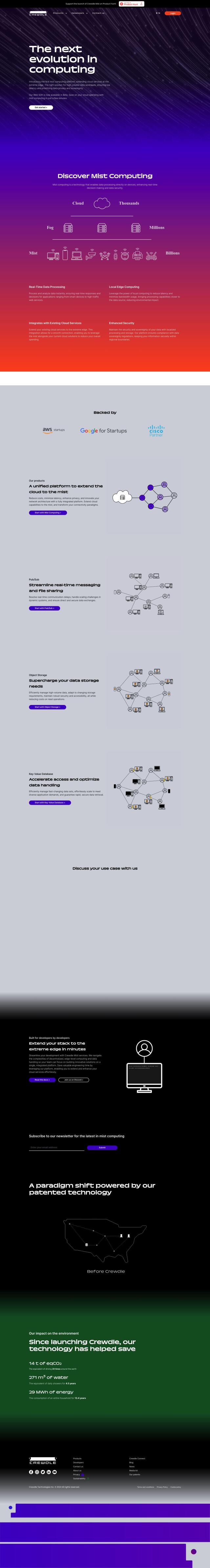

Crewdle

If you're trying to cut latency and network usage by running your computations on edge devices, Crewdle could be a good option. This mist computing platform extends cloud services to the edge, where data can be processed and analyzed in real time. Because data is processed and stored at the edge instead of being shipped to a central cloud, it reduces latency and network usage while also helping protect data security and sovereignty. Crewdle can also connect to existing cloud services, including Pub/Sub for real-time messaging and Object Storage for data storage.

ThirdAI

If you're interested in language models and other AI technology, check out ThirdAI. The platform gives you access to large language models without needing specialized hardware. Its offerings include document intelligence, customer experience enhancements and generative AI for summarizing documents. ThirdAI reports strong results on benchmark tasks such as sentiment analysis and information retrieval, with higher accuracy and lower latency than conventional methods.

Groq

Finally, Groq offers a hardware and software platform for AI compute that's fast and energy efficient. Its LPU Inference Engine can run in the cloud or on your own premises, which gives you flexibility in scaling up or down, so it suits anyone who needs fast AI inference across different deployment settings. Because the platform is designed for low power, it also reduces the energy consumed by AI processing.